Editor’s Note

Please note I am putting all my currently active development and latest updates into this newer post: Raspberry Pi photo frame using your pictures on your Google Drive II

Intro

All my spouse’s digital photo frames are either broken or nearly broken – probably she got them from garage sales. Regardless, they spend 99% of the time black. Now, since I had bought that Raspberry Pi PiDisplay a while back, and it is underutilized, and I know a thing or two about Linux, I felt I could create a custom photo frame with things I already have lying around – a Raspberry Pi 3, a PiDisplay, and my personal Google Drive. We make a point to copy all our cameras’ pictures onto the Google Drive, which we do the old-fashioned, by-hand way. After 17 years of digital photos we have about 40,000 of them, over 200 GB.

So I also felt obliged to create features you will never have in a commercial product, to make the effort worthwhile. I thought, what about randomly picking a few for display from amongst all the pictures, displaying that subset for a few days, and then moving on to a new randomly selected sample of images, etc? That should produce a nice review of all of them over time, eventually. You need an approach like that because you will never get to the end if you just try to display 40000 images in order!

Equipment

This work was done on a Raspberry Pi 3 running Raspbian Lite (more on that later). I used a display custom-built for the RPi (Amazon.com: Raspberry Pi 7″ Touch Screen Display: Electronics), though I believe any HDMI display would do.

The scripts

Here is the master file which I call master.sh.

#!/bin/sh

# DrJ 8/2019

# call this from cron once a day to refresh the random slideshow

RANFILE="random.list"

NUMFOLDERS=20

DISPLAYFOLDER="/home/pi/Pictures"

DISPLAYFOLDERTMP="/home/pi/Picturestmp"

SLEEPINTERVAL=3

DEBUG=1

STARTFOLDER="MaryDocs/Pictures and videos"

echo "Starting master process at "`date`

rm -rf $DISPLAYFOLDERTMP

mkdir $DISPLAYFOLDERTMP

#listing of all Google drive files starting from the picture root

if [ $DEBUG -eq 1 ]; then echo Listing all files from Google drive; fi

rclone ls remote:"$STARTFOLDER" > files

# filter down to only jpegs, lose the docs folders

if [ $DEBUG -eq 1 ]; then echo Picking out the JPEGs; fi

egrep '\.[jJ][pP][eE]?[gG]$' files |awk '$1 > 11000 {$1=""; print substr($0,2)}'|grep -i -v /docs/ > jpegs.list

# throw NUMFOLDERS or so random numbers for picture selection, select triplets of photos by putting

# names into a file

if [ $DEBUG -eq 1 ]; then echo Generate random filename triplets; fi

./random-files.pl -f $NUMFOLDERS -j jpegs.list -r $RANFILE

# copy over these 60 jpegs

if [ $DEBUG -eq 1 ]; then echo Copy over these random files; fi

cat $RANFILE|while read line; do

rclone copy remote:"${STARTFOLDER}/$line" $DISPLAYFOLDERTMP

sleep $SLEEPINTERVAL

done

# rotate pics as needed

if [ $DEBUG -eq 1 ]; then echo Rotate the pics which need it; fi

cd $DISPLAYFOLDERTMP; ~/rotate-as-needed.sh

cd ~

# kill any qiv slideshow

if [ $DEBUG -eq 1 ]; then echo Killing old qiv and fbi slideshow; fi

pkill -9 -f qiv

sudo pkill -9 -f fbi

pkill -9 -f m2.pl

# remove old pics

if [ $DEBUG -eq 1 ]; then echo Removing old pictures; fi

rm -rf $DISPLAYFOLDER

mv $DISPLAYFOLDERTMP $DISPLAYFOLDER

#run looping fbi slideshow on these pictures

if [ $DEBUG -eq 1 ]; then echo Start fbi slideshow in background; fi

cd $DISPLAYFOLDER ; nohup ~/m2.pl >> ~/m2.log 2>&1 &

if [ $DEBUG -eq 1 ]; then echo "And now it is "`date`; fi
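The egrep/awk/grep pipeline in master.sh is compact but a little cryptic. Here is a rough Python sketch of the same filtering idea, in case it helps to see it spelled out. It assumes each line of the rclone ls output is a size in bytes followed by the path; the function name filter_jpegs is made up for illustration and is not part of my scripts.

```python
import re

JPEG_RE = re.compile(r"\.jpe?g$", re.IGNORECASE)

def filter_jpegs(listing_lines, min_bytes=11000):
    """Return paths of JPEGs larger than min_bytes, skipping any /docs/ folder."""
    kept = []
    for line in listing_lines:
        # Split into size and the rest; the path itself may contain spaces.
        parts = line.strip().split(None, 1)
        if len(parts) != 2 or not parts[0].isdigit():
            continue
        size, path = int(parts[0]), parts[1]
        if not JPEG_RE.search(path):
            continue          # not a .jpg/.jpeg file
        if size <= min_bytes:
            continue          # too small, probably a thumbnail
        if "/docs/" in path.lower():
            continue          # lose the docs folders
        kept.append(path)
    return kept
```

The size threshold of 11000 bytes is the same one used in the awk step above.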

I call the following script random-files.pl:

#!/usr/bin/perl

use Getopt::Std;

my %opt=();

getopts("c:df:j:r:",\%opt);

$nofolders = $opt{f} ? $opt{f} : 20;

$DEBUG = $opt{d} ? 1 : 0;

$cutoff = $opt{c} ? $opt{c} : 5;

$cutoffS = 60*$cutoff;

$jpegs = $opt{j} ? $opt{j} : "jpegs.list";

$ranpicfile = $opt{r} ? $opt{r} : "jpegs-random.list";

print "d,f,j,r: $opt{d}, $opt{f}, $opt{j}, $opt{r}\n" if $DEBUG;

open(JPEGS,$jpegs) || die "Cannot open jpegs listing file $jpegs!!\n";

@jpegs = <JPEGS>;

# remove newline character

$nopics = chomp @jpegs;

open(RAN,"> $ranpicfile") || die "Cannot open random picture file $ranpicfile!!\n";

for($i=0;$i<$nofolders;$i++) {

$t = int(rand($nopics-2));

print "random number is: $t\n" if $DEBUG;

# a lot of our pics follow this naming convention

# 20160831_090658.jpg

($date,$time) = $jpegs[$t] =~ /(\d{8})_(\d{6})/;

if ($date) {

print "date, time: $date $time\n" if $DEBUG;

# ensure neighboring picture is at least five minutes different in time

$iPO = $iP = $diff = 0;

($hr,$min,$sec) = $time =~ /(\d\d)(\d\d)(\d\d)/;

$secs = 3600*$hr + 60*$min + $sec;

print "Pre-pic logic\n";

while ($diff < $cutoffS) {

$iP++;

$priorPic = $jpegs[$t-$iP];

$Pdate = $Ptime = 0;

($Pdate,$Ptime) = $priorPic =~ /(\d{8})_(\d{6})/;

($Phr,$Pmin,$Psec) = $Ptime =~ /(\d\d)(\d\d)(\d\d)/;

$Psecs = 3600*$Phr + 60*$Pmin + $Psec;

print "hr,min,sec,Phr,Pmin,Psec: $hr,$min,$sec,$Phr,$Pmin,$Psec\n" if $DEBUG;

$diff = abs($secs - $Psecs);

print "diff: $diff\n" if $DEBUG;

# end our search if we happened upon different dates

$diff = 99999 if $Pdate ne $date;

}

# post-picture logic - same as pre-picture

print "Post-pic logic\n";

$diff = 0;

while ($diff < $cutoffS) {

$iPO++;

$postPic = $jpegs[$t+$iPO];

$Pdate = $Ptime = 0;

($Pdate,$Ptime) = $postPic =~ /(\d{8})_(\d{6})/;

($Phr,$Pmin,$Psec) = $Ptime =~ /(\d\d)(\d\d)(\d\d)/;

$Psecs = 3600*$Phr + 60*$Pmin + $Psec;

print "hr,min,sec,Phr,Pmin,Psec: $hr,$min,$sec,$Phr,$Pmin,$Psec\n" if $DEBUG;

$diff = abs($Psecs - $secs);

print "diff: $diff\n" if $DEBUG;

# end our search if we happened upon different dates

$diff = 99999 if $Pdate ne $date;

}

} else {

$iP = $iPO = 2;

}

$priorPic = $jpegs[$t-$iP];

$Pic = $jpegs[$t];

$postPic = $jpegs[$t+$iPO];

print RAN qq($priorPic
$Pic
$postPic
);

}

close(RAN);

Bunch of simple python scripts

I call this one getinfo.py:

#!/usr/bin/python3

import os,sys

from PIL import Image

from PIL.ExifTags import TAGS

for (tag,value) in Image.open(sys.argv[1])._getexif().items():

    print ('%s = %s' % (TAGS.get(tag), value))

And here’s rotate.py:

#!/usr/bin/python3

import PIL, os

import sys

from PIL import Image

picture= Image.open(sys.argv[1])

# if orientation is 6, rotate clockwise 90 degrees

picture.rotate(-90,expand=True).save("rot_" + sys.argv[1])

While here is rotatecc.py:

#!/usr/bin/python3

import PIL, os

import sys

from PIL import Image

picture= Image.open(sys.argv[1])

# if orientation is 8, rotate counterclockwise 90 degrees

picture.rotate(90,expand=True).save("rot_" + sys.argv[1])

And rotate-as-needed.sh:

#!/bin/sh

# DrJ 12/2020

# some of our downloaded files will be sideways, and fbi doesn’t auto-rotate them as far as I know

# assumption is that our current directory is the one where we want to alter files

ls -1|while read line; do

echo fileis "$line"

o=`~/getinfo.py "$line"|grep -ai orientation|awk '{print $NF}'`

echo orientation is $o

if [ "$o" = "6" ]; then

echo "90 clockwise is needed, o is $o"

# rotate and move it

~/rotate.py "$line"

mv rot_"$line" "$line"

elif [ "$o" = "8" ]; then

echo "90 counterclock is needed, o is $o"

# rotate and move it

~/rotatecc.py "$line"

mv rot_"$line" "$line"

fi

done

And finally, m2.pl:

#!/usr/bin/perl

# show the pics ; rotate the screen as needed

# for now, assume the display is in a neutral

# orientation at the start

use Time::HiRes qw(usleep);

$DEBUG = 1;

$delay = 6; # seconds between pics

$mdelay = 200; # milliseconds

$mshow = "$ENV{HOME}/mediashow";

$pNames = "$ENV{HOME}/pNames";

# pics are here

$picsDir = "$ENV{HOME}/Pictures";

chdir($picsDir);

system("ls -1 > $pNames");

# further massage names

open(TMP,"$pNames");

@lines = <TMP>;

foreach (@lines) {

chomp;

$filesNullSeparated .= $_ . "\0";

}

open(MS,">$mshow") || die "Cannot open mediashow file $mshow!!\n";

print MS $filesNullSeparated;

close(MS);

print "filesNullSeparated: $filesNullSeparated\n" if $DEBUG;

$cn = @lines;

print "$cn files\n" if $DEBUG;

# throw up a first picture - all black. Trick to make black bckgrd permanent

system("sudo fbi -a --noverbose -T 1 $ENV{HOME}/black.jpg");

system("sudo fbi -a --noverbose -T 1 $ENV{HOME}/black.jpg");

sleep(1);

system("sleep 2; sudo killall fbi");

# start infinitely looping fbi slideshow

for (;;) {

# then start slide show

# shell echo cannot work with null character so we need to use a file to store it

#system("cat $picNames|xargs -0 qiv -DfRsmi -d $delay \&");

system("sudo xargs -a $mshow -0 fbi -a --noverbose -1 -T 1 -t $delay ");

# fbi runs in background, then exits, so we need to monitor if it's still alive

# wait appropriate estimated amount of time, then look aggressively for fbi

sleep($delay*($cn - 2));

for(;;) {

open(MON,"ps -ef|grep fbi|grep -v grep|") || die "Cannot launch ps -ef!!\n";

$match = <MON>;

if ($match) {

print "got fbi match\n" if $DEBUG > 1;

} else {

print "no fbi match\n" if $DEBUG;

# fbi not found

last;

}

close(MON);

print "usleeping, noexist is $noexit\n" if $DEBUG > 1;

usleep($mdelay * 1000); # usleep takes microseconds, $mdelay is in milliseconds

} # end loop testing if fbi has exited

} # close of infinite loop
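One detail in m2.pl that is easy to miss: the file names are written to a file separated by NUL characters so that xargs -0 can hand names containing spaces to fbi intact. A minimal Python sketch of that one step follows. The function name write_nul_separated is hypothetical and this is an illustration, not a replacement for m2.pl.

```python
import os

def write_nul_separated(names, out_path):
    """Write each name followed by a NUL byte, the format `xargs -0` expects."""
    with open(out_path, "wb") as fh:
        for name in names:
            # Encode the name the same way the filesystem does, then terminate it.
            fh.write(os.fsencode(name) + b"\0")
```

A file written this way could then be fed to xargs with the same -a and -0 options that m2.pl uses.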

You’ll need to make these files executable. Something like this should work:

$ chmod +x *.py *.pl *.sh

My crontab file looks like this (you edit crontab using the crontab -e command):

@reboot sleep 25; cd ~ ; ./m2.pl >> ./m2.log 2>&1

24 16 * * * ./master.sh >> ./master.log 2>&1

This invokes master.sh once a day at 4:24 PM to refresh the 60 photos. My refresh took about 13 minutes the other day, but the old slideshow keeps playing until almost the last second, so it’s OK.

The nice thing about this approach is that fbi works with a lightweight OS – Raspbian Lite is fine, you’ll just need to install a few packages. My SD card is unstable or something, so I have to re-install the OS periodically. An install of Raspberry Pi Lite on my RPi 4 took 11 minutes. Anyway, fbi is installed via:

$ sudo apt-get install fbi

But if your RPi is freshly installed, you may first need to do a

$ sudo apt-get update && sudo apt-get upgrade

python image manipulation

The drawback of this approach, i.e., not using qiv, is that we gotta do some image manipulation, for which python is the best candidate. I’m going by memory. I believe I installed python3, perhaps as sudo apt-get install python3. Then I needed pip3: sudo apt-get install python3-pip. Then I needed to install Pillow using pip3: sudo pip3 install Pillow.

m2.pl refers to a black.jpg file. It’s not a disaster to not have that, but under some circumstances it may help.
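If you don’t have a black.jpg handy, Pillow can make one. This is just a sketch: the function name make_black_jpg is made up, and the default size of 800x480 is my assumption based on the 7″ Pi display, so adjust it for your screen.

```python
def make_black_jpg(path, size=(800, 480)):
    """Save a solid black JPEG of the given (width, height) at path."""
    from PIL import Image  # Pillow, imported here so the file loads even without it
    Image.new("RGB", size, "black").save(path)
```

Calling make_black_jpg("/home/pi/black.jpg") would put the file where m2.pl looks for it.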

Many of my photos do not have EXIF information, yet they can still be displayed. So for those photos running getinfo.py will produce an error (but the processing of the other photos will continue.)
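For what it’s worth, the three small rotation scripts could probably be folded into one that quietly skips photos without EXIF data. Below is a sketch of how that might look, assuming a Pillow version recent enough to have Image.getexif(). The names rotation_for_orientation and rotate_in_place are hypothetical, and the handling of orientation 3 (upside down) is an extra that my scripts above do not do.

```python
ORIENTATION_TAG = 0x0112  # EXIF tag number for Orientation

# Pillow's rotate() turns counterclockwise for positive angles.
ROTATION_FOR_ORIENTATION = {3: 180, 6: -90, 8: 90}

def rotation_for_orientation(orientation):
    """Return the angle to pass to Image.rotate(), or 0 if no rotation is needed."""
    return ROTATION_FOR_ORIENTATION.get(orientation, 0)

def rotate_in_place(path):
    """Rotate the image at path according to its EXIF orientation, if it has one."""
    from PIL import Image  # Pillow; imported here so the helper above has no dependencies
    with Image.open(path) as im:
        # getexif() returns an empty mapping when the photo has no EXIF data,
        # so .get() simply yields None and no rotation happens.
        angle = rotation_for_orientation(im.getexif().get(ORIENTATION_TAG))
        rotated = im.rotate(angle, expand=True) if angle else None
    if rotated is not None:
        rotated.save(path)
```

The angles for orientations 6 and 8 are the same ones used in rotate.py and rotatecc.py.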

I was originally rotating the display 90 degrees as needed to display the photos using the maximum amount of display real estate. But that all broke when I tried to revive it. And the cheap servo motor was noisy. But folks were pretty impressed when I demoed it, because I did get it to the point where it was indeed working correctly.

Picture selection methodology

There are 20 “folders” (random numbers), each yielding a triplet of three pictures, for 60 pictures in all. The idea is to give you additional context to help jog your memory. The triplets, with some luck, will often be from the same time period.
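To make the triplet idea concrete, here is a stripped-down Python sketch. It is a simplification: it always takes the immediate neighbors of the randomly chosen picture, whereas random-files.pl steps further out when the neighbors are too close in time. The function name pick_triplets is made up for this illustration, and the list needs at least three names.

```python
import random

def pick_triplets(names, folders=20, rng=random):
    """Pick `folders` random positions and return (previous, chosen, next) names for each."""
    triplets = []
    for _ in range(folders):
        # Stay one away from either end so both neighbours exist.
        i = rng.randrange(1, len(names) - 1)
        triplets.append((names[i - 1], names[i], names[i + 1]))
    return triplets
```

With folders=20 this yields the 60 file names that master.sh then copies down.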

I observed how many similar pictures are adjacent to each other amongst our total collection. To avoid identical pictures, I require the pictures to be five minutes apart in time. Well, I cheated. I don’t pull out the timestamp from the EXIF data as I should (at least not yet – future enhancement, perhaps). But I rely on a file-naming convention I notice is common – 20201227_134508.jpg, which basically is a timestamp-encoded name. The last six digits are HHMMSS in case it isn’t clear.
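Here is roughly how that timestamp check could look in Python, as a sketch only. The function names are hypothetical. One difference from random-files.pl: this compares full dates and times, whereas the Perl script compares times of day and stops searching as soon as the date changes.

```python
import re
from datetime import datetime

STAMP_RE = re.compile(r"(\d{8})_(\d{6})")

def parse_stamp(name):
    """Return a datetime parsed from a name like 20201227_134508.jpg, or None."""
    m = STAMP_RE.search(name)
    if not m:
        return None
    try:
        return datetime.strptime(m.group(1) + m.group(2), "%Y%m%d%H%M%S")
    except ValueError:
        return None  # digits were there but did not form a valid date/time

def far_enough_apart(name_a, name_b, cutoff_minutes=5):
    """True if the two names are at least cutoff_minutes apart, or cannot be compared."""
    a, b = parse_stamp(name_a), parse_stamp(name_b)
    if a is None or b is None:
        return True
    return abs((a - b).total_seconds()) >= cutoff_minutes * 60
```

Names that do not follow the convention are treated as far enough apart, since there is nothing to compare.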

Rclone

You must install the rclone package, sudo apt-get install rclone.

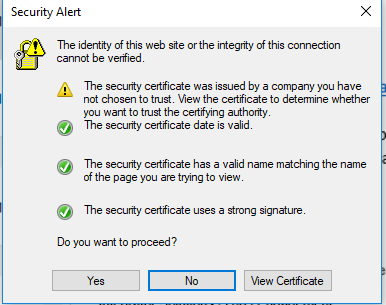

Can you configure rclone on a headless Raspberry Pi?

Indeed you can. I know because I just did it. You enable your Pi for ssh access. Do the rclone config using putty from a Windows 10 system. You’ll get a long Google URL in the course of configuring that you can paste into your browser. You verify it’s you, log into your Google account. Then you get back a url like http://127.0.0.1:5462/another-long-url-string. Well, put that url into your clipboard and in another login window, enter curl clipboard_contents

That’s what I did, not certain it would work, but I saw it go through in my rclone-config window, and that was that!

Don’t want to deal with rclone?

So you want to use a traditional flash drive you plug in to a USB port, just like you have for the commercial photo frames, but you otherwise like my approach of randomizing the picture selection each day? I’m sure that is possible. A mid-level linux person could rip out the rclone stuff I have embedded and replace as needed with filesystem commands. I’m imagining a colossal flash drive with all your tens of thousands of pictures on it where my random selection still adds value. If this post becomes popular enough perhaps I will post exactly how to do it.
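I have not built the flash drive version, so take this as an untested-in-production sketch of the one piece that would change the most: the file listing. Instead of rclone ls, you would walk the drive’s directory tree. The function name list_jpegs is made up, and wherever your drive is mounted (something like /media/usb, which is only a guess) is what you would pass as root.

```python
import os

def list_jpegs(root, min_bytes=11000):
    """Walk root and return paths (relative to root) of JPEGs larger than min_bytes."""
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if not name.lower().endswith((".jpg", ".jpeg")):
                continue
            full = os.path.join(dirpath, name)
            # Same size cutoff idea as the awk filter in master.sh.
            if os.path.getsize(full) > min_bytes:
                found.append(os.path.relpath(full, root))
    return sorted(found)
```

Writing the returned names one per line into jpegs.list would let random-files.pl run unchanged; the rclone copy step would then become a plain file copy.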

Getting started with this

After you’ve done all that and want to try it out, you can run

$ ./master.sh

First you should see a file called files growing in size – that’s rclone doing its listing. That takes a few minutes. Then it generates random numbers for photo selection – that’s very fast, maybe a second. Then it slowly copies over the selected images to a temporary folder called Picturestmp. That’s the slowest part. If you do a directory listing you should see the number of images in that directory growing slowly, adding maybe three per minute until it reaches 60 of them. Then the rotations are applied. But even if you didn’t set up your python environment correctly, it doesn’t crash. It effectively skips the rotations. A rotation takes a couple seconds per image. Finally all the images are copied over to the production area, the directory called Pictures; the old slideshow program is “killed,” and the new slideshow starts up. Whole process takes around 15 minutes.

I highly recommend running master.sh by hand as just described to make sure it all works. Probably some of it won’t. I don’t specialize in making recipes, more just guidance. But if you’re feeling really bold you can just power it up and wait a day (because initially you won’t have any pictures in your slideshow) and pray that it all works.

Tip: Undervoltage thunderbolt suppression

This is one of those topics where you’ll find a lot on the Internet, but little about what we need to do: How do we stop that thunderbolt that appears in the upper right corner from appearing?? First, the boilerplate warning. That thingy appears when you’re not delivering enough voltage. That condition can harm your SD Card, blah, blah. I’ve blown up a few SD cards myself. But, in practice, with my RPi 3, I’ve been running it with the Pi Display for 18 months with no mishaps. So, come on, let’s get crazy and suppress the darn thing. So… here goes. To suppress that yellow stroke of lightning, add these lines to your /boot/config.txt:

# suppress undervoltage thunderbolt – DrJ 8/21

# see http://rpf.io/configtxt

avoid_warnings=1
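Once the icon is hidden you lose the visual cue, so you may still want a way to check for undervoltage now and then. As far as I know, the vcgencmd get_throttled command prints a line like throttled=0x50005, where bit 0 means under-voltage right now and bit 16 means it has happened since boot. Here is a small sketch that decodes such a line; the function name parse_throttled is made up, and you should double-check the bit meanings against the Raspberry Pi documentation.

```python
UNDER_VOLTAGE_NOW = 1 << 0        # bit 0: under-voltage detected right now
UNDER_VOLTAGE_OCCURRED = 1 << 16  # bit 16: under-voltage has occurred since boot

def parse_throttled(output):
    """Parse a line like 'throttled=0x50005' into (now, since_boot) booleans."""
    value = int(output.strip().split("=", 1)[1], 16)
    return bool(value & UNDER_VOLTAGE_NOW), bool(value & UNDER_VOLTAGE_OCCURRED)
```

You would feed it the text printed by vcgencmd get_throttled.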

For good measure, if you are not using the HDMI port, you can save some energy by disabling HDMI:

$ tvservice -o

Still missing

I’d like to display a transition image when switching from the current set of photos to the new ones.

Suppressing boot up messages might be nice for some. Personally I think they’re kind of cool – makes it look like you’ve done a lot more techie work than you actually have!

You’re going to get some junk images. I’ve seen where an image is a thumbnail (I guess) and gets blown up full screen so that you see these giant blocks of pixels. I could perhaps magnify those kinds of images less.

Movies are going to be tricky so let’s not even go there…

I was thinking about making it a navigation-enabled photo frame, such as integration with a Gameboy controller. You could do some really awesome stuff: Pause this picture; display the location (town or city) where this photo was taken; refresh the slideshow. It sounds fantastical, but I don’t think it’s beyond the capability of even modestly capable hobbyist programmers such as myself.

I may still spin the frame 90 degrees this way and that. I have the servo mounted and ready. Just got to revive the control commands for it.

Appendix 1: rclone configuration

This is my actual rclone configuration session from January 2022.

rclone config

2022/01/17 19:45:36 NOTICE: Config file "/home/pi/.config/rclone/rclone.conf" not found - using defaults

No remotes found - make a new one

n) New remote

s) Set configuration password

q) Quit config

n/s/q> n

name> remote

Type of storage to configure.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / 1Fichier

\ "fichier"

2 / Alias for an existing remote

\ "alias"

3 / Amazon Drive

\ "amazon cloud drive"

4 / Amazon S3 Compliant Storage Provider (AWS, Alibaba, Ceph, Digital Ocean, Dreamhost, IBM COS, Minio, Tencent COS, etc)

\ "s3"

5 / Backblaze B2

\ "b2"

6 / Box

\ "box"

7 / Cache a remote

\ "cache"

8 / Citrix Sharefile

\ "sharefile"

9 / Dropbox

\ "dropbox"

10 / Encrypt/Decrypt a remote

\ "crypt"

11 / FTP Connection

\ "ftp"

12 / Google Cloud Storage (this is not Google Drive)

\ "google cloud storage"

13 / Google Drive

\ "drive"

14 / Google Photos

\ "google photos"

15 / Hubic

\ "hubic"

16 / In memory object storage system.

\ "memory"

17 / Jottacloud

\ "jottacloud"

18 / Koofr

\ "koofr"

19 / Local Disk

\ "local"

20 / Mail.ru Cloud

\ "mailru"

21 / Microsoft Azure Blob Storage

\ "azureblob"

22 / Microsoft OneDrive

\ "onedrive"

23 / OpenDrive

\ "opendrive"

24 / OpenStack Swift (Rackspace Cloud Files, Memset Memstore, OVH)

\ "swift"

25 / Pcloud

\ "pcloud"

26 / Put.io

\ "putio"

27 / SSH/SFTP Connection

\ "sftp"

28 / Sugarsync

\ "sugarsync"

29 / Transparently chunk/split large files

\ "chunker"

30 / Union merges the contents of several upstream fs

\ "union"

31 / Webdav

\ "webdav"

32 / Yandex Disk

\ "yandex"

33 / http Connection

\ "http"

34 / premiumize.me

\ "premiumizeme"

35 / seafile

\ "seafile"

Storage> 13

** See help for drive backend at: https://rclone.org/drive/ **

Google Application Client Id

Setting your own is recommended.

See https://rclone.org/drive/#making-your-own-client-id for how to create your own.

If you leave this blank, it will use an internal key which is low performance.

Enter a string value. Press Enter for the default ("").

client_id>

OAuth Client Secret

Leave blank normally.

Enter a string value. Press Enter for the default ("").

client_secret>

Scope that rclone should use when requesting access from drive.

Enter a string value. Press Enter for the default ("").

Choose a number from below, or type in your own value

1 / Full access all files, excluding Application Data Folder.

\ "drive"

2 / Read-only access to file metadata and file contents.

\ "drive.readonly"

/ Access to files created by rclone only.

3 | These are visible in the drive website.

| File authorization is revoked when the user deauthorizes the app.

\ "drive.file"

/ Allows read and write access to the Application Data folder.

4 | This is not visible in the drive website.

\ "drive.appfolder"

/ Allows read-only access to file metadata but

5 | does not allow any access to read or download file content.

\ "drive.metadata.readonly"

scope> 2

ID of the root folder

Leave blank normally.

Fill in to access "Computers" folders (see docs), or for rclone to use

a non root folder as its starting point.

Enter a string value. Press Enter for the default ("").

root_folder_id>

Service Account Credentials JSON file path

Leave blank normally.

Needed only if you want use SA instead of interactive login.

Leading ~ will be expanded in the file name as will environment variables such as ${RCLONE_CONFIG_DIR}.

Enter a string value. Press Enter for the default ("").

service_account_file>

Edit advanced config? (y/n)

y) Yes

n) No (default)

y/n>

Remote config

Use auto config?

Say Y if not sure

Say N if you are working on a remote or headless machine

y) Yes (default)

n) No

y/n> N

Please go to the following link: https://accounts.google.com/o/oauth2/auth?access_type=offline&client_id=202264815644.apps.googleusercontent.com&redirect_uri=urn%3Aietf%3Awg%3Aoauth%3A2.0%3Aoob&response_type=code&scope=https%3A%2F%2Fwww.googleapis.com%2Fauth%2Fdrive.readonly&state=2K-WjadN98dzSlx3rYOvUA

Log in and authorize rclone for access

Enter verification code> 4/1AX4XfWirusA-gk55nbbEJb8ZU9d_CKx6aPrGQvDJzybeVR9LOWOKtw_c73U

Configure this as a team drive?

y) Yes

n) No (default)

y/n>

[remote]

scope = drive.readonly

token = {"access_token":"ALTEREDARrdaM_TjUIeoKHuEMWCz_llH0DXafWh92qhGy4cYdVZtUv6KcwZYkn4Wmu8g_9hPLNnF1Kg9xoioY4F1ms7i6ZkyFnMxvBcZDaEwEs2CMxjRXpOq2UXtWmqArv2hmfM9VbgtD2myUGTfLkIRlMIIpiovH9d","token_type":"Bearer","refresh_token":"1//0dKDqFMvn3um4CgYIARAAGA0SNwF-L9Iro_UU5LfADTn0K5B61daPaZeDT2gu_0GO4DPP50QoxE65lUi4p7fgQUAbz8P5l_Rcc8I","expiry":"2022-01-17T20:50:38.944524945Z"}

y) Yes this is OK (default)

e) Edit this remote

d) Delete this remote

y/e/d> y

Current remotes:

Name Type

==== ====

remote drive

e) Edit existing remote

n) New remote

d) Delete remote

r) Rename remote

c) Copy remote

s) Set configuration password

q) Quit config

e/n/d/r/c/s/q> q

pi@raspberrypi:~ $

References and related

This 7″ display is a little small, but it’s great to get you started. It’s $64 at Amazon: Amazon.com: Raspberry Pi 7″ Touch Screen Display: Electronics

Is your Pi Display mentioned above blanking out after a few seconds? I have just the solution in this post.

I have an older approach using qiv which I lost the files for, and my blog post got corrupted. Hence this new approach.

In this slightly more sophisticated approach, I make a greater effort to separate the photos in time. But I also make a whole bunch of other improvements as well. But it’s a lot more files so it may only be appropriate for a more seasoned RPi command-line user.

My advanced slideshow treatment is beginning to take shape. I just add to it while I develop it, so check it periodically if that is of interest. Raspberry Pi advanced photo frame.