Intro

I have previously written how to copy all Azure DevOps (ADO) logs to a linux server. In this post I share a script I wrote which does a quality check on all the most recent pipeline runs. If there are any issues, a message is sent to a MS teams channel.

Let’s get into the details.

Preliminary details

I am using the api, needless to say. I cannot say I have mastered the api or even come close to understanding it. I however have leveraged the same api call I have previously used since I observed it contains a lot of interesting data.

conf_check_all.ini

This config file is written as json to make importing a breeze. You set up optional trigger conditions for the various pipeline runs you will have, or even whether or not to perform any checks on it at all.

{

"organization":"drjohns4ServicesCoreSystems",

"project":"Connectivity",

"url_base":"https://dev.azure.com/",

"url_params":"&api-version=7.1-preview.7",

"test_flag":false,

"run_ct_min":2,

"queue_time_max":1800,

"pipelines":{

"comment":{"maximum_processing_time_in_seconds":"integer_value","minimum_processing_time_in_seconds":"integer_value","(optional) check_flag - to potentially disable the checks for this pipeline":"either true or false"},

"default":{"max_proc_time":1800,"min_proc_time":3,"check_flag":true},

"feed_influxdb":{"max_proc_time":180,"min_proc_time":3},

"PAN-Usage4Mgrs":{"max_proc_time":900,"min_proc_time":60,"check_flag":true},

"PAN-Usage4Mgrs-2":{"max_proc_time":900,"min_proc_time":60},

"speed-up-sampling":{"max_proc_time":900,"min_proc_time":"2","check_flag":false},

"Pipeline_check":{"max_proc_time":45,"min_proc_time":"2","check_flag":false},

"Discover new vEdges":{"max_proc_time":3600,"min_proc_time":3,"check_flag":true},

}

}

So you see at the bottom is a dictionary where the keys are the names of the pipelines I am running, plus a default entry.

check_all_pipelines.py

#!/usr/bin/python3

# fetch raw log to local machine

# for relevant api section, see:

#https://learn.microsoft.com/en-us/rest/api/azure/devops/build/builds/get-build-log?view=azure-devops-rest-7.1

import urllib.request,json,sys,os

from datetime import datetime,timedelta

from modules import aux_modules

conf_file = sys.argv[1]

# pipeline uses UTC so we must follow suit or we will miss files

#a_day_ago = (datetime.utcnow() - timedelta(days = 1)).strftime('%Y-%m-%dT%H:%M:%SZ')

startup_delay = 30 # rough time in seconds before the pipeline even begins to execute our script

an_hour_ago = (datetime.utcnow() - timedelta(hours = 1, seconds = startup_delay)).strftime('%Y-%m-%dT%H:%M:%SZ')

print('An hour ago was (UTC)',an_hour_ago)

format = '%Y-%m-%dT%H:%M:%SZ'

#url = 'https://dev.azure.com/drjohns4ServicesCoreSystems/Connectivity/_apis/build/builds?minTime=2022-10-11T13:00:00Z&api-version=7.1-preview.7'

# dump config file into a dict

config_d = aux_modules.parse_config(conf_file)

test_flag = config_d['test_flag']

if test_flag:

print('config_d',config_d)

print('We are in a testing mode because test_flag is:',test_flag)

url_base = f"{config_d['url_base']}{config_d['organization']}/{config_d['project']}/_apis/build/builds"

url = f"{url_base}?minTime={an_hour_ago}{config_d['url_params']}"

#print('url',url)

req = urllib.request.Request(url)

req.add_header('Authorization', 'Basic ' + os.environ['ADO_AUTH'])

# Get buildIds for pipeline runs from last 1 hour

with urllib.request.urlopen(req) as response:

html = response.read()

txt_d = json.loads(html)

#{"count":215,"value":[{"id":xxx, "buildNumber":"20230203.107","status":"completed","result":"succeeded","queueTime":"2023-02-03T21:12:01.0865046Z","startTime":"2023-02-03T21:12:05.2177605Z","finishTime":"2023-02-03T21:17:28.1523128Z","definition":{"name":"PAN-Usage4Mgrs-2"

value_l = txt_d['value']

all_msgs = ''

header_msg = '**Recent pipeline issues**\n'

# check for too few pipeline runs

if len(value_l) <= config_d['run_ct_min']:

all_msgs = f"There have been fewer than expected pipeline runs this past hour. Greater than **{config_d['run_ct_min']}** runs are expected, but there have been only **{len(value_l)}** runs. \nSeomthing may be wrong. \n"

for builds in value_l:

msg = aux_modules.check_this_build(builds,config_d,url_base)

if msg: all_msgs = f"{all_msgs} \n{msg} \n"

if all_msgs:

if not test_flag: aux_modules.sendMessageToTeams(header_msg + all_msgs) # send to WebHook if not in a testing mode

print(header_msg + all_msgs)

else:

print('No recent pipeline errors')

Short explanation

I consider the code to be mostly self-explanatory. A cool thing I’m trying out here is the f- format specifier to write to a string kind of like sprintf. I run this script every hour from, yes, an ADO pipeline! But since this job looks for errors, including errors which indicate a systemic problem with the agent pool, I run it from a different agent pool.

aux_modules.py

import json,re

import os,urllib.request

from datetime import datetime,timedelta

import pymsteams

def parse_config(conf_file):

# config file should be a json file

f = open(conf_file)

config_d = json.load(f)

f.close()

return config_d

def get_this_log(config_d,name,buildId,build_number):

# leaving out the api-version etc works better

#GET https://dev.azure.com/{organization}/{project}/_apis/build/builds/{buildId}/logs/{logId}?api-version=7.1-preview.2

#https://dev.azure.com/drjohns4ServicesCoreSystems/d6338e-f5b4-45-6c-7b3a86/_apis/build/builds/44071/logs/7'

buildId_s = str(buildId)

log_name = config_d['log_dir'] + "/" + name + "-" + build_number

# check if we already got this one

if os.path.exists(log_name):

return

#url = url_base + organization + '/' + project + '/_apis/build/builds/' + buildId_s + '/logs/' + logId + '?' + url_params

url = config_d['url_base'] + config_d['organization'] + '/' + config_d['project'] + '/_apis/build/builds/' + buildId_s + '/logs/' + config_d['logId']

print('url for this log',url)

req = urllib.request.Request(url)

req.add_header('Authorization', 'Basic ' + config_d['auth'])

with urllib.request.urlopen(req) as response:

html = response.read()

#print('log',html)

print("Getting (name,build_number,buildId,logId) ",name,build_number,buildId_s,config_d['logId'])

f = open(log_name,"wb")

f.write(html)

f.close()

def check_this_build(builds,config_d,url_base):

format = '%Y-%m-%dT%H:%M:%SZ'

buildId = builds['id']

build_number = builds['buildNumber']

status = builds['status'] # normally: completed

result = builds['result'] # normally: succeeded

queueTime = builds['queueTime']

startTime = builds['startTime']

finishTime = builds['finishTime']

build_def = builds['definition']

name = build_def['name']

print('name,build_number,id',name,build_number,buildId)

print('status,result,queueTime,startTime,finishTime',status,result,queueTime,startTime,finishTime)

qTime = re.sub(r'\.\d+','',queueTime)

fTime = re.sub(r'\.\d+','',finishTime)

sTime = re.sub(r'\.\d+','',startTime)

qt_o = datetime.strptime(qTime, format)

ft_o = datetime.strptime(fTime, format)

st_o = datetime.strptime(sTime, format)

duration_o = ft_o - st_o

duration = int(duration_o.total_seconds())

print('duration',duration)

queued_time_o = st_o - qt_o

queued_time = int(queued_time_o.total_seconds())

queue_time_max = config_d['queue_time_max']

# and from the config file we have...

pipes_d = config_d['pipelines']

this_pipe = pipes_d['default']

if name in pipes_d: this_pipe = pipes_d[name]

msg = ''

if 'check_flag' in this_pipe:

if not this_pipe['check_flag']:

print('Checking for this pipeline has been disabled: ',name)

return msg # skip this build if in test mode or whatever

print('duration,min_proc_time,max_proc_time',duration,this_pipe['min_proc_time'],this_pipe['max_proc_time'])

print('queued_time,queue_time_max',queued_time,queue_time_max)

if duration > this_pipe['max_proc_time'] or duration < this_pipe['min_proc_time']:

msg = f"ADO Pipeline **{name}** run is outside of expected time range. Build number: **{build_number}**. \n Duration, max_proc_time, min_proc_time: **{duration},{this_pipe['max_proc_time']},{this_pipe['min_proc_time']}**"

if not status == 'completed' or not result == 'succeeded':

msg = f"ADO Pipeline **{name}** run has unexpected status or result. Build number: **{build_number}**. \n - Status: **{status}** \n - Result: **{result}**"

if queued_time > queue_time_max: # Check if this job was queued for too long

msg = f"ADO Pipeline **{name}** build number **{build_number}** was queued too long. Queued time was **{queued_time}** seconds"

if msg:

# get the logs meta info to see which log is the largest

url = f"{url_base}/{buildId}/logs"

req = urllib.request.Request(url)

req.add_header('Authorization', 'Basic ' + os.environ['ADO_AUTH'])

# Get buildIds for pipeline runs from last 1 hour

with urllib.request.urlopen(req) as response:

html = response.read()

txt_d = json.loads(html)

value_l = txt_d['value']

#{"count":11,"value":[{"lineCount":31,"createdOn":"2023-02-13T19:03:17.577Z","lastChangedOn":"2023-02-13T19:03:17.697Z","id":1...

l_ct_max = 0

log_id_err = 0

# determine log with either an error or the most lines - it differs for different pipeline jobs

for logs_d in value_l[4:]: # only consider the later logs

url = f"{url_base}/{buildId}/logs/{logs_d['id']}"

req = urllib.request.Request(url)

req.add_header('Authorization', 'Basic ' + os.environ['ADO_AUTH'])

with urllib.request.urlopen(req) as response:

html = response.read().decode('utf-8')

if re.search('error',html):

log_id_err = logs_d['id']

print('We matched the word error in log id',log_id_err)

l_ct = logs_d['lineCount']

if l_ct > l_ct_max:

l_ct_max = l_ct

log_id_all = logs_d['id']

if log_id_err > 0 and not log_id_all == log_id_err: # error over long log file when in conflict

log_id_all = log_id_err

url_all_logs = f"{url_base}/{buildId}/logs/{log_id_all}"

msg = f"{msg} \n**[Go to Log]({url_all_logs})** "

print(msg)

return msg

def sendMessageToTeams(msg: str):

"""

Send a message to a Teams Channel using webhook

"""

# my Pipeline_check webhook

webHookUrl = "https://drjohns.webhook.office.com/webhookb2/66f741-9b1e-401c-a8d3-9448d352db@ec386b-c8f-4c0-a01-740cb5ba55/IncomingWebhook/2c8e881d05caba4f484c92617/7909f-d2f-b1d-3c-4d82a54"

try:

# escaping underscores to avoid alerts in italics.

msg = msg.replace('_', '\_')

teams_msg = pymsteams.connectorcard(webHookUrl)

teams_msg.text(f'{msg}')

teams_msg.send()

except Exception as e:

print(f'failed to send alert: {str(e)}')

aux_modules.py contains most of the logic with checking each pipeline against the criteria and constructing an alert in Markdown to send to MS Teams. I’m not saying it’s beautiful code. I’m still learning. But I am saying it works.

I’ve revised the code to find the log file which is most likely to contain the “interesting” stuff. That’s usually the longest one excluding the first five or so. There are often about 10 logs available for even a minimal pipeline run. So this extra effort helps.

Then I further revised the code to fetch the logs and look for the word “error.” That may show up in the longest log or it may not. It not, that log takes precedence as the most interesting log.

check_all_pipelines.yml

# Python package

# Create and test a Python package on multiple Python versions.

# Add steps that analyze code, save the dist with the build record, publish to a PyPI-compatible index, and more:

# https://docs.microsoft.com/azure/devops/pipelines/languages/python

##trigger:

##- main

trigger: none

pool:

name: dsc-adosonar-drjohns4ServicesCoreSystems-agent

# name: visibility_agents

#strategy:

# matrix:

# Python36:

# python.version: '3.6'

steps:

#- task: UsePythonVersion@0

# inputs:

# versionSpec: '$(python.version)'

# displayName: 'Use Python $(python.version)'

- script: pip3 install -vvv --timeout 60 -r Pipeline_check/requirements.txt

displayName: 'Install requirements'

- script: python3 check_all_pipelines.py conf_check_all.ini

displayName: 'Run script'

workingDirectory: $(System.DefaultWorkingDirectory)/Pipeline_check

env:

ADO_AUTH: $(ado_auth)

PYTHONPATH: $(System.DefaultWorkingDirectory)/Pipeline_check:$(System.DefaultWorkingDirectory)

schedules:

- cron: "19 * * * *"

displayName: Run the script at 19 minutes after the hour

branches:

include:

- main

always: true

This yaml file we sort of drag around from pipeline to pipeline so some of it may not appear too optimized for this particular pipeline. But it does the job without fuss.

Success compels further development

This pipeline watcher has been so successful – and frankly I’m surprised this post isn’t more popular than it is because it gives away for free a great idea – that I decided what we really need is a second pipeline checker to do nothing other than to check the first pipeline_checker! Fortunately I had written it in such a general-purpose way that this proved to be easy. I created a second yaml file and a second config file. The second config file made the defaults to NOT check other pipeline, and had an explicit entry for pipeline_check to enable checking. Then I run it on other ADO agents available to me. And all is good. This was needed because the pipline_check job itself has stopped running twice in the last couple months due to no fault of my own.

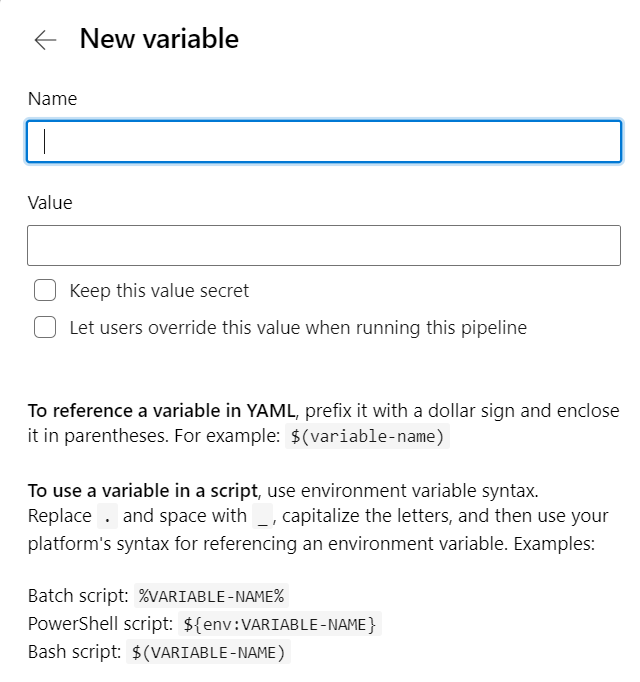

The hardest part was recalling my ADO_AUTH value, which is a secret variable. For the record It is the mime-encoded string formed from

any:{PAT}

where {PAT} is my Personal Access Token (which I had recorded). On any linux server you can mime encode by running base64. Encode is the default action. So, echo -n :{PAT}|base64 should produce the needed string. The -n argument is needed or else you may throw in an extra newline character which will definitely produce the wrong conversion!

Conclusion

My ADO pipeline checker is conveniently showing us all our pipeline runs which have failed for various reasons – takes too long, completed with errors, too few jobs have been run ni the last hour, … It sends its output to a MS Teams channel we have subscribed to, by way of a webhook we set up. So far it’s working great!

I guess(?) the right way to do such monitoring is to use the Azure Pipelines app. I did not get it to work on my first try. It’s described pretty well here.

References and related

Here’s my post on fetching the script log resulting from the pipeline run.

Microsoft’s document on personal access tokens is pretty helpful: https://learn.microsoft.com/en-us/azure/devops/organizations/accounts/use-personal-access-tokens-to-authenticate?view=azure-devops&tabs=Linux

The Azure Pipelines app is probably the professional way to accomplish the same things as my code.