2023 Intro – Windows 11 Home Edition

I just got my HP Aero with Windows 11. One of the very first things I did was to get WSL going; that’s how important it is to me. I did not do a simple wsl --install, at least not initially. Maybe it would have worked, maybe not. Instead I launched a PowerShell window as administrator and ran the two dism commands as shown below. Then I tried this command, which did nothing because it was not recognized:

wsl --set-default-version 2

Then I just went for it and tried to install Debian:

wsl --install --distribution Debian

It seemed to go through, but I remember that it always appears to (a fake-out). But all of a sudden I was being asked to set up an account. A reboot, and then there was a Debian window. And the wsl command works. So no kernel patching is needed any longer (I believe).

The section below is my original post based on my experience installing wsl on Windows 10 home edition.

Intro

I installed WSL2 on my work laptop a couple of weeks ago. It didn’t go terribly smoothly but now that I have it, I love it. I had been using a Cygwin environment, but I fear that is looking a little long in the tooth. WSL2 is fast to start up. But the main contrast is that while Cygwin is a compatibility layer that runs POSIX programs on top of Windows, WSL2 runs a real Linux kernel in a lightweight VM on Microsoft’s hypervisor, so you get a full-fledged linux VM, right on your PC. Of course this was always possible with products like VirtualBox or whatnot, but Microsoft has sort of built in this capability with newer versions of Windows 10, so there’s no messing with external software any longer.

But at work I have Windows 10 Professional, of course. What about at home where I have Windows 10 Home Edition like most of us? My understanding is that you could not run a hypervisor with Windows 10 Home Edition. And I was probably right, until recently. But now you can. I know because I just managed it tonight.

None of the tutorials out there were exactly right, but they all contained pieces of the truth. So my contribution is to add weight to the correct steps you’ll need to take. Unfortunately I only got to do it once so my notes aren’t the best. Still, I may be able to spare you some pitfalls.

Why you should want WSL2

If you love linux command-line, then I would say this is a must-have.

What doesn’t work

You’ll see suggestions to fire up powershell and simply run

wsl --install

Chances are about 95% that that won’t work if you are reading this article. Would that it were so simple.

Instead, do this

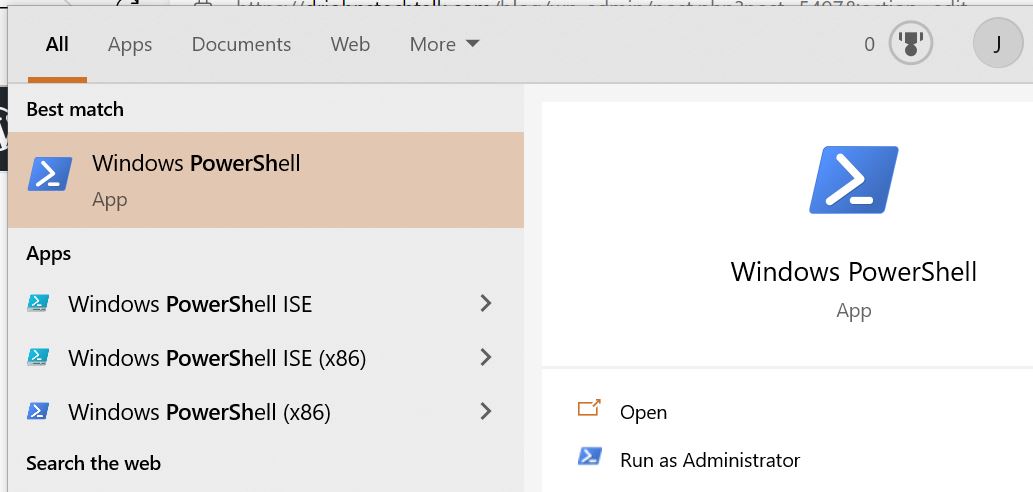

Open a powershell window as administrator. To do that type powershell in the start menu, and look around at all the options. Pick out the one that mentions Run as administrator.

Then enter this command into the PS window.

dism.exe /online /enable-feature /featurename:Microsoft-Windows-Subsystem-Linux /all /norestart

Then this.

dism.exe /online /enable-feature /featurename:VirtualMachinePlatform /all /norestart

Then this.

wsl --set-default-version 2

You need to update your kernel. Download this WSL2 kernel update file and install it: https://wslstorestorage.blob.core.windows.net/wslblob/wsl_update_x64.msi

A reboot at this point is probably a good idea.

Now you need to get yourself a linux distro to install.

There are certain wsl commands you can issue which will helpfully give you the URL to the Debian distro: you put the URL into the browser and it redirects you to the MS Store. But I forget what that is. Perhaps wsl -d Debian. (On recent WSL builds, wsl --list --online shows the distributions you can install.) But I suppose you can simply go to the MS Store directly and search for Debian and install it.

Nameserver issues when using vpn

Actually when I switched from wsl v 1 to wsl v 2, name resolution really didn’t work at all. The proximate cause is that the /etc/resolv.conf file contained the IP of the host system. But the host system doesn’t have a dns server… So after considering other options, I think the best is to embrace this guy’s script. It is supposed to dynamically figure out the best nameservers, which is pretty cool: https://github.com/jacob-pro/wsl2-dns-agent

He writes:

(Optionally) save it to your startup folder (%APPDATA%\Microsoft\Windows\Start Menu\Programs\Startup), so it is automatically launched when you log in.

So I guess that’s the current way to run a custom program upon startup. That could be useful.

Well, that approach hasn’t been working so well for me. For now I am updating /etc/resolv.conf by hand. First I break the symlink then I run chattr -i resolv.conf to bypass the warning that this is a read-only file and finally I edit it by hand. I know. Crude. But it works.
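The by-hand edit can be scripted. What follows is only a minimal sketch, not a tested fix for every VPN setup: write_resolv_conf is a hypothetical helper name, and the nameserver IPs in the example are placeholders you would replace with the ones your VPN hands out. It removes whatever is at the target path first (on WSL 2 that is often a symlink) and then writes a regular file. As I understand it, WSL can also be told to stop regenerating the file by putting generateResolvConf = false under a [network] section in /etc/wsl.conf.

```shell
# Sketch only: write a static resolver file from a list of nameserver IPs.
# write_resolv_conf is a hypothetical helper, not part of WSL or Debian.
# Usage: write_resolv_conf TARGET_FILE NAMESERVER_IP...
write_resolv_conf() {
    target="$1"
    shift
    # Remove the existing entry first so we create a regular file
    # instead of writing through a symlink.
    rm -f "$target"
    # One "nameserver" line per IP address given on the command line.
    for ns in "$@"; do
        printf 'nameserver %s\n' "$ns"
    done > "$target"
}

# Example, run as root inside Debian (placeholder IPs, assumption):
#   write_resolv_conf /etc/resolv.conf 192.0.2.53 192.0.2.54
```

Trying it against a scratch file first (for example /tmp/resolv.test) is a cheap way to check the output before touching /etc/resolv.conf.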

Enhance your experience with Windows Terminal

One good suggestion out of Windows Central is to use Windows Terminal. At least it looks good. I haven’t had time to try it myself. I normally just fire up a CMD window and type wsl. My Debian starts immediately and I have a satisfactory command-line environment. But working with multiple windows will be nice so I have to check it out.

Just look for Windows Terminal in the MS Store.

Windows Central suggestions

A web site called Windows Central has a pretty good stepwise guide. But their advertising is so obnoxious, I’m afraid to accidentally touch any part of the page for fear of getting sent to one of their many advertisers. Even still it probably happened about five times. So I won’t make the link to them too prominent. And, anyway, their guide is a little oversimplified.

My equipment

I have a four-year-old HP Pavilion laptop running Windows 10 21H2 if I remember correctly. It has solid state drives so it’s not too slow, and it boots pretty quickly.

BIOS – basically impossible to get into these days

I’m sure people who do this for a living will disagree, but for ordinary people it’s basically impossible to disrupt the boot process to modify the BIOS settings. And you may need to do that. In fact that was the hardest thing of all for me. Pressing F10 or delete or Escape or F2 – and does that mean hold the FN key down first?? No one explains that, and I don’t have patience to watch a YouTube video. But after trying a bunch of combinations and booting a bunch of times, and never getting into the BIOS settings, I was really glad to learn Windows 10 offers an alternate way! And it works…

Access BIOS settings from Windows 10

Very briefly, the steps are:

Windows Settings > Update & Security > Recovery > Advanced startup > Restart now > (reboot) > Troubleshoot > Advanced options > UEFI Firmware Settings > Restart > (BIOS menu) enable virtualization > Save.

To see the details, go to this HP article: How to Enter BIOS Setup on Windows PCs | HP® Tech Takes

Why you may need to alter the BIOS settings

Well, on my laptop my installation of Debian kept failing with this error. Error: 0x80370102 The virtual machine could not be started because a required feature is not installed. I read on a Microsoft site that could be because the ability to run virtual servers was not enabled in the BIOS. And, yes, that turned out to be absolutely true. It was disabled. So I enabled it and bam, the Debian install started asking me for a username and password, and I was running a Debian VM!

To be fleshed out as my time permits…

But, I love my Debian linux. It’s just like Raspberry Pi OS Lite. I just install packages as I need them: python, pip, curl, bind9-dnsutils, ssh, etc.

Operating inbound TCP services

After the initial thrill wears off, you realize you may need practical things that you have on your Raspberry Pi such as an ssh server or a web server. I believe this will be possible. Still working on it. After installing ssh you can fire it up:

$ sudo service ssh start

This post describes some of those service commands which you have under a WSL linux install: [3 Fixes] System Has Not Been Booted With Systemd as Init System (partitionwizard.com)

If you ignore that article you may see this error! System has not been booted with systemd as init system (PID 1). Can’t operate.
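That error appears when systemctl is used on a WSL instance whose PID 1 is not systemd. Here is a minimal sketch that picks the right style of command based on what is running as PID 1. service_start_cmd is a hypothetical helper name of my own; it only prints the command rather than running it. As I understand it, recent WSL releases can optionally boot with systemd (systemd=true under a [boot] section in /etc/wsl.conf), in which case the systemctl form applies.

```shell
# Sketch only: print the command that starts a service, given the name of
# the process running as PID 1. service_start_cmd is a hypothetical helper.
# Usage: service_start_cmd INIT_NAME SERVICE_NAME
service_start_cmd() {
    init_name="$1"
    service_name="$2"
    if [ "$init_name" = "systemd" ]; then
        # systemd is PID 1, so systemctl can talk to it.
        printf 'sudo systemctl start %s\n' "$service_name"
    else
        # Anything else: fall back to the SysV-style service wrapper.
        printf 'sudo service %s start\n' "$service_name"
    fi
}

# Example, inside Debian; "ps -p 1 -o comm=" prints the name of PID 1:
#   service_start_cmd "$(ps -p 1 -o comm=)" ssh
```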

Back to your ssh server. Now you can already connect to it from the Windows system itself, e.g., from a CMD window:

C:\Users\me> ssh user@localhost

user is the Debian user you set during initial setup. So, anyway, that works and that’s cool. But you’re still locked out from the outside.

This helpful Microsoft article discusses networking for WSL2. Apparently it is still evolving and so it’s a bit primitive right now: Accessing network applications with WSL | Microsoft Docs

From a CMD Window launched as administrator:

netsh interface portproxy add v4tov4 listenport=22 listenaddress=0.0.0.0 connectport=22 connectaddress=172.22.167.12

But this does not work in my case. Firewall thing, I’m sure. Yes! For me, where I also run McAfee, I needed to go to their firewall settings > Ports and system services. Then I had to add a service for TCP port 22, the ssh default port. Then it began to work and my RPi could ssh and sftp to my Debian VM! sftp kind of hung a bit. Have to see how bad that is.
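The connectaddress in the netsh command above is the address of the Debian VM, and in the default WSL 2 networking setup that address can change when WSL restarts, so the rule may need to be redone. A minimal sketch, assuming you run it inside Debian and then paste the printed line into an administrator CMD window on the Windows side. portproxy_cmd is a hypothetical helper name; it only builds the command text and does not change any Windows settings.

```shell
# Sketch only: build the netsh portproxy command for a WSL address and port.
# portproxy_cmd is a hypothetical helper; it prints text and changes nothing.
# Usage: portproxy_cmd WSL_IP PORT
portproxy_cmd() {
    wsl_ip="$1"
    port="$2"
    # Same shape as the netsh command in the post: listen on all Windows
    # interfaces and forward the same port to the WSL VM.
    printf 'netsh interface portproxy add v4tov4 listenport=%s listenaddress=0.0.0.0 connectport=%s connectaddress=%s\n' \
        "$port" "$port" "$wsl_ip"
}

# Example, inside Debian; "hostname -I" lists the VM's addresses and awk
# keeps the first one:
#   portproxy_cmd "$(hostname -I | awk '{print $1}')" 22
```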

How the filesystems are mapped

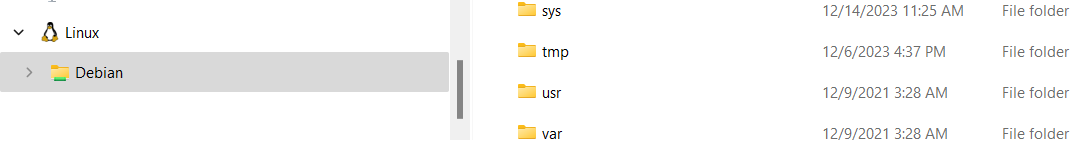

Where are your nice, tidy linux directories to be found on your ugly File Explorer? You should have a Linux > Debian (or whatever your installed distribution is) section added to the bottom of your File Explorer.

But really, where is that? For me, it is:

C:\Users\USERNAME\AppData\Local\Packages\TheDebianProject.DebianGNULinux_76v4gfsz19hv4\LocalState\rootfs\home\…

And turning things around, how do you navigate to the C drive from your linux command line? Just

cd /mnt/c

Most of the interesting files in my case are in /mnt/c/Users/USERNAME
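The mapping rule is simple enough to write down: the drive letter becomes a lower-case directory under /mnt and the backslashes become forward slashes. A minimal sketch of that rule follows. win_to_mnt_path is a hypothetical helper name of my own, and it only handles plain drive-letter paths like C:\Users\me. Inside WSL itself the built-in wslpath tool does this conversion for real, so treat the function as an illustration of the rule rather than a replacement.

```shell
# Sketch only: convert a drive-letter Windows path to its /mnt location.
# win_to_mnt_path is a hypothetical helper; wslpath is the real tool in WSL.
# Usage: win_to_mnt_path 'C:\Users\me'
win_to_mnt_path() {
    # First character is the drive letter; /mnt uses it in lower case.
    drive=$(printf '%s' "$1" | cut -c1 | tr 'A-Z' 'a-z')
    # Skip the "C:" prefix and flip backslashes to forward slashes.
    rest=$(printf '%s' "$1" | cut -c3- | tr '\\' '/')
    printf '/mnt/%s%s\n' "$drive" "$rest"
}

# Example:
#   cd "$(win_to_mnt_path 'C:\Users\USERNAME')"
```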

Debian linux loses time

Older versions of WSL may have their system clock drift severely compared to the underlying system’s hardware clock. sudo hwclock -s will probably restore time to the correct time. Also see https://stackoverflow.com/questions/65086856/wsl2-rest-api-error-due-to-wsl2-clock-out-of-sync-with-windows-clock

Windows 11 experience with installation

For my new Windows 11 laptop, I simply followed the instructions at How to Install WSL2 on Windows 11: A Step-by-Step Guide for Beginners – Solve Your Tech and I was up and running with Ubuntu v 24 noble in minutes. I did not need to pick out a linux distribution from the app store, it just gave me Ubuntu by default which is perfectly fine with me.

References and related

For Windows 11 I followed the simple steps in this web site: How to Install WSL2 on Windows 11: A Step-by-Step Guide for Beginners – Solve Your Tech

That obnoxious Windows Central article I mentioned above with a lot of the WSL2 installation information. It’s a veritable minefield of links to irrelevant stuff, so you’ve been warned: How to install Linux WSL2 on Windows 10 and Windows 11 | Windows Central

Seeing Error: 0x80370102? Try Troubleshooting Windows Subsystem for Linux | Microsoft Docs A whole host of other WSL2 errors are addressed in this article as well.

This article purports to be for servers, but I think it’s applicable to PCs as well. It gets pretty technical. System requirements for Hyper-V on Windows Server | Microsoft Docs

About starting system services such as the ssh daemon: [3 Fixes] System Has Not Been Booted With Systemd as Init System (partitionwizard.com)

A good overview of WSL2 networking: Accessing network applications with WSL | Microsoft Docs

How to Enter BIOS Setup on Windows PCs | HP® Tech Takes

With WSL 2 dns name resolution can often be mucked up. This guy has a nice fix: https://github.com/jacob-pro/wsl2-dns-agent

Info about a clock drift problem: https://stackoverflow.com/questions/65086856/wsl2-rest-api-error-due-to-wsl2-clock-out-of-sync-with-windows-clock