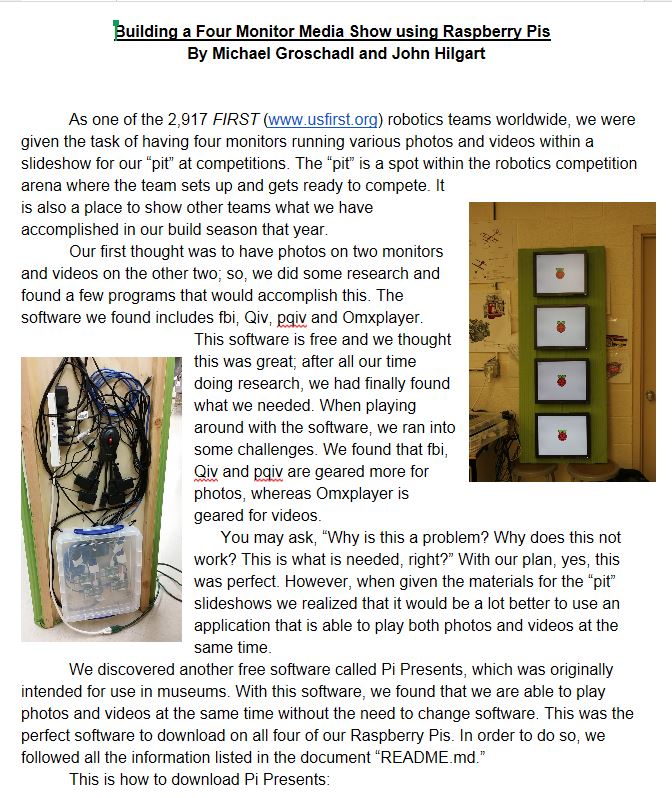

Intro

The idea is to have a Raspberry Pi drive a display kiosk, much like one of those electronic picture frames. Power the thing up, perhaps plug in a flash drive, leave off the mouse and keyboard but keep a display attached, and have it automatically start a slideshow without any more fuss.

Some discarded options

Obviously this is not breaking new ground; you can find many variants of this on the Internet. An early approach that caught my eye is flickrframe. Reading its source code, I learned that at the end of the day it relies on the fbi program (Linux framebuffer image viewer). I thought I could perhaps rip out the part that connects to Flickr, but that seemed like too much trouble. Really it just comes down to whether or not to use fbi.

Then there's Raspberry Pi slideshow, a quite good write-up. It uses pqiv, and I think that solution is workable.

But the one I'm focusing on uses qiv (quick image viewer). You would think pqiv relies on qiv, but it appears not to, so qiv is a separate install. qiv has lots of switches, so it seems to have been written with this kind of use in mind.

What it looks like so far

#!/bin/sh
# -f : full-screen; -R : disable deletion; -s : slideshow; -d : delay <secs>; -i : no status bar;
# -m : maxpect (scale to fit the screen, keeping the aspect ratio); [-r : randomize]
# this doesn't handle filenames with spaces:
##cd /media; qiv -f -R -s -d 5 -i -m `find /media -regex ".+\.jpe?g$"`
# this one does:
if [ "$1" = "l" ]; then
  # print out proposed filenames
  cd /media; find . -regex ".+\.jpe?g$"
else
  # pause briefly before starting the slideshow
  sleep 5
  cd /media; find . -regex ".+\.jpe?g$" -print0 | xargs -0 qiv -f -R -s -d 5 -i -m
fi
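For comparison, here is a minimal Python sketch of the same idea: collect every .jpg/.jpeg under a directory and hand the list to qiv as separate arguments. Because no shell word splitting is involved, spaces in filenames are not a problem. The function names find_jpegs and qiv_command are hypothetical, it assumes qiv is installed and on the PATH, and the qiv switches are simply copied from the script above.

```python
import os
import re

# Same pattern as the find -regex above: names ending in .jpg or .jpeg (case-sensitive)
JPEG_RE = re.compile(r"\.jpe?g$")


def find_jpegs(root):
    """Return a sorted list of JPEG paths found anywhere under root."""
    matches = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if JPEG_RE.search(name):
                matches.append(os.path.join(dirpath, name))
    return sorted(matches)


def qiv_command(files, delay=5):
    """Build the qiv argv: full-screen, read-only, slideshow, no status bar, maxpect."""
    return ["qiv", "-f", "-R", "-s", "-d", str(delay), "-i", "-m"] + list(files)
```

Usage would be something like subprocess.call(qiv_command(find_jpegs("/media"))); each path travels as its own argument, which is what the -print0/xargs -0 pair achieves in the shell version.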

The idea being: why not make a slideshow out of all the pictures found on a flash drive that's been inserted into the Pi? That's how a standard picture frame works, after all, and it's a very convenient way to use one. That's the aim of the script above.

Requirements update

OK. Well, this happens a lot in IT. We thought we were solving one problem, but when we finally spoke with the visual arts team they had something entirely different in mind: they want to mix in movies as well. fbi, pqiv and qiv don't handle movies. I have mplayer and vlc from playing around with the Raspberry Pi camera, but mplayer runs like a dog on the movie files I tried, with perhaps one frame update every two seconds. After more searching I came across omxplayer, which actually works pretty well. It, on the other hand, doesn't seem up to the task of handling a mixed stream of stills and movies, but it did handle the two movie types we had: .mov and .mp4 files. omxplayer is written specifically for the Pi, so it uses the Pi's GPU for hardware-accelerated playback, whereas mplayer seems to rely on the CPU alone, which just can't keep up with a high-definition movie. As a result, omxplayer only plays on the true graphical console. It doesn't even bother with your DISPLAY environment variable; it just sends everything to the head display.

Overheard recently

And when I used my TV as the display, omxplayer put out the sound too, perfectly synchronized and of high quality.

I considered kludging qiv and omxplayer together, letting one lapse and starting up the other to transition from a still to a movie, but I didn't know how to make the transition smooth. So I searched around some more and found pipresents. I believe it is a Python framework around omxplayer. It's pretty sophisticated, and yet free. It's actually aimed at museums and can react to button presses like those at museum displays. So far we have gotten the example media show to loop: it demonstrates a high-quality short movie and a still, plus some captions at the beginning.

Pipresents isn’t perfect however

I quickly found some problems with pipresents, so I went the official route and posted them on the GitHub site, not really knowing what to expect. The first issue is that you are not allowed to import .mov files! That makes no sense, since omxplayer plays them. So I posted this bug, and that very same day the author emailed me back explaining that you simply edit pp_editor.py line 32 and add .mov as an additional video file type. Sure enough, that did it. Then I found that it wasn't downsampling my images. These days everyone has a camera or phone that takes multi-megapixel images far exceeding a cheap display's 1280×1024 resolution, so you only see a small portion of your JPEG. I had assumed pipresents would downsample these large pictures, because other packages like qiv do it so readily. Again, the same day, the author got back to me: no, this isn't supported in pipresents. But there is a solution: use pipresents-next! It's officially in beta but just about ready for production release. I don't think I'll go that route, but it's always nice to know your package continues to be developed. Meanwhile I've written my own downsampler, which I provide later on.

Screen turns off

PiPresents has a command-line switch, -b, to prevent screen blanking. But I think it's better not to use that switch and instead disable screen blanking system-wide:

$ sudo nano /etc/kbd/config

– change BLANK_TIME=30 to BLANK_TIME=0

– and change POWERDOWN_TIME=30 to POWERDOWN_TIME=0

$ sudo nano /etc/lightdm/lightdm.conf

– below the [SeatDefaults] line create this line (-s 0 turns off the X screensaver timeout and -dpms turns off display power management):

xserver-command=X -s 0 -dpms
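If you would rather script the /etc/kbd/config change than make it by hand in nano, a minimal Python sketch might look like the following. It assumes the file uses plain KEY=value lines as described above; set_zero_timeouts is a hypothetical name, and you would still need root to write the result back to /etc/kbd/config.

```python
import re


def set_zero_timeouts(config_text, keys=("BLANK_TIME", "POWERDOWN_TIME")):
    """Return config_text with each KEY=value line for the given keys changed to KEY=0.

    Commented-out lines and lines for other keys are left untouched.
    """
    for key in keys:
        # ^ and $ with MULTILINE anchor the match to one whole, uncommented line
        pattern = re.compile(r"^(%s)=.*$" % re.escape(key), re.MULTILINE)
        config_text = pattern.sub(r"\1=0", config_text)
    return config_text
```

Anchoring on the start of the line means a commented-out #BLANK_TIME=30 is not silently "fixed", which mirrors what you would do editing by hand.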

How to get started with PiPresents

$ wget https://github.com/KenT2/pipresents/tarball/master -O - | tar xz

There should now be a directory ‘KenT2-pipresents-xxxx’ in your home directory. Rename the directory to pipresents:

$ mv KenT2* pipresents

To save time make sure you have two terminal windows open on your Pi and familiarize yourself with how to cut and paste text between them. Then from the one window you can:

$ cd pipresents; more README.md

while you execute the commands you've cut and pasted from that window into the other, e.g.,

$ sudo apt-get install python-imaging

etc.

What happens if you forget to install the unclutter package

Not much. You'll just see a mouse pointer in the center of the screen that won't go away, which is not desirable for black box operation.

Python image downsizing program

This is also known as downsampling. Amazingly, you don't really find a simple example program like this when you do an Internet search, at least not among the first few hits. I needed a program to reduce large images to the size of the display while preserving the aspect ratio. My display, a run-of-the-mill Acer v173, is 1280 x 1024 pixels. Pretty standard stuff, right? Yet the Pi sees it as 1232 x 992 pixels! Who would have thought that possible? And there's no option to change that, at least not from the GUI. So just put in the appropriate values for your display. This program handles one single image file. Also note that a small picture, meaning one smaller than the display, will be blown up to full screen, so a thumbnail image will look pixelated. The math doesn't distinguish small from large images, but I feel that is fine for the most part. So without further chatting, here it is. I called it resize3.py:

from PIL import Image  # Pillow; old PIL also allowed a plain "import Image"
import os
import sys

# DrJ 2/2015
# somewhat inspired by http://www.riisen.dk/dop/pil.html
# image file should be provided as argument
# Designed for Acer v173 display which the Pi sees as a strange 1232 x 992 pixel display
# though it really is a more run-of-the-mill 1280 x 1024

imageFile = sys.argv[1]
im1 = Image.open(imageFile)

def imgResize(im):
    # Our display as seen by the Pi is a strange 1232 x 992 pixels
    width = im.size[0]
    height = im.size[1]
    # If the aspect ratio is wider than the display screen's aspect ratio,
    # constrain the width to the display's full width
    if width/float(height) > 1232.0/992.0:
        widthn = 1232
        heightn = int(height*1232.0/width)
    else:
        # otherwise constrain the height to the display's full height
        heightn = 992
        widthn = int(width*992.0/height)
    # LANCZOS (called Image.ANTIALIAS in older PIL/Pillow) is the best down-sizing filter
    im5 = im.resize((widthn, heightn), Image.LANCZOS)
    # save under resize/ with the same file name (the directory must already exist)
    im5.save(os.path.join("resize", os.path.basename(imageFile)))

imgResize(im1)
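The scaling rule inside imgResize can be checked without PIL or a display by pulling it into a pure function. This is a minimal sketch: fit_to_display is a hypothetical name, and the 1232 x 992 defaults are just the values the Pi reported for the Acer v173. Like resize3.py, it scales small images up as well as large ones down; Pillow's Image.thumbnail, by contrast, only ever shrinks an image.

```python
def fit_to_display(width, height, disp_w=1232, disp_h=992):
    """Return (new_width, new_height) filling the display without changing aspect ratio.

    Same rule as imgResize: if the image is proportionally wider than the display,
    pin the width to disp_w; otherwise pin the height to disp_h.
    """
    if width / float(height) > disp_w / float(disp_h):
        return disp_w, int(height * disp_w / float(width))
    return int(width * disp_h / float(height)), disp_h
```

For example, fit_to_display(4000, 3000) gives (1232, 924), and a portrait 3000 x 4000 shot gives (744, 992).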

As I am not proficient in Python, I designed the above program to do as little file handling as possible. That I do in a shell script, which was much easier for me to write. Together they can easily downsample all the image files in a particular directory. I call this script reduce.sh:

#!/bin/sh
echo "Look for the downsampled images in a sub-directory called resize"
echo "JPEGs, GIFs and PNGs are looked at in the current directory"
mkdir -p resize
for file in *jpg *jpeg *JPG *png *PNG *gif *GIF; do
  # a pattern that matches nothing is passed through unchanged; skip it
  [ -e "$file" ] || continue
  echo "downsampling $file"
  # downsample the image file
  python ~/resize3.py "$file"
done
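For anyone who would rather keep the file handling in Python as well, here is a minimal sketch of the selection step in reduce.sh: it lists the JPEG, PNG and GIF files in one directory, comparing extensions case-insensitively, so names like .Jpg or .JPEG are caught too, which the fixed shell globs miss. images_to_downsample is a hypothetical name; each returned name could then be passed to resize3.py just as the loop above does.

```python
import os

# Extensions reduce.sh looks for, compared in lower case
IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png", ".gif")


def images_to_downsample(directory="."):
    """Return the sorted names of regular files in directory with an image extension."""
    names = []
    for name in os.listdir(directory):
        path = os.path.join(directory, name)
        # only plain files, which also skips the resize/ output sub-directory
        if os.path.isfile(path) and name.lower().endswith(IMAGE_EXTENSIONS):
            names.append(name)
    return sorted(names)
```

A driver loop might then call subprocess.call(["python", os.path.expanduser("~/resize3.py"), name]) for each name.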

Stopping the slideshow

Sometimes you just need to stop the thing and that’s not so easy when you’ve got it in blackbox mode and running at startup.

If you're lucky enough to have a keyboard attached to the Pi, we found that

<Alt> F4

from the keyboard stops it.

No keyboard? We assigned our Pi a static IP address and leave an Ethernet cable attached to it. Then we put a PC on the same subnet, ssh to the Pi (e.g., using PuTTY or Tera Term), and run this simple kill script, which I call kill.sh:

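#!/bin/sh
# stop pipresents (-f matches against the full command line, since it runs under python)
pkill -f pipresents.py
# stop any video omxplayer is still playing
pkill omxplayer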

Digital photo frame project morphs into museum-style kiosk display

At times I was tempted to throw out this pipresents software but we persisted. It has a different emphasis from a digital photo frame where you plug in a USB stick and don’t care about the order the pictures are presented to you. pipresents is oriented towards museums and hence is all about curated displays, where you’ve pored over the presentation order and selected your mix of videos and images. And in the end that better matched our requirements.

The manual is wanting for clarity

It’s nice that a PDF manual is included, but it’s a pain to read it to extract the small bits of information you actually need. Here’s what you mostly need to know. An unattended slideshow mixture of images and videos is what he calls a mediashow. Make your own profile to hold your mediashow:

$ cd pipresents; python pp_editor.py

This brings up a graphical editor. Then follow these menus:

Profile|New from template|Mediashow

Choose a short easy-to-type name such as drjmedia.

Click on media.json and then you can start adding images and movies. These are known as “tracks.”

Remove the example track.

Add your own images and movies.

Do a Profile|Validate

There is no Save! Just kill it.

And to run it full screen from your home directory:

$ python pipresents/pipresents.py -ftop -p drjmedia

Autostarting your mediashow

The instructions provided in manual.pdf worked on my older Pi, but not on the B+ model Pis. So I repeat them here, modified to be more correct (the author doesn't seem comfortable with Linux). Manual.pdf has:

$ mkdir -p ~/.config/lxsession/LXDE

$ cd !$; echo "python pipresents/pipresents.py -ftop -pdrjmedia" > autostart

$ chmod +x autostart

As I say, this worked on my model B Pi, but not on my B+. (This discussion of autostarting programs is specific to operating systems that use the LXDE desktop environment, such as Raspbian.) On the B+, this fairly different approach got the media show starting automatically upon boot:

$ cd /etc/xdg/autostart

Create a file pipresents.desktop with these lines:

[Desktop Entry]
Type=Application
Name=pipresents
Exec=python pipresents/pipresents.py -ftop -pdrjmedia
Terminal=true

But I recommend this approach, which also works:

$ mkdir -p ~/.config/autostart

Place a pipresents.desktop file in this directory with the contents shown above.
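Because every autostart entry in this post uses the same four keys, a small generator saves retyping them. This is a minimal Python sketch: desktop_entry and write_autostart are hypothetical helper names, and it assumes the per-user ~/.config/autostart directory recommended above.

```python
import os


def desktop_entry(name, exec_cmd, terminal=True):
    """Return the text of a minimal [Desktop Entry] file like the ones in this post."""
    return (
        "[Desktop Entry]\n"
        "Type=Application\n"
        "Name=%s\n"
        "Exec=%s\n"
        "Terminal=%s\n" % (name, exec_cmd, "true" if terminal else "false")
    )


def write_autostart(filename, text, autostart_dir="~/.config/autostart"):
    """Write text to autostart_dir/filename, creating the directory if needed."""
    directory = os.path.expanduser(autostart_dir)
    if not os.path.isdir(directory):
        os.makedirs(directory)
    path = os.path.join(directory, filename)
    with open(path, "w") as f:
        f.write(text)
    return path
```

For instance, write_autostart("pipresents.desktop", desktop_entry("pipresents", "python pipresents/pipresents.py -ftop -pdrjmedia")) reproduces the file shown above.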

More sophisticated approach for better black box operations

We find it convenient to run pp_editor in a virtual display created by VNC. That way we still don't need to attach a keyboard or mouse to the Pi. The problem is that pipresents will also launch in the VNC session and really slow things down. Here is a solution I worked out to run only one instance of pipresents, even if other X sessions are launched on other displays. Note that this is a general solution that applies to any autostarted program.

The main idea is to test in a simple shell script if our display is the console (:0.0) or not.

I should interject that I hadn't actually tested this when I wrote it, but I thought it would work! Update: yes, it did work!

Put startpipresents.sh in /home/pi with these contents, and make it executable (chmod +x /home/pi/startpipresents.sh) so the desktop file can run it:

#!/bin/bash
# DISPLAY environment variable is :0.0 for the console display
if echo "$DISPLAY" | grep -q ':0'; then
  # matched. start pipresents in this xsession, but not any other one
  python pipresents/pipresents.py -ftop -pdrjmedia
fi
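One caveat: grep does a substring match, so the same test with grep :1 (used for the VNC display further down) would also accept :10 or :11, for example the localhost:10.0 that ssh X forwarding typically sets. A stricter check parses out the display number. Below is a minimal Python sketch of that parsing; display_number and is_console are hypothetical names, and it assumes the usual host:display.screen form of DISPLAY, treating an empty host as the local machine.

```python
import os
import re

# host:display.screen, where host and .screen are optional, e.g. ":0", ":0.0", "localhost:10.0"
DISPLAY_RE = re.compile(r"^(?P<host>[^:]*):(?P<display>\d+)(?:\.(?P<screen>\d+))?$")


def display_number(display):
    """Return the X display number from a DISPLAY string, or None if it can't be parsed."""
    m = DISPLAY_RE.match(display or "")
    return int(m.group("display")) if m else None


def is_console(display=None):
    """True only for local display :0 (any screen); False for :1, :10 or remote hosts."""
    if display is None:
        display = os.environ.get("DISPLAY", "")
    m = DISPLAY_RE.match(display)
    return bool(m) and m.group("host") == "" and int(m.group("display")) == 0
```

A startup wrapper could then launch pipresents only when is_console() is true, and the editor only when display_number(os.environ.get("DISPLAY")) == 1.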

Then pipresents.desktop becomes this:

[Desktop Entry]
Type=Application
Name=pipresents
Exec=/home/pi/startpipresents.sh
Terminal=true

To install the vnc server:

$ sudo apt-get install tightvncserver

And to auto-launch it make a vnc.desktop file in ~/.config/autostart like this:

[Desktop Entry]
Type=Application
Name=vncserver
Exec=/home/pi/startvncserver.sh
Terminal=false

and put this in the file /home/pi/startvncserver.sh:

#!/bin/bash
# DISPLAY environment variable is :0.0 for the console display
if echo "$DISPLAY" | grep -q ':0'; then
  # matched. start vncserver in this xsession, but not any other one
  vncserver
fi

You need to launch vncserver by hand once to establish the password.

And we may as well pre-launch the pp_editor because we’re likely to need that. So make a file in the home directory called startppeditor.sh with these contents:

#!/bin/bash
# DISPLAY environment variable is :1.0 for the vnc display
if echo "$DISPLAY" | grep -q ':1'; then
  # matched. start ppeditor in this xsession, but not any other one
  python pipresents/pp_editor.py
fi

and in ~/.config/autostart a file called ppeditor.desktop with these contents:

[Desktop Entry]
Type=Application
Name=ppeditor
Exec=/home/pi/startppeditor.sh
Terminal=true

Similarly we can pre-launch an lxterminal because we’ll probably need one of those. Here’s an example startlxterminal.sh:

#!/bin/bash
# DISPLAY environment variable is :1.0 for the vnc display
if echo "$DISPLAY" | grep -q ':1'; then
  # matched. start a large lxterminal in this xsession, but not any other one
  lxterminal --geometry=100x40
fi

and the autostart file:

[Desktop Entry]
Type=Application
Name=lxterminal
Exec=/home/pi/startlxterminal.sh
Terminal=true

A note about PowerPoint slides

With a MacBook we were able to read in a PowerPoint slideshow and export it to JPEG images, one image per slide. That was pretty convenient. We have done the same directly from Microsoft PowerPoint; it's a save option.

A note about MPEG-4 videos

Some videos overwhelm the older Pis that we use (maybe a Pi 3 would cope?). A creative student handed us his two-minute movie in MPEG-4 format, and the Pi could not display it at all. We learned that reducing the resolution lets the Pi play it. A student was doing this on his MacBook, but when he left I had to figure out a way myself.

The original MPEG-4 video had a resolution of 1920 x 1080. I wanted a horizontal resolution of no more than 1232, maybe even smaller, while preserving the aspect ratio (widescreen format).

I used good ole' Microsoft Movie Maker. I don't think it's available any longer except from dodgy sites, but in the days of Windows 7 you could get it for free as part of Windows Live Essentials. Then, if you upgraded that Windows 7 PC to Windows 10, you got to keep Movie Maker. That's the only way I know of. Not that it's a good program; it's not. Very basic. But it does permit resizing a video stream to a custom resolution, so I have to give it that. I tried various resolutions and played them back, and finally settled on the smallest I tried: 800×450. In fact, I couldn't really tell the difference in video quality between the samples, and of course 800×450 made for the smallest file. So we took that one. Fortunately, pipresents blew it up to occupy the full screen width (1232 pixels) while preserving the aspect ratio. So it looks great and no further action was needed.
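The resolution arithmetic is the same aspect-ratio scaling used in resize3.py: keep width/height constant and choose a new width. A minimal Python sketch (scaled_height is a hypothetical name) shows why 800 x 450 keeps the 16:9 shape of the 1920 x 1080 original. Rounding down to an even height is an assumption on my part, since encoders working in 4:2:0 chroma subsampling commonly require even dimensions.

```python
def scaled_height(src_w, src_h, new_w, even=True):
    """Height that keeps the src_w:src_h aspect ratio at width new_w.

    Integer arithmetic avoids float rounding surprises; with even=True the
    result is rounded down to the nearest even number.
    """
    h = src_h * new_w // src_w
    if even:
        h -= h % 2
    return h
```

scaled_height(1920, 1080, 800) returns 450; at the full 1232-pixel width the exact height would be 693, which this sketch rounds down to 692.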

The sound of silence

Do you want the video sound to come out of the stereo mini-jack because you're not using an HDMI monitor? PiPresents tries to send audio out through HDMI by default, so you won't hear anything if you have a VGA monitor. But you can change that. If you want to do this in raw omxplayer, the switch which sends the sound out through the mini-jack is:

In pipresents this option is available in the pp_editor. It’s a property of the profile. So you edit the profile, look for omx-audio, and change its value in the drop-down box from hdmi to local. That’s it!

A word about DHCP

We use a PC to connect to the four Pis. They are connected to a hub, and an extra Ethernet cable from the hub is ready to plug into a PC with an Ethernet port. The Pis all have private IP addresses: 10.31.42.1, 10.31.42.2, 10.31.42.3 and 10.31.42.4. For convenience, we set up a DHCP server on Pi 1 so that when the PC connects, it gets assigned an IP address on that subnet (DHCP is a service that dynamically assigns IP addresses). It turns out this is dead easy. You simply install dnsmasq (sudo apt-get install dnsmasq) and make sure it is enabled. That's it! More sophisticated setups require modifying /etc/dnsmasq.conf, but for our simple use case that wasn't even needed: it picked reasonable values and assigned the laptop an appropriate IP address that lets it communicate with any of the four Pis.
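To confirm that the address the laptop was handed can actually reach the Pis directly, Python's standard ipaddress module makes for a quick check. This sketch assumes a /24 netmask (10.31.42.0/24), which the setup above does not state, so adjust PI_NETWORK if yours differs; on_pi_subnet is a hypothetical helper.

```python
import ipaddress

# Assumption: the Pis' 10.31.42.x addresses sit in a /24 network
PI_NETWORK = ipaddress.ip_network("10.31.42.0/24")
PI_ADDRESSES = ["10.31.42.%d" % n for n in (1, 2, 3, 4)]


def on_pi_subnet(address, network=PI_NETWORK):
    """True if address lies inside network, i.e. the laptop can talk to the Pis directly."""
    return ipaddress.ip_address(address) in network
```

Feed it whatever address the laptop reports (ipconfig on Windows); an address outside the network usually means the DHCP lease did not come from Pi 1.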

References and related

I worked on this project with a student; see Building a Four Monitor Media Show using Raspberry Pis.

Pipresents has its own WordPress site.

LXDE has its own official site.

Read about a first look at the custom-built 7″ Raspberry Pi touch display in this blog post.

An alternative to pipresents is to leverage qiv. I put something together and demo it in this post, but with a twist: I pull all the photos from my own Google Drive, where I store 40,000+ pictures!