Intro

It’s been awhile since I’ve had the opportunity to relatean IT mystery. After awhile they are repates of what’s already happened in the past, or it’s too complex to relate, or I was only peripherally involved. But today I came across a good one. It falls into the never been seen before category.

The details

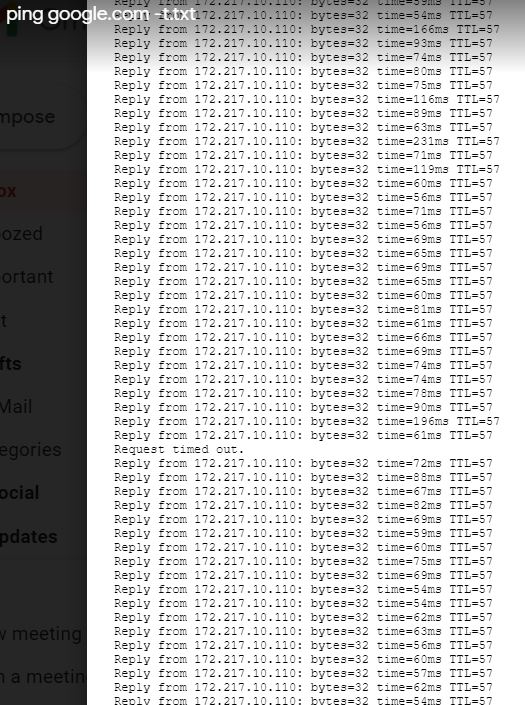

A web server behind my web application firewall became unreachable. In the browser they get a message This site can’t be reached. The app owners came to me looking for input. I checked the WAF and it was fine. The virtual server was looking healthy. So I took a packet trace, something to this effect:

$ tcpdump -nni 0.0 host 192.168.2.124

14:00:45.180349 IP 192.68.1.13.42045 > 192.68.2.124.443: Flags [S], seq 1106553901, win 23360, options [mss 1460,sackOK,TS val 3715803515 ecr 0], length 0 out slot1/tmm3 lis=/Common/was90extqa.drjohn.com.app/was90extqa.drjohn.com_vs port=0.53 trunk=

14:00:45.181081 IP 192.68.2.124 > 192.68.1.13: ICMP host 192.68.2.124 unreachable - admin prohibited filter, length 64 in slot1/tmm2 lis= port=0.47 trunk=

14:00:45.181239 IP 192.68.1.13.42045 > 192.68.2.124.443: Flags [R.], seq 1106553902, ack 0, win 0, length 0 out slot1/tmm3 lis=/Common/was90extqa.drjohn.com.app/was9

0extqa.drjohn.com port=0.53 trunk=

I’ve never seen that before, ICMP host 192.68.2.124 unreachable – admin prohibited filter. But I know ICMP can be used to relay out-of-band routing information on occasion, though I do not see it often. I suspect it is a BAD THING and forces the connection to be shut down. Question is, where was it coming from?

The communication is via a firewall so I check the firewall. I see a little more traffic so I narrow the filter down:

$ tcpdump -nni 0.0 host 192.168.2.124 host 443

And then I only see the initial SYN packet followed by the RST – from the same source IP! So since I didn’t see the bad ICMP packet on the firewall, but I do see it on the WAF, I preliminarily conclude the problem exists on the WAF.

Rookie mistake! Did you fall for it? So very, very often, in the heat of debugging, we invent some unit test which we’ve never done before, and we have to be satisified with the uncertainty in the testing method and hope to find a control test somehow, somewhere to validate our new unit test.

Although I very commonly do compound filters, in this case it makes no sense, as I realized a few minutes later. My port 443 filter would of course exclude logging the bad ICMP packets because ICMP does not use tcp port 443! So I took that out and re-run it. Yup. bad ICMP packet still present on the firewall, even on the interface of the firewall directly connected to the server.

So at this point I have proven to my satisfaction that this packet, which is ruining the communication, really comes frmo the server.

What the server guys say

Server support is outsourced. The vendor replies

As far as the patching activities go , there is nothing changed to the server except distro upgrading from 15.2 to 15.3. no other configs were changed. This is a regular procedure executed on almost all 15.2 servers in your environment. No other complains received so far…

So, the usual It’s not us, look somewhere else. So the app owner asks me for further guidance. I find it’s helpful to create a test that will convince the other party of the error with their service. And what is one test I would have liked to have seen but didn’t cnoduct? A packet trace on the server itself. So I write

I would suggest they (or you) do a packet trace on the server itself to prove to themselves that this server is not behaving ini an acceptable way, network-wise, if they see that same ICMP packet which I see.

The resolution

This kind of thing can often come to a stand-off, or many days can be wasted as an issue gets escalated to sufficiently competent technicians. In this case it wasn’t so bad. A few hours later the app owners write and mention that the home-grown local firewall seemed suspect to them. They dsabled it and this traffic began to work.

They are reaching out to the vendor to understand what may have happened.

Case: closed!

Conclusion

An IT mystery was resolved today – something we’ve never seen but were able to diagnose and overcome. We learned it’s sometimes a good thing to throw a wider net when seeing unexpected reset packets because maybe just maybe there is an ICMP host unreachable packet somewhere in the mix.

Most firewalls would just drop packets and you wait for a timeout. But this was a homegrown firewall running on SLES 15. So it abides by its own ways of working, I guess. So because of the RST, your connection closes quickly, not timing out as with a normal network firewall.

As always, one has to maintain an open mind as to the true source of an issue. What was working yesterday does not today. No one admits to changing anything. Finding clever ad hoc unit tests is the way forward, and don’t forget to validate the ad hoc test. We use curl a lot for these kinds of tests. A browser is a complex beast and too much of a black box.