Intro

Did you ever want to learn about a domain registration but were put off by the hard-sell tactics that basically every web-based whois search subjects you to? Me, too. Here’s what you can do.

The details

Linux (and that includes you, Raspberry Pi owners) has a little utility called whois that you can use to get the registrant information for a domain. For example:

$ whois johnstechtalk.com

Domain Name: JOHNSTECHTALK.COM

Registry Domain ID: 1795918838_DOMAIN_COM-VRSN

Registrar WHOIS Server: whois.godaddy.com

Registrar URL: http://www.godaddy.com

Updated Date: 2017-03-27T00:52:51Z

Creation Date: 2013-04-23T00:54:17Z

Registry Expiry Date: 2019-04-23T00:54:17Z

Registrar: GoDaddy.com, LLC

Registrar IANA ID: 146

Registrar Abuse Contact Email: abuse@godaddy.com

Registrar Abuse Contact Phone: 480-624-2505

Domain Status: clientDeleteProhibited https://icann.org/epp#clientDeleteProhibited

Domain Status: clientRenewProhibited https://icann.org/epp#clientRenewProhibited

Domain Status: clientTransferProhibited https://icann.org/epp#clientTransferProhibited

Domain Status: clientUpdateProhibited https://icann.org/epp#clientUpdateProhibited

Name Server: NS45.DOMAINCONTROL.COM

Name Server: NS46.DOMAINCONTROL.COM

DNSSEC: unsigned

URL of the ICANN Whois Inaccuracy Complaint Form: https://www.icann.org/wicf/

>>> Last update of whois database: 2018-04-19T19:59:35Z <<<

...

Admittedly, that did not tell us much, but it does point us to another whois server we can try: whois.godaddy.com. So let’s try that:

$ whois -h whois.godaddy.com johnstechtalk.com

Domain Name: JOHNSTECHTALK.COM

Registry Domain ID: 1795918838_DOMAIN_COM-VRSN

Registrar WHOIS Server: whois.godaddy.com

Registrar URL: http://www.godaddy.com

Updated Date: 2017-03-27T00:52:50Z

Creation Date: 2013-04-23T00:54:17Z

Registrar Registration Expiration Date: 2019-04-23T00:54:17Z

Registrar: GoDaddy.com, LLC

Registrar IANA ID: 146

Registrar Abuse Contact Email: abuse@godaddy.com

Registrar Abuse Contact Phone: +1.4806242505

Domain Status: clientTransferProhibited http://www.icann.org/epp#clientTransferProhibited

Domain Status: clientUpdateProhibited http://www.icann.org/epp#clientUpdateProhibited

Domain Status: clientRenewProhibited http://www.icann.org/epp#clientRenewProhibited

Domain Status: clientDeleteProhibited http://www.icann.org/epp#clientDeleteProhibited

Registry Registrant ID: Not Available From Registry

Registrant Name: ******** ******** (see Notes section below on how to view unmasked data)

Registrant Organization:

Registrant Street: ***** ****

Registrant City: Newton

Registrant State/Province: New Jersey

Registrant Postal Code: 078**

Registrant Country: US

Registrant Phone: +*.**********

Registrant Phone Ext:

Registrant Fax:

Registrant Fax Ext:

Registrant Email: ********@*****.***

Registry Admin ID: Not Available From Registry

Admin Name: ******** ******** (see Notes section below on how to view unmasked data)

...

So now we’re getting somewhere. Even so, GoDaddy masks the contact details and, one way or another, tries to push you to its web page and sell you stuff. That is not at all surprising to anyone who has ever been a GoDaddy customer (yours truly included), because that’s what they do. But not all registrars do that.
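Reading the “Registrar WHOIS Server” line out of the registry’s answer and asking that server again is mechanical enough to script. Here is a minimal POSIX shell sketch, assuming the registry’s answer contains a line of exactly that form (as the .com and .jobs answers in this post do). The function names registrar_whois_server and whois_follow are hypothetical, made up for illustration. Some whois clients already follow this referral on their own, in which case you won’t need it.

```shell
# Hypothetical helper: read a registry's whois answer on stdin and print
# the value of its first "Registrar WHOIS Server:" line (nothing if absent).
registrar_whois_server() {
    awk -F': *' '
        tolower($1) ~ /^[ \t]*registrar whois server$/ {
            v = $2
            sub(/\r$/, "", v)        # some servers end lines with CRLF
            sub(/[ \t]+$/, "", v)    # drop trailing blanks
            if (v != "") { print v; exit }
        }'
}

# Hypothetical wrapper: ask the default (registry) server first, then
# re-ask the registrar's server if the registry names one.
whois_follow() {
    registry_answer=$(whois "$1")
    referral=$(printf '%s\n' "$registry_answer" | registrar_whois_server)
    if [ -n "$referral" ]; then
        whois -h "$referral" "$1"
    else
        printf '%s\n' "$registry_answer"
    fi
}
```

Usage: `whois_follow johnstechtalk.com`. If the registry names no registrar server, you simply get the registry’s answer back.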

Here’s a real-life example which made me decide this technique should be more broadly disseminated. I searched for information on a domain in Argentina:

$ whois buenosaires.com.ar

This TLD has no whois server, but you can access the whois database at

http://www.nic.ar/

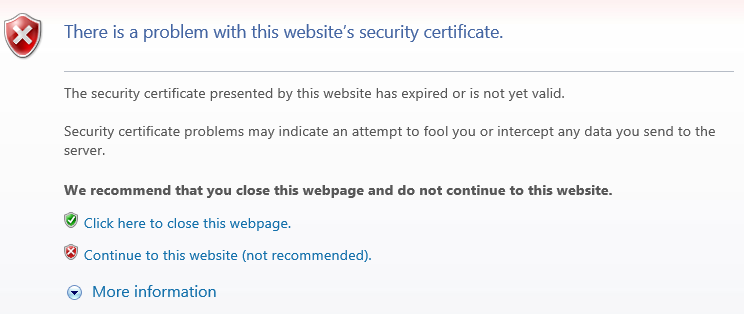

Now, if you actually try the site they suggest as a whois server, it doesn’t even work:

$ whois -h www.nic.ar buenosaires.com.ar

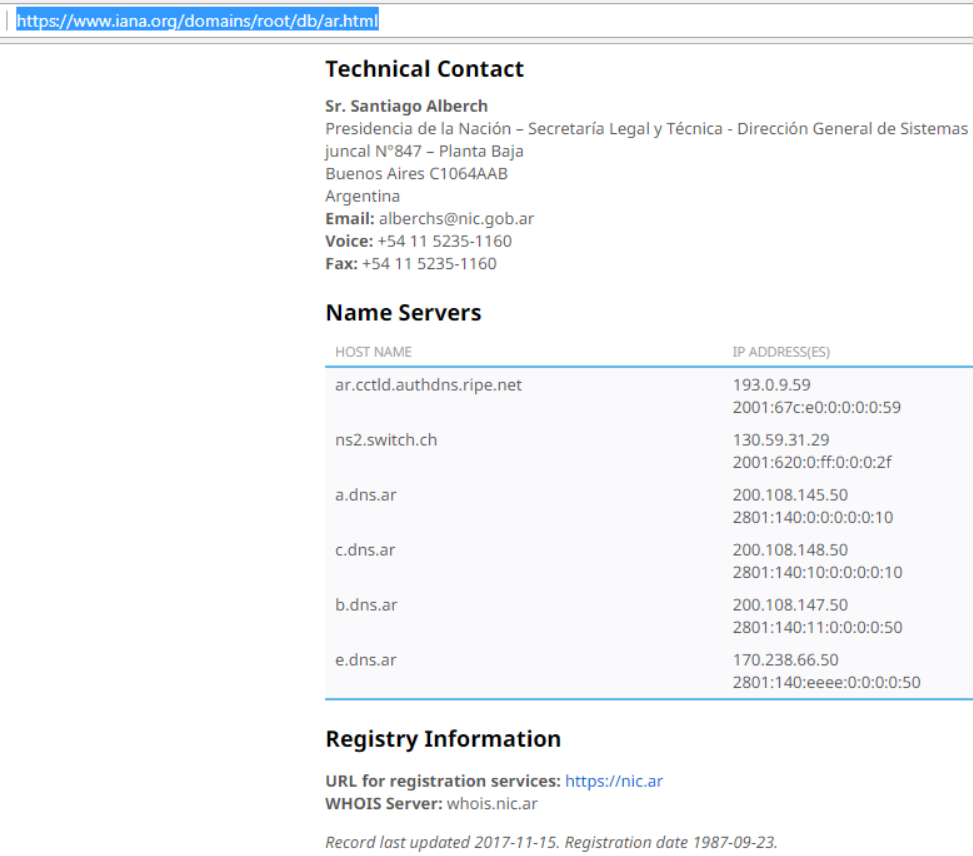

To find the correct whois server, use IANA (the Internet Assigned Numbers Authority), specifically its Root Zone Database page:

https://www.iana.org/domains/root/db

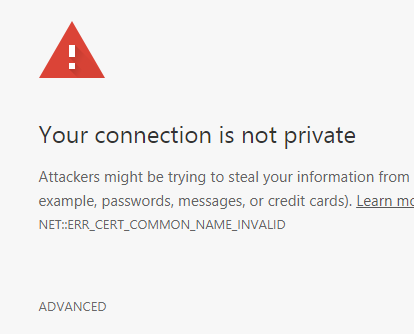

So for Argentina I clicked on .ar (I expected to find a separate listing for .com.ar, but there wasn’t one), which leads to the .ar page, https://www.iana.org/domains/root/db/ar.html.

At the bottom of that page it lists the whois server: whois.nic.ar. So I tried that and, voilà, meaningful information came back, with no ads attached:

$ whois -h whois.nic.ar buenosaires.com.ar

% La información a la que estás accediendo se provee exclusivamente para

% fines relacionados con operaciones sobre nombres de dominios y DNS,

% quedando absolutamente prohibido su uso para otros fines.

%

% La DIRECCIÓN NACIONAL DEL REGISTRO DE DOMINIOS DE INTERNET es depositaria

% de la información que los usuarios declaran con la sola finalidad de

% registrar nombres de dominio en ‘.ar’, para ser publicada en el sitio web

% de NIC Argentina.

%

% La información personal que consta en la base de datos generada a partir

% del sistema de registro de nombres de dominios se encuentra amparada por

% la Ley N° 25326 “Protección de Datos Personales” y el Decreto

% Reglamentario 1558/01.

domain: buenosaires.com.ar

registrant: 50030338720

registrar: nicar

registered: 2012-07-05 00:00:00

changed: 2017-06-27 17:42:45.944889

expire: 2018-07-05 00:00:00

contact: 50030338720

name: TRAVEL RESERVATIONS SRL

registrar: nicar

created: 2013-09-05 00:00:00

changed: 2018-04-17 13:14:55.331068

nserver: ns-1588.awsdns-06.co.uk ()

nserver: ns-925.awsdns-51.net ()

nserver: ns-1385.awsdns-45.org ()

nserver: ns-239.awsdns-29.com ()

registrar: nicar

created: 2016-07-01 00:02:28.608837

(The lines starting with % are a notice in Spanish. In English: the information you are accessing is provided exclusively for purposes related to operations on domain names and DNS, and using it for any other purpose is strictly prohibited. The National Directorate of the Internet Domain Registry (DIRECCIÓN NACIONAL DEL REGISTRO DE DOMINIOS DE INTERNET) is the custodian of the information that users declare for the sole purpose of registering domain names under ‘.ar’, for publication on the NIC Argentina website. The personal information in the database generated by the domain registration system is protected by Law No. 25326, “Personal Data Protection”, and Regulatory Decree 1558/01.)
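Clicking through IANA’s web pages works, but IANA also answers whois queries itself at whois.iana.org: ask it about a bare TLD such as ar and the reply includes a whois: line naming that TLD’s whois server. A minimal sketch, assuming the reply keeps that whois: field format; tld_of, iana_whois_field and tld_whois_server are hypothetical names. Like the IANA web page, it only looks at the last label, so buenosaires.com.ar is treated as .ar.

```shell
# Hypothetical helper: last dot-separated label of a domain, lower-cased.
# A trailing dot (fully qualified form) is ignored.
tld_of() {
    printf '%s\n' "${1%.}" | awk -F. '{ print tolower($NF) }'
}

# Hypothetical helper: print the value of the first "whois:" line in
# IANA's answer, read from stdin (nothing if IANA lists no server).
iana_whois_field() {
    awk -F': *' '
        tolower($1) == "whois" {
            v = $2
            sub(/\r$/, "", v)
            sub(/[ \t]+$/, "", v)
            if (v != "") { print v; exit }
        }'
}

# Hypothetical wrapper: ask IANA which whois server handles this domain's TLD.
tld_whois_server() {
    whois -h whois.iana.org "$(tld_of "$1")" | iana_whois_field
}
```

Usage: `whois -h "$(tld_whois_server buenosaires.com.ar)" buenosaires.com.ar`. If IANA lists no whois server for the TLD, tld_whois_server prints nothing, so check for an empty result before passing it to -h.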

2nd example: goto.jobs

I actually needed this one! I had come across the domain goto.jobs and wanted some background on it. So here goes…

$ whois goto.jobs

getaddrinfo(jobswhois.verisign-grs.com): Name or service not known

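So we’re off to a bad start: the server the whois client picks by default for .jobs, jobswhois.verisign-grs.com, doesn’t even resolve. So we hit up the .jobs page in IANA’s database, https://www.iana.org/domains/root/db/jobs.html, and spot a reference to the registry’s whois server: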

Registry Information

This domain is managed under ICANN's registrar system. You may register domains in .JOBS through an ICANN accredited registrar. The official list of ICANN accredited registrars is available on ICANN's website.

URL for registration services: http://www.goto.jobs

WHOIS Server: whois.nic.jobs

So we try that:

$ whois -h whois.nic.jobs goto.jobs

Domain Name: GOTO.JOBS

Registry Domain ID: 91478530_DOMAIN_JOBS-VRSN

Registrar WHOIS Server: whois-all.nameshare.com

Registrar URL: http://www.nameshare.com

Updated Date: 2018-03-29T20:08:46Z

Creation Date: 2010-02-04T23:54:33Z

Registry Expiry Date: 2019-02-04T23:54:33Z

Registrar: Name Share, Inc

Registrar IANA ID: 667

Registrar Abuse Contact Email:

Registrar Abuse Contact Phone:

Domain Status: clientTransferProhibited https://icann.org/epp#clientTransferProhibited

Name Server: KATE.NS.CLOUDFLARE.COM

Name Server: MARK.NS.CLOUDFLARE.COM

Name Server: NS1.REGISTRY.JOBS

Name Server: NS2.REGISTRY.JOBS

DNSSEC: unsigned

URL of the ICANN Whois Inaccuracy Complaint Form: https://www.icann.org/wicf/

>>> Last update of WHOIS database: 2018-04-23T18:54:31Z <<<

Better, but it merely points us to the registrar and its whois server:

Registrar WHOIS Server: whois-all.nameshare.com

So let’s try that:

$ whois -h whois-all.nameshare.com goto.jobs

Domain Name: GOTO.JOBS

Registry Domain ID: 91478530_DOMAIN_JOBS-VRSN

Registrar WHOIS Server: whois-jobs.nameshare.com

Registrar URL: http://www.nameshare.com

Updated Date: 2018-03-29T20:08:46Z

Creation Date: 2010-02-04T23:54:33Z

Registrar Registration Expiration Date: 2017-02-04T23:54:33Z

Registrar: NameShare, Inc.

Registrar IANA ID: 667

Registrar Abuse Contact Email: abuse-2014-2@encirca.com

Registrar Abuse Contact Phone: +1.7809429975

Domain Status: clientTransferProhibited http://www.icann.org/epp#clientTransferProhibited

Registry Registrant ID:

Registrant Name: DNS Administrator

Registrant Organization: Employ Media LLC

Registrant Street: 3029 Prospect Avenue

Registrant City: Cleveland

Registrant State/Province: OH

Registrant Postal Code: 44115

Registrant Country: United States

Registrant Phone: +1.2064261500

Registrant Phone Ext:

Registrant Fax: +1.1111111111

Registrant Fax Ext:

Registrant Email: supportgoto@goto.jobs

Registry Admin ID:

Admin Name: DNS Administrator

Admin Organization: Employ Media LLC

Admin Street: 3029 Prospect Avenue

...

Bingo! We have hit pay dirt. We have meaningful information about the registrant – an address, phone number and email address – and received no obnoxious ads in return. For me it’s worth the extra steps.
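When all you want is the registrant (or admin) block, a short filter saves scrolling through the rest. A sketch, assuming field names sit at the start of each line as in the NameShare output above; whois_fields is a hypothetical name.

```shell
# Hypothetical helper: from whois output on stdin, keep only lines whose
# field name starts with the given prefix, compared case-insensitively.
# Leading blanks and a trailing CR are stripped from each line first.
whois_fields() {
    awk -v prefix="$1" '
        { line = $0; sub(/^[ \t]+/, "", line); sub(/\r$/, "", line) }
        tolower(substr(line, 1, length(prefix))) == tolower(prefix) { print line }
    '
}
```

Usage: `whois -h whois-all.nameshare.com goto.jobs | whois_fields Registrant`. Note that a line such as “Registry Registrant ID:” is not matched, because its field name starts with “Registry”.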

ICANN: another alternative

Most registrars’ whois sites are rate-limited. ICANN’s is not, and it doesn’t sic ads on you either. It is at:

https://whois.icann.org/en/lookup?name=

ICANN, for the record, is the body that decides what goes on in the DNS namespace, for instance which new gTLDs should be added. You can use its whois tool for all gTLDs, but not, in general, for ccTLDs.
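Since the gTLD/ccTLD split decides which tool to reach for, here is a rough sketch that suggests a starting point for a given domain. It assumes the rule of thumb that ASCII ccTLDs are exactly two letters; internationalized ccTLDs (which start with xn--) break that rule and would be misclassified as gTLDs. lookup_hint is a hypothetical name, and the IANA page URL simply follows the pattern of the .jobs page linked earlier.

```shell
# Hypothetical helper: suggest where to look a domain up, based on its TLD.
# Assumption: two-letter ASCII TLDs are ccTLDs; everything else is treated
# as a gTLD (IDN ccTLDs such as xn--... are misclassified by this rule).
lookup_hint() {
    tld=$(printf '%s\n' "${1%.}" | awk -F. '{ print tolower($NF) }')
    case $tld in
        [a-z][a-z])
            printf 'ccTLD .%s: see https://www.iana.org/domains/root/db/%s.html\n' "$tld" "$tld" ;;
        *)
            printf 'gTLD .%s: https://whois.icann.org/en/lookup?name=%s\n' "$tld" "$1" ;;
    esac
}
```

Usage: `lookup_hint goto.jobs` prints the ICANN lookup URL, while `lookup_hint buenosaires.com.ar` points you to IANA’s .ar page to find the ccTLD’s own whois server.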

whois is undergoing changes because of the GDPR. In particular, the personal (“social”) information about the registrant, admin and technical contacts will be masked in the future, except perhaps for state and country. In any case, whois is slowly dying, and a new standard called RDAP (Registration Data Access Protocol) will take its place.
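RDAP returns structured JSON over HTTPS instead of free-form text, and IANA publishes a bootstrap file saying which RDAP server handles each TLD. A minimal sketch, assuming curl and jq are installed and that the bootstrap file at https://data.iana.org/rdap/dns.json keeps its published layout (a services list pairing arrays of TLDs with arrays of base URLs). The function names are hypothetical.

```shell
# IANA's RDAP bootstrap file: maps TLDs to the RDAP base URLs serving them.
RDAP_BOOTSTRAP=https://data.iana.org/rdap/dns.json

# Join an RDAP base URL (with or without trailing slash) and a domain name
# into a domain query URL: <base>/domain/<name>.
rdap_domain_url() {
    printf '%s/domain/%s\n' "${1%/}" "$2"
}

# Print the first RDAP base URL that the bootstrap file lists for a TLD
# (prints nothing if the TLD is not listed).
rdap_base_for_tld() {
    curl -fsS "$RDAP_BOOTSTRAP" |
        jq -r --arg tld "$1" \
            'first(.services[] | select(any(.[0][]; . == $tld)) | .[1][0])'
}

# Fetch the RDAP record for a domain and pretty-print the JSON.
rdap_lookup() {
    rdap_tld=$(printf '%s\n' "${1%.}" | awk -F. '{ print tolower($NF) }')
    rdap_base=$(rdap_base_for_tld "$rdap_tld")
    if [ -z "$rdap_base" ]; then
        echo "no RDAP service listed for .$rdap_tld" >&2
        return 1
    fi
    curl -fsS "$(rdap_domain_url "$rdap_base" "$1")" | jq .
}
```

Usage: `rdap_lookup goto.jobs`. Which fields come back, and how much of the contact data is redacted, depends on the registry or registrar answering.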

References and related

This page has some great tips. Wish I had seen it first! https://superuser.com/questions/758647/how-to-whois-new-tlds

Here’s that iana root zone database link again: https://www.iana.org/domains/root/db

ICANN’s whois (gTLDs only): https://whois.icann.org/en/lookup?name=