Intro

I don’t overtly chase search engine rankings. I’m comfortable being the 2,000,000th most visited site on the Internet, or something like that according to Alexa. But I still take pride in what I’m producing here. So when I read a couple of weeks ago that Google would be boosting the search rank of sites which use encryption, I felt I had to act. For me it is equally a matter of showing that I know how to do it and a chance to write another blog post which may help others.

Very few people share my situation, which is a self-hosted web site, but there may still be snippets of advice here which apply to other situations.

I pulled off the switch to using https instead of http last night. The details of how I did it are below.

The details

Actually there was nothing earth-shattering. It was a simple matter of applying stuff I already know how to do and putting it all together. Of course I made some mistakes along the way, but I also resolved them.

First the CERT

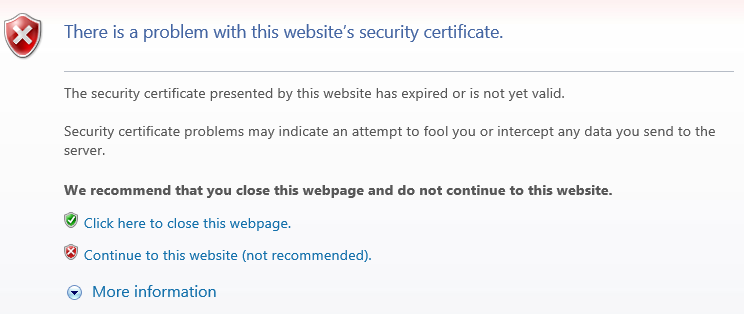

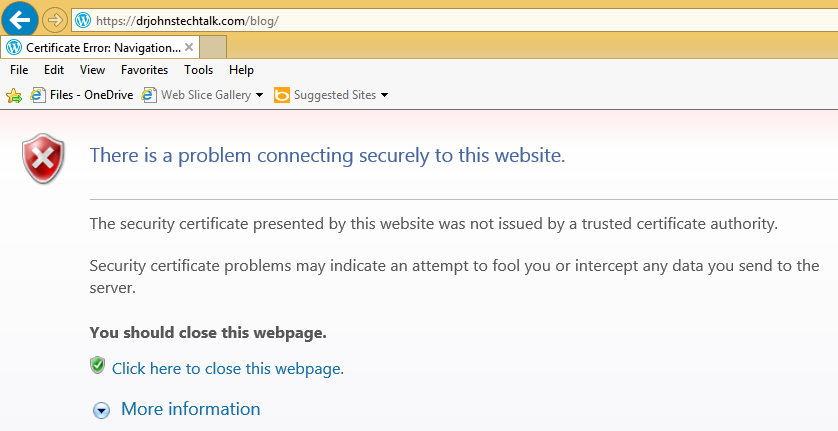

I was already running an SSL virtual server at https://drjohnstechtalk.com/ , but it was using a self-signed certificate. Being knowledgeable about certificates, I knew the first and easiest thing to do was to get a certificate (cert) issued by a recognized certificate authority (CA). Since my domain was bought from GoDaddy I decided to get my SSL certificate from them as well. It’s $69.99 for a one-year cert. Strangely, there is no economy of scale, so a two-year cert costs exactly twice as much. I normally am a strong believer in two-year certs simply to avoid the hassle of renewing, etc, but since I am out of practice and feared I could throw my money away if I messed up the cert, I went with a one-year cert this time. It turns out I had nothing to fear…

Paying for a certificate at GoDaddy is easy. Actually figuring out how to get your certificate issued by them? Not so much. But I figured out where to go on their web site and managed to do it.

Before the CERT, the CSR

Let’s back up. Remember I’m self-hosted? I love being the boss and having that Linux prompt on my CentOS VM. So before I could buy a legit cert I needed to generate a private key and certificate signing request (CSR), which I did using openssl, having no other fancy tools available and being a command line lover.

To generate the private key and CSR with one openssl command do this:

$ openssl req -new -nodes -out myreq.csr

It prompts you for field values. Here’s how that dialog went:

Generating a 2048 bit RSA private key

.............+++

..............................+++

writing new private key to 'privkey.pem'

-----

You are about to be asked to enter information that will be incorporated

into your certificate request.

What you are about to enter is what is called a Distinguished Name or a DN.

There are quite a few fields but you can leave some blank

For some fields there will be a default value,

If you enter '.', the field will be left blank.

-----

Country Name (2 letter code) [XX]:US

State or Province Name (full name) []:New Jersey

Locality Name (eg, city) [Default City]:

Organization Name (eg, company) [Default Company Ltd]:drjohnstechtalk.com

Organizational Unit Name (eg, section) []:

Common Name (eg, your name or your server's hostname) []:drjohnstechtalk.com

Email Address []:

Please enter the following 'extra' attributes

to be sent with your certificate request

A challenge password []:

An optional company name []:

and the files it created:

$ ls -ltr|tail -2

-rw-rw-r-- 1 john john 1704 Aug 23 09:52 privkey.pem

-rw-rw-r-- 1 john john 1021 Aug 23 09:52 myreq.csr
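If you would rather skip the prompts, the same request can be generated non-interactively. The following is only a sketch under some assumptions: the subject values are placeholders (example.com is not my domain), the key size is stated explicitly rather than left to the openssl default, and everything is written to a throwaway directory.

```shell
#!/bin/sh
# Sketch: create a 2048-bit RSA key and a CSR without the interactive prompts.
# The subject values below are placeholders, not the ones used in this post.
set -eu

workdir=$(mktemp -d)   # throwaway directory so no existing file is overwritten
cd "$workdir"

# Tighten the umask first so the private key is never group- or world-readable.
umask 077

openssl req -new -newkey rsa:2048 -nodes \
    -keyout privkey.pem \
    -out myreq.csr \
    -subj "/C=US/ST=New Jersey/O=example.com/CN=example.com"

ls -l privkey.pem myreq.csr
```

Stating rsa:2048 on the command line means the key length does not depend on which openssl release happens to be installed.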

Before shipping it off to a CA you really ought to examine the CSR for accuracy. Here’s how:

$ openssl req -text -in myreq.csr

Certificate Request:

Data:

Version: 0 (0x0)

Subject: C=US, ST=New Jersey, L=Default City, O=drjohnstechtalk.com, CN=drjohnstechtalk.com

Subject Public Key Info:

Public Key Algorithm: rsaEncryption

Public-Key: (2048 bit)

Modulus:

00:e9:04:ab:7e:e1:c1:87:44:fb:fe:09:e1:8d:e5:

29:1c:cb:b5:e8:d0:cc:f4:89:67:23:ab:e5:e7:a6:

...

What are we looking for, anyways? Well, the modulus should be the same as it is for the private key. To list the modulus of your private key:

$ openssl rsa -text -in privkey.pem|more
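Comparing two long hex dumps by eye is error-prone, so here is a sketch of a shortcut: print just the modulus of each file and compare a digest of the two. It generates its own throwaway key and CSR (with the placeholder name example.com) so that it runs on its own; with real files you would substitute privkey.pem and myreq.csr.

```shell
#!/bin/sh
# Sketch: confirm that a CSR and a private key belong together by comparing
# a digest of each modulus. The key pair here is a throwaway generated only
# so the snippet is self-contained.
set -eu

workdir=$(mktemp -d)
openssl req -new -newkey rsa:2048 -nodes \
    -keyout "$workdir/privkey.pem" -out "$workdir/myreq.csr" \
    -subj "/CN=example.com" 2>/dev/null

# -noout suppresses the PEM output, -modulus prints "Modulus=<hex>".
csr_digest=$(openssl req -noout -modulus -in "$workdir/myreq.csr" | openssl dgst -sha256)
key_digest=$(openssl rsa -noout -modulus -in "$workdir/privkey.pem" | openssl dgst -sha256)

if [ "$csr_digest" = "$key_digest" ]; then
    echo "modulus match"
else
    echo "modulus MISMATCH"
fi
```

The same comparison works for the issued certificate by using openssl x509 -noout -modulus on the .crt file.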

The other thing I am looking for is the common name (CN), which has to exactly match the DNS name that is used to access the secure site.

I’m not pleased about the Default City, but I didn’t want to provide my actual city. We’ll see that it doesn’t matter in the end.

For some CAs the Organization field also matters a great deal. Since I am a private individual I decided to use the CN as my organization and that was accepted by GoDaddy. So probably its value also doesn’t matter.

The other critical thing is the length of the public key, 2048 bits. These days all RSA keys should be at least 2048 bits. Some years ago 1024 bits was perfectly fine. I’m not sure, but maybe older openssl releases would have created a 1024 bit key by default, so that’s why you’ll want to watch out for that.
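The key length check is easy to script. This is a sketch, not a polished tool: csr_key_bits is a helper name made up for this example, it relies on the "Public-Key: (N bit)" line that openssl prints in the text dump, and it again works on a throwaway CSR for example.com.

```shell
#!/bin/sh
# Sketch: flag a CSR whose public key is shorter than 2048 bits.
set -eu

# Print the key length, in bits, of the CSR named in $1.
# (csr_key_bits is a hypothetical helper, not an openssl command.)
csr_key_bits() {
    openssl req -noout -text -in "$1" |
        sed -n 's/.*Public[ -]Key: (\([0-9][0-9]*\) bit).*/\1/p' |
        head -n 1
}

workdir=$(mktemp -d)
openssl req -new -newkey rsa:2048 -nodes \
    -keyout "$workdir/privkey.pem" -out "$workdir/myreq.csr" \
    -subj "/CN=example.com" 2>/dev/null

bits=$(csr_key_bits "$workdir/myreq.csr")
if [ "$bits" -ge 2048 ]; then
    echo "key length ok: $bits bits"
else
    echo "key too short: $bits bits"
fi
```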

Examine the CERT

GoDaddy issued the certificate with some random alphanumeric filename. I renamed it to something more suitable, drjohnstechtalk.crt. Let’s examine it:

$ openssl x509 -text -in drjohnstechtalk.crt|more

Certificate:

Data:

Version: 3 (0x2)

Serial Number:

27:ab:99:79:cb:55:9f

Signature Algorithm: sha256WithRSAEncryption

Issuer: C=US, ST=Arizona, L=Scottsdale, O=GoDaddy.com, Inc., OU=http://certs.godaddy.com/repository/, CN=Go Daddy Secure Certificate Authority - G2

Validity

Not Before: Aug 21 00:34:01 2014 GMT

Not After : Aug 21 00:34:01 2015 GMT

Subject: OU=Domain Control Validated, CN=drjohnstechtalk.com

Subject Public Key Info:

Public Key Algorithm: rsaEncryption

Public-Key: (2048 bit)

Modulus:

00:e9:04:ab:7e:e1:c1:87:44:fb:fe:09:e1:8d:e5:

29:1c:cb:b5:e8:d0:cc:f4:89:67:23:ab:e5:e7:a6:

...

So we’re checking the common name, the key length, and what organization, if any, they used (in the Subject field). Also the modulus should match up. Note that the Subject names only drjohnstechtalk.com and not www.drjohnstechtalk.com. Taken by itself that would mean that someone entering the URL https://www.drjohnstechtalk.com/ should get a certificate name mismatch error. In practice this does not happen. Browsers are not forgiving about name mismatches, so the more likely explanation is that the www name is listed in the X509v3 Subject Alternative Name extension, which appears further down in the output than the excerpt above and is where browsers actually look for names.
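To see which names a certificate really covers without paging through the full dump, you can pull out just the subject, the validity dates and the Subject Alternative Name extension. The sketch below builds a throwaway self-signed certificate for example.com (an assumption made purely so the snippet runs anywhere); against the real thing you would point the last two commands at drjohnstechtalk.crt.

```shell
#!/bin/sh
# Sketch: show the subject, validity window and alternative names of a cert.
set -eu

workdir=$(mktemp -d)

# Minimal config for a throwaway self-signed cert with two alternative names.
cat > "$workdir/san.cnf" <<'EOF'
[req]
distinguished_name = dn
x509_extensions = ext
prompt = no

[dn]
CN = example.com

[ext]
subjectAltName = DNS:example.com, DNS:www.example.com
EOF

openssl req -x509 -newkey rsa:2048 -nodes -days 1 \
    -config "$workdir/san.cnf" \
    -keyout "$workdir/example.key" -out "$workdir/example.crt" 2>/dev/null

# Subject and validity window only.
openssl x509 -noout -subject -dates -in "$workdir/example.crt"

# The line following the extension header lists the covered DNS names.
san=$(openssl x509 -noout -text -in "$workdir/example.crt" |
      grep -A1 'Subject Alternative Name' | tail -n 1)
echo "alternative names:$san"
```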

The apache side of the house

I don’t know if this is going to make any sense, but here goes. To begin with I had a bare-bones secure virtual server that did essentially nothing. So I modified it to be an apache redirect factory and to use my shiny brand-new legit certificate. Once I had that working I planned to swap roles and filenames with my regular configuration file, drjohns.conf.

Objective: don’t break existing links

Why the need for a redirect factory? As a matter of pride I felt this was important: it permits all the current links to my site, which are all http, not https, to continue to work! That’s a good thing, right? Now most of those links are in search engines, which constantly comb my pages, so I’m sure over time they would automatically be updated even if I didn’t bother, but I just felt better knowing that no links would be broken by switching to https. And it shows I know what I’m doing!

The secure server configuration file on my server is /etc/apache2/sites-enabled/drjohns.secure.conf. It’s an apache 2.2 server. I put all the relevant key, cert and intermediate cert files in /etc/apache2/certs. The private key’s permissions were set to 600. Here are the relevant apache configuration directives to use this cert along with the GoDaddy intermediate certificates:

SSLEngine on

SSLCertificateFile /etc/apache2/certs/drjohnstechtalk.crt

SSLCertificateKeyFile /etc/apache2/certs/drjohnstechtalk.key

SSLCertificateChainFile /etc/apache2/certs/gd_bundle-g2-g1.crt

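I initially didn’t include the intermediate certs (the chain file), which in my experience should have caused issues. I didn’t observe any problems from omitting it, possibly because browsers can already have the intermediate cached from visiting other sites, but it should be present so that every client can build the chain up to a trusted root.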
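Since a browser may hide a missing intermediate, it is safer to look at what the server actually sends. The "Certificate chain" section of openssl s_client output numbers each certificate presented (0 is the server’s own), so counting those entries tells you whether the chain file is being served. This is a sketch: chain_depth is a made-up helper name, and the sample input is invented so the snippet runs without a server.

```shell
#!/bin/sh
# Sketch: count the certificates a server presents, given s_client output
# on stdin. 1 means only the server cert was sent (no intermediates).
# (chain_depth is a hypothetical helper name.)
chain_depth() {
    # Chain entries look like " 0 s:<subject>", " 1 s:<subject>", ...
    grep -Ec '^ *[0-9]+ s:' || true
}

# Invented sample resembling the "Certificate chain" section of s_client.
chain_depth <<'EOF'
Certificate chain
 0 s:/OU=Domain Control Validated/CN=example.com
   i:/O=Example CA/CN=Example Intermediate CA
 1 s:/O=Example CA/CN=Example Intermediate CA
   i:/O=Example CA/CN=Example Root CA
EOF
```

Against a live server the input would come from something like openssl s_client -connect localhost:443 </dev/null 2>/dev/null | chain_depth.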

The redirect factory setup

For the redirect testing I referred to my own blog posting (which I think is underappreciated for whatever reason!) and have these lines:

# I really don't think this does anything other than chase away a scary warning in the error log...

RewriteLock ${APACHE_LOCK_DIR}/rewrite_lock

<VirtualHost *:80>

ServerAdmin webmaster@localhost

ServerName www.drjohnstechtalk.com

ServerAlias drjohnstechtalk.com

ServerAlias johnstechtalk.com

ServerAlias www.johnstechtalk.com

ServerAlias vmanswer.com

ServerAlias www.vmanswer.com

# Inspired by the dreadful documentation on http://httpd.apache.org/docs/2.0/mod/mod_rewrite.html

RewriteEngine on

RewriteMap redirectMap prg:redirect.pl

RewriteCond ${redirectMap:%{HTTP_HOST}%{REQUEST_URI}} ^(.+)$

# %N are backreferences to RewriteCond matches, and $N are backreferences to RewriteRule matches

RewriteRule ^/.* %1 [R=301,L]
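The redirect.pl program itself is not shown here, so what follows is only a stand-in to illustrate the contract a RewriteMap prg: program has to honor: mod_rewrite writes one lookup key per line to the program’s stdin and expects exactly one answer line back on stdout, with the string NULL meaning no match. The mapping rule (send every host alias to https://drjohnstechtalk.com and keep the path) is an assumption for illustration, and map_redirect is a made-up name.

```shell
#!/bin/sh
# Hypothetical stand-in for a RewriteMap "prg:" program. NOT the redirect.pl
# used on this site. The key is HTTP_HOST followed by REQUEST_URI,
# e.g. "www.drjohnstechtalk.com/blog/".
map_redirect() {
    while IFS= read -r key; do
        case $key in
            # Drop everything up to the first slash (the host), keep the path.
            */*) printf 'https://drjohnstechtalk.com/%s\n' "${key#*/}" ;;
            # The literal string NULL tells mod_rewrite the lookup failed.
            *)   printf 'NULL\n' ;;
        esac
    done
}

# Demo with two sample keys; a real map program would simply call
# map_redirect with no pipe so that it reads the keys apache sends.
printf '%s\n' 'www.drjohnstechtalk.com/blog/2014/08/' 'garbage' | map_redirect
```

One answer line per key matters: apache keeps the program running and blocks waiting for each reply, so a program that buffers its output or prints extra lines will hang or confuse the lookups.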

Pages look funny after the switch to SSL

One of the first casualties after the switch to SSL was that my pages looked funny. I know from general experience that this can happen if there are hard-wired links to http URLs, and that is what I observed in the page source. In particular my WP-Syntax plugin was now bleeding verbatim entries into the columns to the right if the PRE text contained long lines. Not pretty. The page source mostly had https includes, but in one place it did not. It had:

<link rel="stylesheet" href="http://drjohnstechtalk.com/blog/wp-content/plugins/wp-syntax/wp-syntax.css"

I puzzled over where that originated and I had a few ideas which didn’t turn out so well. For instance you’d think inserting this into wp-config.php would have worked:

define( 'WP_CONTENT_URL','https://drjohnstechtalk.com/blog/wp-content/');

But it had absolutely no effect. Finally I did an RTFM (the M being http://codex.wordpress.org/Editing_wp-config.php, which is mentioned in wp-config.php) and learned that the siteurl is set in the administration GUI under Settings|General, in the WordPress Address (URL) and Site Address (URL) fields. I changed these to https://drjohnstechtalk.com/blog and bingo, my plugins began to work properly again!

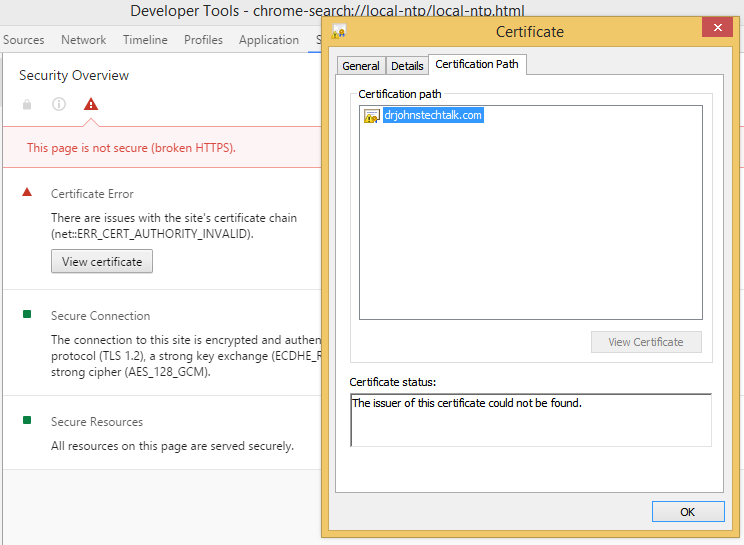

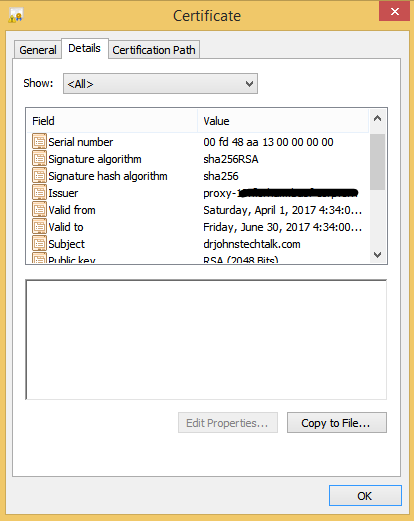

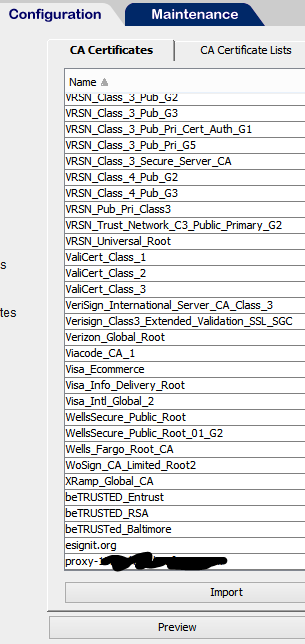

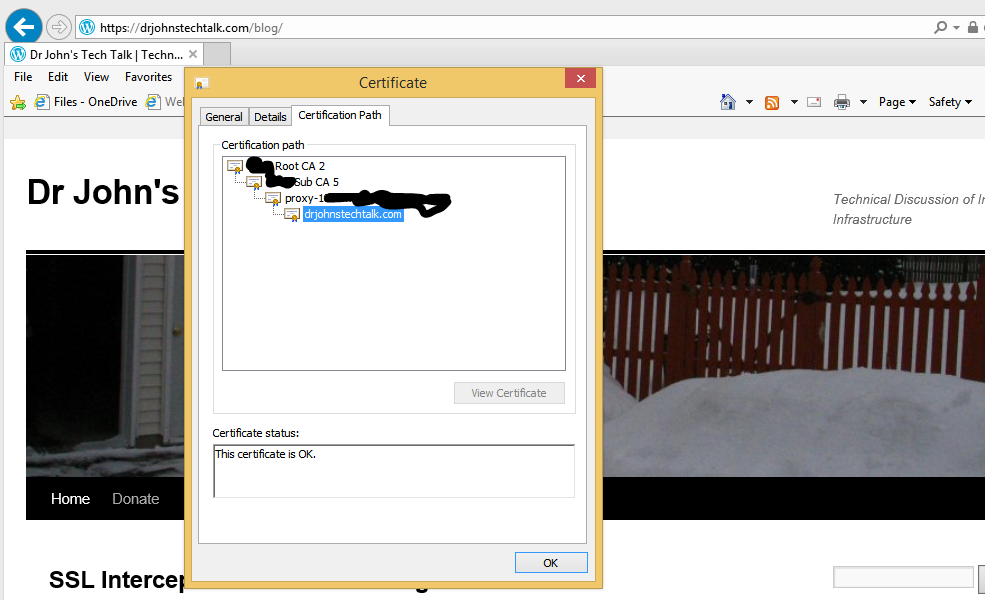

What might go wrong when turning on SSL

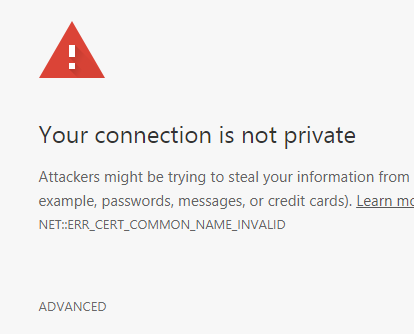

In another context I have seen both of the following errors, which I feel are poorly documented on the Internet, so I wish to mention them here since they are closely related to the topic of this blog post.

SSL routines:SSL23_GET_SERVER_HELLO:unknown protocol:s23_clnt.c:601

and

curl: (35) error:140770FC:SSL routines:SSL23_GET_SERVER_HELLO:unknown protocol

I generated the first error in the process of trying to look at the SSL web site using openssl. What I do to test that certificates are being properly presented is:

$ openssl s_client -showcerts -connect localhost:443

And the second error mentioned above I generated trying to use curl to do something similar:

$ curl -i -k https://localhost/

The solution? Well, I began to suspect that I wasn’t running SSL at all, so I tested with curl on the assumption that TCP port 443 was serving regular http:

$ curl -i http://localhost:443/

Yup. That worked just fine, producing all the usual HTTP response headers plus the content of my home page. So that means I wasn’t running SSL at all.
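That two-step diagnosis (try https, and if that fails try plain http on the same port) can be wrapped in a small function. This is a sketch under assumptions: probe_port is a made-up name, it needs curl, and the timeouts are arbitrary.

```shell
#!/bin/sh
# Sketch: report whether a port speaks TLS, plain HTTP, or nothing at all.
# (probe_port is a hypothetical helper; the 5 second limit is arbitrary.)
probe_port() {
    host=$1
    port=$2
    # -k: accept any certificate, we only care whether a TLS handshake works.
    if curl -sk -o /dev/null --max-time 5 "https://$host:$port/"; then
        echo "tls"
    # The handshake failed; see whether the port answers unencrypted HTTP.
    elif curl -s -o /dev/null --max-time 5 "http://$host:$port/"; then
        echo "plain-http"
    else
        echo "unreachable"
    fi
}

probe_port 127.0.0.1 443
```

A result of plain-http on port 443 is exactly the situation described above: the virtual host is listening, but SSL was never switched on.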

This virtual host being from a template I inherited and one I didn’t fully understand, I decided to just junk the most suspicious parts of the vhost configuration, which in my case were:

<IfDefine SSL>

<IfDefine !NOSSL>

...

</IfDefine>

</IfDefine>

and comment those guys out, giving,

#<IfDefine SSL>

#<IfDefine !NOSSL>

...

#</IfDefine>

#</IfDefine>

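That worked! After a restart I really was running SSL. An <IfDefine SSL> block is only processed when apache is started with -D SSL, so presumably my server was not being started that way and everything inside the block was silently skipped.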

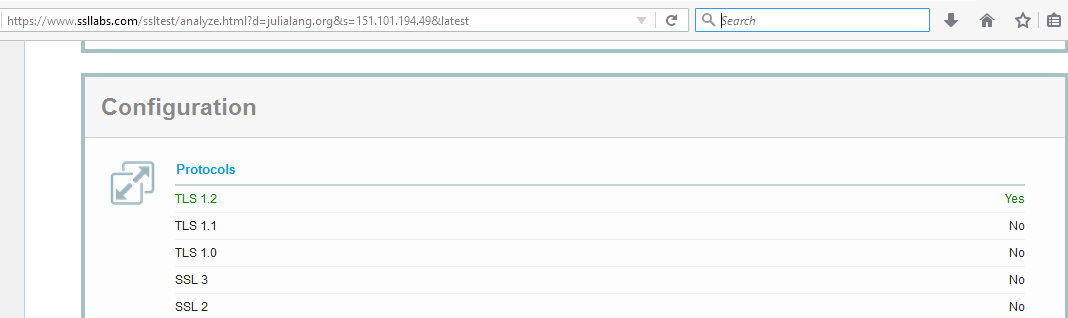

Making it stronger

I did not do this immediately, but after the POODLE vulnerability came out and I ran some tests I realized I should have explicitly chosen the protocol versions and cipher suites in my apache server to make the encryption strong enough. This section of my working with ciphers post shows some good settings.

Mixed content

I forgot about something in my delight at running my own SSL web server: not all content was coming to the browser as https, and Firefox and Internet Explorer began to complain as they grew more security-conscious over the months. After some investigation I found the culprit. I had a redirect for favicon.ico to the WordPress favicon.ico, but it was a redirect to their HTTP favicon.ico. I changed it to their secure version, https://s2.wp.com/i/favicon.ico, and all was good!

I never use the Firefox debugging tools so I got lucky. I took a guess at how to find out more about this mixed content and clicked on Tools|Web developer|Web console. My lucky break was that it immediately told me the element that was still HTTP amidst my HTTPS web page. Knowing that, it was a cinch to fix it as mentioned above.
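For hard-wired http references that are present in the page source, such as the wp-syntax stylesheet link earlier, a command line check is also possible. The following is a rough sketch: find_insecure_refs is a made-up name, the pattern only looks at src and href attributes, and it will also report ordinary http hyperlinks, which are not mixed content, so the output needs a human eye. It would not have caught my favicon case, since that came from a redirect rather than from the HTML.

```shell
#!/bin/sh
# Sketch: list http:// URLs referenced by src= or href= attributes in HTML
# read from stdin. (find_insecure_refs is a hypothetical helper name.)
find_insecure_refs() {
    grep -Eo "(src|href)=[\"']http://[^\"']+" |
        sed -E "s/^(src|href)=[\"']//" |
        sort -u
}

# Demo on two sample tags; only the first one is insecure.
find_insecure_refs <<'EOF'
<link rel="stylesheet" href="http://drjohnstechtalk.com/blog/wp-content/plugins/wp-syntax/wp-syntax.css">
<script src="https://example.com/ok.js"></script>
EOF
```

With a live page the input would come from curl -s piped into find_insecure_refs.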

Conclusion

Good ol’ search engine optimization (SEO) has prodded us to make the leap to run SSL. In this posting we showed how we did that while preserving all the links that may be floating out there on the Internet by using our redirect factory.

References

Having an apache instance dedicated to redirects is described in this article.

Some common sense steps to protect your apache server are described here.

Some other openssl commands besides the ones used here are described here.

Choosing an appropriate cipher suite and preventing use of the vulnerable SSLv2/3 is described in this post.

I read about Google’s plans to encrypt the web in this Naked Security article.