Intro

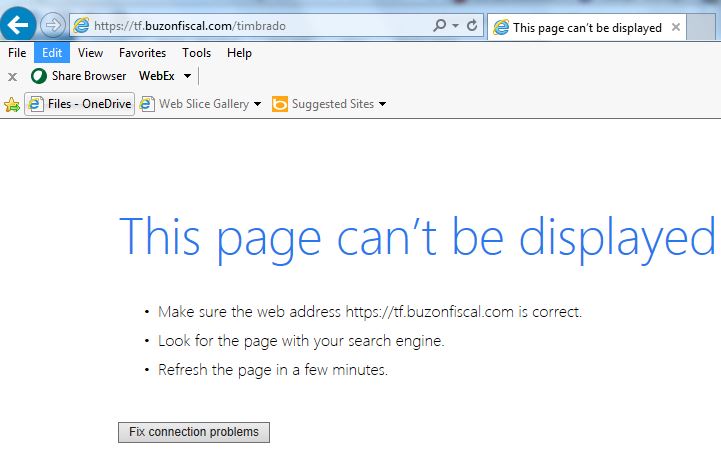

I don’t see such issues often, but today two came to my attention. Both are quasi-government sites. Here’s an example of what you see when testing with your browser if it’s Internet Explorer:

The details

Just for the fun of it, I accessed the home page https://tf.buzonfiscal.com/ and got a 200 OK page.

I learned some more about curl and found that it can tell you what is going on.

    $ curl -vv -i -k https://tf.buzonfiscal.com/

    * About to connect() to tf.buzonfiscal.com port 443 (#0)
    * Trying 23.253.28.70... connected
    * Connected to tf.buzonfiscal.com (23.253.28.70) port 443 (#0)
    * successfully set certificate verify locations:
    * CAfile: none CApath: /etc/ssl/certs/
    * SSLv3, TLS handshake, Client hello (1):
    * SSLv3, TLS handshake, Server hello (2):
    * SSLv3, TLS handshake, CERT (11):
    * SSLv3, TLS handshake, Server key exchange (12):
    * SSLv3, TLS handshake, Server finished (14):
    * SSLv3, TLS handshake, Client key exchange (16):
    * SSLv3, TLS change cipher, Client hello (1):
    * SSLv3, TLS handshake, Finished (20):
    * SSLv3, TLS change cipher, Client hello (1):
    * SSLv3, TLS handshake, Finished (20):
    * SSL connection using DHE-RSA-AES256-SHA
    * Server certificate:
    * subject: C=MX; ST=Nuevo Leon; L=Monterrey; O=Diverza informacion y Analisis SAPI de CV; CN=*.buzonfiscal.com
    * start date: 2016-07-07 00:00:00 GMT
    * expire date: 2018-07-07 23:59:59 GMT
    * subjectAltName: tf.buzonfiscal.com matched
    * issuer: C=US; O=thawte, Inc.; CN=thawte SSL CA - G2
    * SSL certificate verify ok.
    > GET / HTTP/1.1
    > User-Agent: curl/7.19.7 (x86_64-suse-linux-gnu) libcurl/7.19.7 OpenSSL/0.9.8j zlib/1.2.3 libidn/1.10
    > Host: tf.buzonfiscal.com
    > Accept: */*
    >
    < HTTP/1.1 200 OK
    HTTP/1.1 200 OK
    < Date: Fri, 22 Jul 2016 15:50:44 GMT
    Date: Fri, 22 Jul 2016 15:50:44 GMT
    < Server: Apache/2.2.4 (Win32) mod_ssl/2.2.4 OpenSSL/0.9.8e mod_jk/1.2.37
    Server: Apache/2.2.4 (Win32) mod_ssl/2.2.4 OpenSSL/0.9.8e mod_jk/1.2.37
    < Accept-Ranges: bytes
    Accept-Ranges: bytes
    < Content-Length: 23
    Content-Length: 23
    < Content-Type: text/html
    Content-Type: text/html
    <
    <html> 200 OK
    * Connection #0 to host tf.buzonfiscal.com left intact
    * Closing connection #0
    * SSLv3, TLS alert, Client hello (1):

Now look at the difference when we access the page with the problem.

    $ curl -vv -i -k https://tf.buzonfiscal.com/timbrado

    * About to connect() to tf.buzonfiscal.com port 443 (#0)
    * Trying 23.253.28.70... connected
    * Connected to tf.buzonfiscal.com (23.253.28.70) port 443 (#0)
    * successfully set certificate verify locations:
    * CAfile: none CApath: /etc/ssl/certs/
    * SSLv3, TLS handshake, Client hello (1):
    * SSLv3, TLS handshake, Server hello (2):
    * SSLv3, TLS handshake, CERT (11):
    * SSLv3, TLS handshake, Server key exchange (12):
    * SSLv3, TLS handshake, Server finished (14):
    * SSLv3, TLS handshake, Client key exchange (16):
    * SSLv3, TLS change cipher, Client hello (1):
    * SSLv3, TLS handshake, Finished (20):
    * SSLv3, TLS change cipher, Client hello (1):
    * SSLv3, TLS handshake, Finished (20):
    * SSL connection using DHE-RSA-AES256-SHA
    * Server certificate:
    * subject: C=MX; ST=Nuevo Leon; L=Monterrey; O=Diverza informacion y Analisis SAPI de CV; CN=*.buzonfiscal.com
    * start date: 2016-07-07 00:00:00 GMT
    * expire date: 2018-07-07 23:59:59 GMT
    * subjectAltName: tf.buzonfiscal.com matched
    * issuer: C=US; O=thawte, Inc.; CN=thawte SSL CA - G2
    * SSL certificate verify ok.
    > GET /timbrado HTTP/1.1
    > User-Agent: curl/7.19.7 (x86_64-suse-linux-gnu) libcurl/7.19.7 OpenSSL/0.9.8j zlib/1.2.3 libidn/1.10
    > Host: tf.buzonfiscal.com
    > Accept: */*
    >
    * SSLv3, TLS handshake, Hello request (0):
    * SSLv3, TLS handshake, Client hello (1):
    * SSLv3, TLS handshake, Server hello (2):
    * SSLv3, TLS handshake, CERT (11):
    * SSLv3, TLS handshake, Server key exchange (12):
    * SSLv3, TLS handshake, Request CERT (13):
    * SSLv3, TLS handshake, Server finished (14):
    * SSLv3, TLS handshake, CERT (11):
    * SSLv3, TLS handshake, Client key exchange (16):
    * SSLv3, TLS change cipher, Client hello (1):
    * SSLv3, TLS handshake, Finished (20):
    * SSLv3, TLS alert, Server hello (2):
    * SSL read: error:14094410:SSL routines:SSL3_READ_BYTES:sslv3 alert handshake failure, errno 0
    * Empty reply from server
    * Connection #0 to host tf.buzonfiscal.com left intact
    curl: (52) SSL read: error:14094410:SSL routines:SSL3_READ_BYTES:sslv3 alert handshake failure, errno 0
    * Closing connection #0

There is this line that we didn’t have before, which comes immediately after the server has received the GET request:

SSLv3, TLS handshake, Hello request (0):

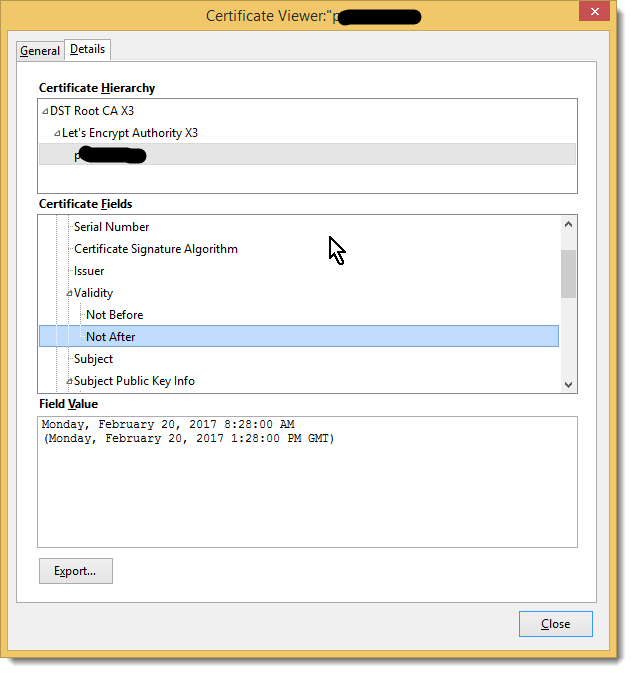

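That line is the server asking the client to renegotiate the TLS session. A few lines later, in the new handshake, comes Request CERT (13): the server is requesting a certificate from the client (sometimes known as a digital ID). curl has none to offer, so the server ends the handshake with an alert. You don’t see that often, but I guess it happens on government web sites, especially in Latin America.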
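
If you save a trace like the ones above, a few lines of shell can check it for this pattern. This is only a sketch: the function name cert_requested is made up for illustration, and the strings it looks for are the ones this particular curl/OpenSSL build printed, so other versions may word them differently.

```shell
# Hypothetical helper: reads `curl -v` output on stdin and reports whether
# the trace shows a certificate request, with or without a renegotiation.
# The matched strings are copied from the trace shown in this post.
cert_requested() {
    trace=$(cat)
    if printf '%s\n' "$trace" | grep -qF 'Request CERT (13)'; then
        if printf '%s\n' "$trace" | grep -qF 'Hello request (0)'; then
            echo "client certificate requested after renegotiation"
        else
            echo "client certificate requested"
        fi
    else
        echo "no client certificate requested"
    fi
}
```

curl writes its verbose trace to stderr, so it has to be redirected into the pipe, for example: curl -vv -i -k https://tf.buzonfiscal.com/timbrado 2>&1 | cert_requested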

Lessons learned

After all these years I have finally learned that the -vv switch in curl gives helpful debugging information of the kind we need here.

I had naively assumed that if a site requires a client certificate, it would require it for all pages. These two examples belie that assumption. Depending on the URI, the behaviour of curl is completely different. In other words, one page requires a client certificate and the other doesn’t.
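
To compare several paths on the same host in one go, a small loop over curl’s exit status is enough. Again a sketch under assumptions: probe_paths is a hypothetical name, and the exit status you get for a refused handshake may differ from the 52 seen in the trace above, depending on the curl version.

```shell
# Hypothetical helper: request several paths on one host and print curl's
# exit status for each. 0 means a response arrived; in the trace in this
# post the failed renegotiation showed up as exit status 52.
probe_paths() {
    base=$1
    shift
    for path in "$@"; do
        status=0
        # -s: no progress meter, -k: skip certificate verification,
        # -o /dev/null: discard the body, we only want the exit status
        curl -s -k -o /dev/null "$base$path" || status=$?
        echo "$path exit=$status"
    done
}
```

It would be called as: probe_paths https://tf.buzonfiscal.com / /timbrado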

Where to get this client certificate

In my experience the web site owner normally issues you your client certificate. You can try a random one, self-signed and so on, but that’s extremely unlikely to work. Having already bothered with this high level of security, why would they throw that effort away and accept a certificate that they cannot verify?
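
Once you do have a certificate, curl can present it with its --cert and --key options. The wrapper below is a minimal sketch: the function name and the file names client.pem and client.key are placeholders I made up, not something the site owner is known to hand out under those names.

```shell
# Hypothetical wrapper: present a client certificate and private key to
# the server. Checks that both files are readable before calling curl.
curl_with_client_cert() {
    cert=$1
    key=$2
    url=$3
    if [ ! -r "$cert" ] || [ ! -r "$key" ]; then
        echo "certificate or key file not readable" >&2
        return 2
    fi
    curl -v --cert "$cert" --key "$key" "$url"
}
```

Usage would look like: curl_with_client_cert client.pem client.key https://tf.buzonfiscal.com/timbrado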