Intro

A large organization needed considerable flexibility in serving out its proxy PAC file to web browsers. The legacy approach – a Perl script on web servers – was replaced by a TCL script I developed which runs on one of their F5 load balancers. In this post I lay out the requirements and how I tackled an unfamiliar language, TCL, to come up with an efficient and elegant solution.

Even if you have no interest in PAC files, if you are developing an iRule you’ll probably scratch your head figuring out the oddities of TCL programming. This post may help in that case too.

The iRule

# - DrJ 8/3/17
when CLIENT_ACCEPTED {
    set cip [IP::client_addr]
}
when HTTP_REQUEST {
    set debug 0
    # supply an X-DRJ-PAC, e.g., w/ curl, to debug: curl -H 'X-DRJ-PAC: 1.2.3.4' 50.17.188.196/proxy.pac
    if {[HTTP::header exists "X-DRJ-PAC"]} {
        # overwrite client ip from header value for debugging purposes
        log local0. "DEBUG enabled. Original ip: $cip"
        set cip [HTTP::header value "X-DRJ-PAC"]
        set debug 1
        log local0. "DEBUG. overwritten ip: $cip"
    }
    # security precaution: don't accept any old uri
    if { ! ([HTTP::uri] starts_with "/proxy.pac" || [HTTP::uri] starts_with "/proxy/proxy.cgi") } {
        drop
        log local0. "uri: [HTTP::uri], drop section. cip: $cip"
    } else {
        #
        set LDAPSUFFIX ""
        if {[HTTP::uri] ends_with "cgi"} {
            set LDAPSUFFIX "-ldap"
        }
        # determine which central location to use
        if { [class match $cip equals PAC-subnet-list] } {
            # If client IP is in the datagroup, send user to appropriate location
            set LOCATION [class lookup $cip PAC-subnet-list]
            if {$debug} {log local0. "DEBUG. match list: LOCATION: $LOCATION"}
        } elseif { $cip ends_with "0" || $cip ends_with "1" || $cip ends_with "4" || $cip ends_with "5" } {
            # client IP was not amongst the subnets, use matching of last digit of last octet to set the NJ proxy (01)
            set LOCATION "01"
            if {$debug} {log local0. "DEBUG. match last digit prefers NJ : LOCATION: $LOCATION"}
        } else {
            # set LA proxy (02) as the default choice
            set LOCATION "02"
            if {$debug} {log local0. "DEBUG. neither match list nor match digit matched: LOCATION: $LOCATION"}
        }
        HTTP::respond 200 content "
function FindProxyForURL(url, host)
{
  // o365 and other enterprise sites handled by dedicated proxy...
  var cesiteslist = \"*.aadrm.com;*.activedirectory.windowsazure.com;*.cloudapp.net;*.live.com;*.microsoft.com;*.microsoftonline-p.com;*.microsoftonline-p.net;*.microsoftonline.com;*.microsoftonlineimages.com;*.microsoftonlinesupport.net;*.msecnd.net;*.msn.co.jp;*.msn.co.uk;*.msn.com;*.msocdn.com;*.office.com;*.office.net;*.office365.com;*.onmicrosoft.com;*.outlook.com;*.phonefactor.net;*.sharepoint.com;*.windows.net;*.live.net;*.msedge.net;*.onenote.com;*.windows.com\";
  var cesites = cesiteslist.split(\";\");
  for (var i = 0; i < cesites.length; ++i){
    if (shExpMatch(host, cesites\[i\])) {
      return \"PROXY http-ceproxy-$LOCATION.drjohns.net:8081\" ;
    }
  }
  // client IP: $cip.
  // Direct connections to local domain
  if (dnsDomainIs(host, \"127.0.0.1\") ||
      dnsDomainIs(host, \".drjohns.com\") ||
      dnsDomainIs(host, \".drjohnstechtalk.com\") ||
      dnsDomainIs(host, \".vmanswer.com\") ||
      dnsDomainIs(host, \".johnstechtalk.com\") ||
      dnsDomainIs(host, \"localdomain\") ||
      dnsDomainIs(host, \".drjohns.net\") ||
      dnsDomainIs(host, \".local\") ||
      shExpMatch(host, \"10.*\") ||
      shExpMatch(host, \"192.168.*\") ||
      shExpMatch(host, \"172.16.*\") ||
      isPlainHostName(host)
     ) {
    return \"DIRECT\";
  }
  else
  {
    return \"PROXY http-proxy-$LOCATION$LDAPSUFFIX.drjohns.net:8081\" ;
  }
}
" \
        "Content-Type" "application/x-ns-proxy-autoconfig" \
        "Expires" "[clock format [expr ([clock seconds]+7200)] -format "%a, %d %h %Y %T GMT" -gmt true]"
    }
}

I knew general programming concepts before starting on this project, but not how to realize my ideas in F5’s version of TCL. So I broke the work into little pieces and demonstrated to myself that I had mastered each one. In this blog post I describe everything I learned.

How to use the browser’s IP in an iRule

If you’ve ever written an iRule you’ve probably used the section that starts with when HTTP_REQUEST. But that is not where you pick up the web browser’s IP. For that you go to a different section, when CLIENT_ACCEPTED. Then you throw it into a variable cip like this:

set cip [IP::client_addr]

And you can subsequently refer back to $cip in the when HTTP_REQUEST section.

How to send HTML (or Javascript) body content

It’s also not obvious that you can use an iRule by itself to send HTML or Javascript. After all, until this point I’d always had a back-end web server behind the load balancer for that purpose. But in fact you don’t need a back-end web server at all. The secret is this line:

HTTP::respond 200 content "

function FindProxyForURL(url, host)

...

So with this command you set the HTTP response status (200 is an OK) as well as send the body.

Variable interpolation in the body

If the body begins with " (a double quote), TCL does variable interpolation (that’s what we Perl programmers call it, anyway: variables like $cip get replaced by their values before the body is delivered to the user). You can also begin the body with a {, but what follows is then a string literal, which means no variable interpolation.

The bad thing about the " character is that if your body contains a " character, or a [ or ], you have to escape each and every one with a backslash. And mine does – a lot of them in fact.

But if you use { you don’t have to escape characters, even $, but you also don’t have a way to say “these bits are variables, interpolate them.” So if your string is dynamic you pretty much have to use ".
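One possible way around the escaping, sketched here in plain Tcl (runnable in tclsh) rather than as a tested change to the iRule: keep the body in braces so quotes and brackets stay literal, and substitute the dynamic parts with the standard string map command. The @LOCATION@ placeholder is a made-up convention for this illustration only.

```tcl
# Plain Tcl sketch; the @LOCATION@ placeholder is hypothetical.
set LOCATION "01"

# Double-quoted: $LOCATION is interpolated, but every " must be escaped.
set quoted "return \"PROXY http-proxy-$LOCATION.drjohns.net:8081\";"

# Braced: nothing needs escaping, but $LOCATION stays as literal text.
set braced {return "PROXY http-proxy-$LOCATION.drjohns.net:8081";}

# Braced template plus string map: no escaping, and the placeholder is filled in.
set template {return "PROXY http-proxy-@LOCATION@.drjohns.net:8081";}
set mapped [string map [list @LOCATION@ $LOCATION] $template]

puts $quoted
puts $braced
puts $mapped
```

The first and third lines printed are identical; the second still contains the literal text $LOCATION. Whether the extra string map call is worth it in a busy iRule is a judgment call.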

Defeat scanners

This irule will be the resource for a VS (virtual server) which effectively acts like a web server. So dumb enterprise scanners will probably come across it and scan for vulnerabilities, whether it makes sense or not. A common thing is for these scanners to scan with random URIs that some web servers are vulnerable to. My first implementation had this VS respond to any URI with the PAC file! I don’t think that’s desirable. Just my gut feeling. We’re expecting to be called by one of two different names. Hence this logic:

if { ! ([HTTP::uri] starts_with "/proxy.pac" || [HTTP::uri] starts_with "/proxy/proxy.cgi") } {

drop

...

else

(send PAC file)

The original match operator was equals, but I found that some rogue program actually appends ?Type=WMT to the normal PAC URL! How annoying. That rogue application, by the way, seems to be Windows Media Player. You can kind of see where they were going with this: for Windows Media Player you might want to present a different set of proxies, I suppose.
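A stricter alternative to starts_with would be to compare only the path part of the URI, so a query string such as ?Type=WMT is tolerated but something like /proxy.pacXYZ is not. Here is a minimal plain-Tcl sketch of that idea; the proc names path_of and is_pac_uri are hypothetical. Inside an iRule, HTTP::path should return the path without the query string, which would make the first proc unnecessary, but that variant is untested here.

```tcl
# Plain Tcl sketch (tclsh); proc names are made up for illustration.

# Return the URI with any query string removed.
proc path_of {uri} {
    set q [string first "?" $uri]
    if {$q < 0} { return $uri }
    return [string range $uri 0 [expr {$q - 1}]]
}

# Accept only the two names the PAC file is expected to be called by.
proc is_pac_uri {uri} {
    set path [path_of $uri]
    return [expr {$path eq "/proxy.pac" || $path eq "/proxy/proxy.cgi"}]
}

puts [is_pac_uri "/proxy.pac"]
puts [is_pac_uri "/proxy.pac?Type=WMT"]
puts [is_pac_uri "/proxy.pacXYZ"]
puts [is_pac_uri "/admin/login.php"]
```

The first two calls are accepted and the last two rejected, whereas a starts_with test would also accept /proxy.pacXYZ.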

Match IP against a list of subnets and pull out value

Some background. Company has two proxies with identical names except one ends in 01, the other in 02. 01 and 02 are in different locales. So we created a data group of type address: PAC-subnet-list. The idea is you put in a subnet, e.g., 10.9.7.0/24 and a proxy value, either “01” or “02”. This TCL line checks if the client IP matches one of the subnets we’ve entered into the datagroup:

if { [class match $cip equals PAC-subnet-list] } {

Then this TCL line looks the client IP up in the datagroup again, retrieves the value stored with the matching subnet, and puts it into the variable LOCATION:

set LOCATION [class lookup $cip PAC-subnet-list]

The reason for the datagroup is to have subnets with LAN-speed connection to one of the proxies use that proxy.
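To make the idea concrete, here is a rough plain-Tcl emulation of what the datagroup lookup does conceptually. It is only a sketch under assumptions: the proc names, the second subnet 10.20.0.0/16 and the flat list standing in for the datagroup are all invented for illustration, it assumes Tcl 8.5 or later for the integer arithmetic, and it returns the first matching subnet, which may not be how the F5 resolves overlapping entries.

```tcl
# Plain Tcl sketch (tclsh, Tcl 8.5+); names and sample data are hypothetical.

# Convert a dotted-quad IPv4 address to an integer.
proc ip2int {ip} {
    scan $ip "%d.%d.%d.%d" a b c d
    return [expr {($a << 24) | ($b << 16) | ($c << 8) | $d}]
}

# True if ip falls inside cidr, e.g. 10.9.7.0/24.
proc in_subnet {ip cidr} {
    foreach {net len} [split $cidr "/"] break
    set mask [expr {(0xFFFFFFFF << (32 - $len)) & 0xFFFFFFFF}]
    return [expr {([ip2int $ip] & $mask) == ([ip2int $net] & $mask)}]
}

# table is a flat list: subnet value subnet value ...
# Returns the value of the first matching subnet, or "" if none matches.
proc lookup_location {ip table} {
    foreach {cidr value} $table {
        if {[in_subnet $ip $cidr]} { return $value }
    }
    return ""
}

set table {10.9.7.0/24 01 10.20.0.0/16 02}
puts [lookup_location "10.9.7.25" $table]
puts [lookup_location "10.20.3.4" $table]
puts "no match: '[lookup_location "192.0.2.1" $table]'"
```

On the F5 itself none of this is needed, since class match and class lookup do the work against the real datagroup.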

Something weird

Now something weird happens. For clients within a subnet that doesn’t match our list, we more-or-less distribute their use of both proxies equally. So at a remote site, users with IPs ending in 0, 1, 4, or 5 use proxy 01:

elseif { $cip ends_with "0" || $cip ends_with "1" || $cip ends_with "4" || $cip ends_with "5" } {

and everyone else uses proxy 02. So users can be sitting right next to each other, each using a proxy at a different location.

Why didn’t we use a regular expression, besides the fact that we don’t know the syntax 😉 ? Read about regular expressions on the F5 DevCentral web site and the first thing it says is don’t use them! Use something else like starts_with, ends_with, … I guess the alternatives are more efficient.
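The last-digit rule can be tried out in plain Tcl to see how even the split really is. This sketch uses a glob pattern with string match in place of the four ends_with tests (ends_with is an iRule operator, not plain Tcl); the proc name pick_location and the 10.1.1.x sample addresses are hypothetical.

```tcl
# Plain Tcl sketch (tclsh); proc name and sample subnet are made up.

# Last character 0, 1, 4 or 5 -> proxy 01, anything else -> proxy 02.
proc pick_location {ip} {
    if {[string match {*[0145]} $ip]} { return "01" }
    return "02"
}

# Count how many of the 256 possible last octets land on proxy 01.
set count01 0
for {set i 0} {$i < 256} {incr i} {
    if {[pick_location "10.1.1.$i"] eq "01"} { incr count01 }
}
puts "01: $count01 of 256, 02: [expr {256 - $count01}] of 256"
# prints: 01: 104 of 256, 02: 152 of 256
```

So the rule sends roughly 40% of addresses to 01 and 60% to 02, which fits the "more-or-less" description. Adding a fifth digit to the pattern would bring it close to an even split.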

Further complexity: different proxies if called by different name

Some specialized desktops are configured to use a PAC file which ends in /proxy/proxy.cgi. This PAC file hands out different proxies which do LDAP authentication, as opposed to NTLM/IWA authentication. Hence the use of the variable LDAPSUFFIX. The rest of the logic is the same however.
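Here is a small plain-Tcl sketch of how the suffix ends up in the proxy name. The proc name proxy_host is hypothetical, and string match with *cgi stands in for the iRule's ends_with "cgi". One thing the sketch makes visible: if a client ever appended a query string to /proxy/proxy.cgi, the URI would no longer end in cgi and the non-LDAP proxy would be handed out. Whether that can happen with these desktops is not something this post establishes.

```tcl
# Plain Tcl sketch (tclsh); proc name is made up for illustration.

# Build the default proxy entry for a given request URI and location.
proc proxy_host {uri location} {
    set suffix ""
    # Emulates the iRule test: [HTTP::uri] ends_with "cgi"
    if {[string match {*cgi} $uri]} { set suffix "-ldap" }
    return "http-proxy-$location$suffix.drjohns.net:8081"
}

puts [proxy_host "/proxy.pac" "01"]
puts [proxy_host "/proxy/proxy.cgi" "02"]
puts [proxy_host "/proxy/proxy.cgi?Type=WMT" "02"]
```

The three calls print http-proxy-01.drjohns.net:8081, http-proxy-02-ldap.drjohns.net:8081 and http-proxy-02.drjohns.net:8081 respectively.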

Debugging help

I like this part – where it helps you debug the thing. Because you want to know what it’s really doing and that can be pretty hard to find out, right? You could run a trace but that’s not fun. So I created this way to do debugging.

if {[HTTP::header exists "X-DRJ-PAC"]} {

# overwrite client ip from header value for debugging purposes

log local0. "DEBUG enabled. Original ip: $cip"

set cip [HTTP::header value "X-DRJ-PAC"]

set debug 1

log local0. "DEBUG. overwritten ip: $cip"

}

It checks for a custom HTTP request header, X-DRJ-PAC. You can call it with that header, from anywhere, and for the value put the client IP you wish to test, e.g., 1.2.3.4. That will overwrite the client IP variable, cip, set the debug variable, and add some log lines which get nicely printed out into your /var/log/ltm file. So your ltm file may log info about your script’s goings-on like this:

Aug 8 14:06:48 f5drj1 info tmm[17767]: Rule /Common/PAC-irule : DEBUG enabled. Original ip: 11.195.136.89

Aug 8 14:06:48 f5drj1 info tmm[17767]: Rule /Common/PAC-irule : DEBUG. overwritten ip: 12.196.68.91

Aug 8 14:06:48 f5drj1 info tmm[17767]: Rule /Common/PAC-irule : DEBUG. match last digit prefers NJ : LOCATION: 01

And with curl it is not hard at all to send this custom header as I mention in the comments:

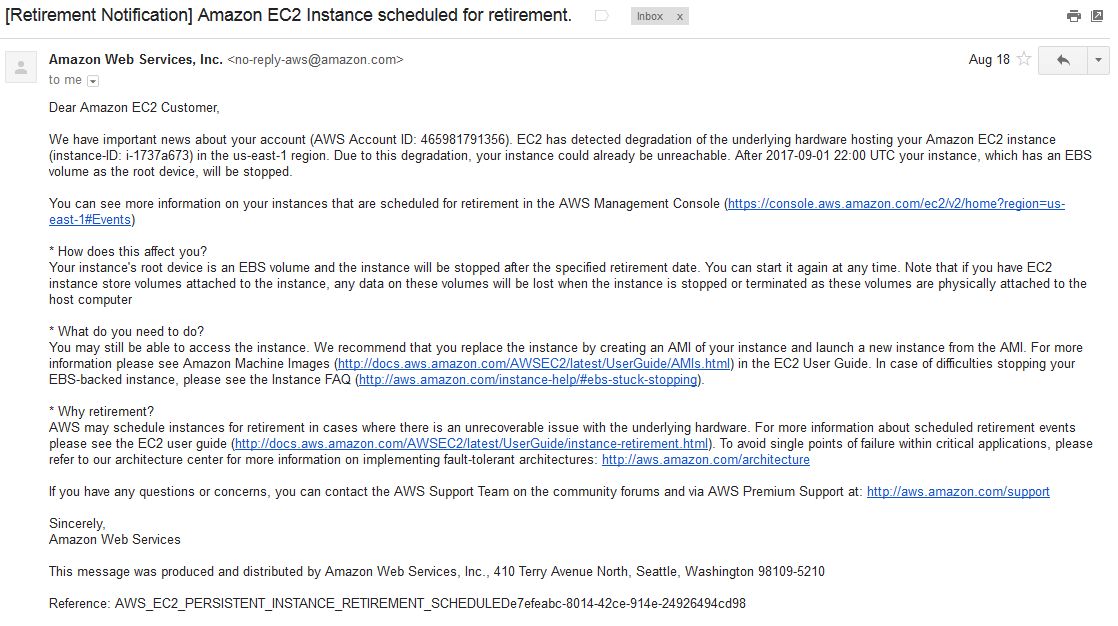

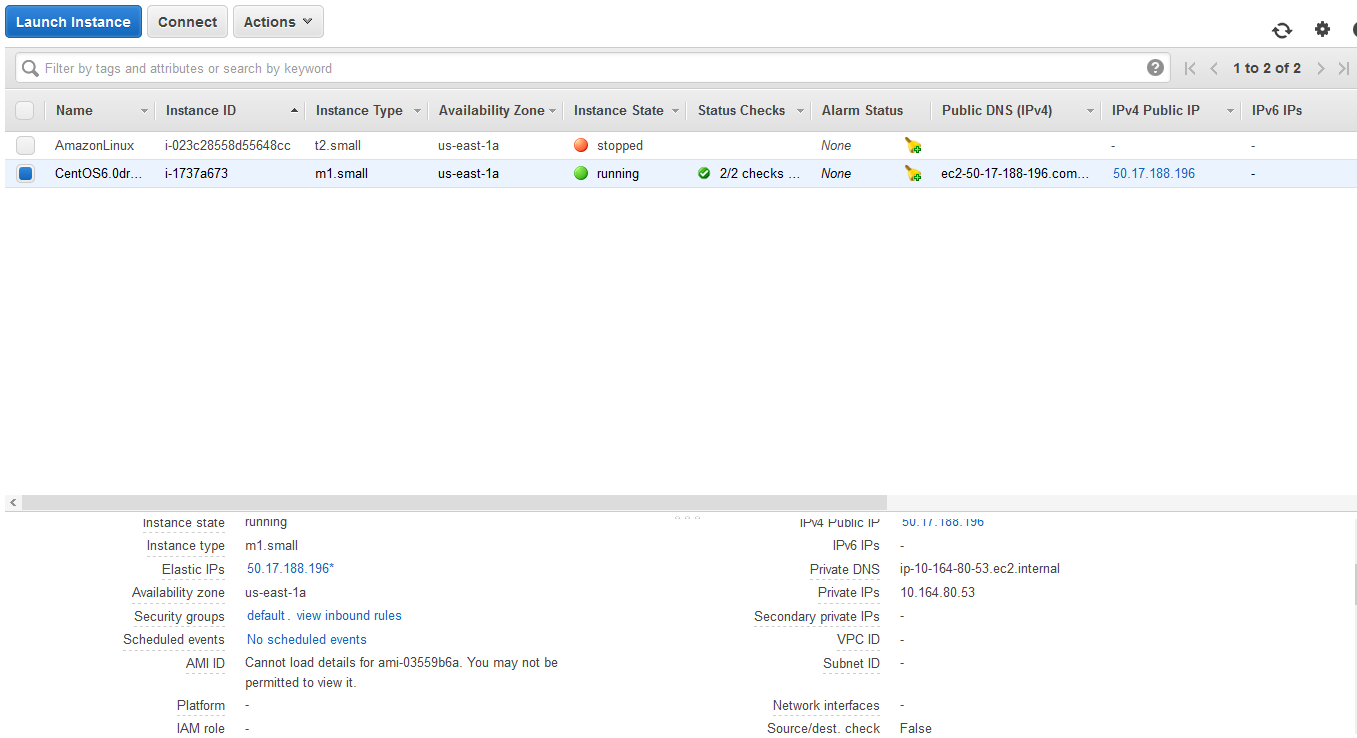

$ curl -H 'X-DRJ-PAC: 12.196.88.91' 50.17.188.196/proxy.pac

Expires header

We add an Expires header so that the PAC file is good for two hours (7200 seconds). I don’t think it does much good but it seems like the right thing to do. Don’t ask me, I just stole the whole line from F5 DevCentral.
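For the curious, the date arithmetic in that line can be tried on its own in plain Tcl. This is a sketch, not the line from the iRule: the proc name expires_header is hypothetical, and it spells the format out with %b and %H:%M:%S, which should produce the same text as the %h and %T used above.

```tcl
# Plain Tcl sketch (tclsh); proc name is made up for illustration.

# Format "now + ttl seconds" as an HTTP-style date in GMT.
proc expires_header {now ttl} {
    return [clock format [expr {$now + $ttl}] \
        -format "%a, %d %b %Y %H:%M:%S GMT" -gmt true]
}

# Two hours from now, as the iRule does.
puts [expires_header [clock seconds] 7200]

# Fixed input so the output is predictable: epoch 0 plus two hours.
puts [expires_header 0 7200]
# prints: Thu, 01 Jan 1970 02:00:00 GMT
```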

A PAC file should have the MIME type application/x-ns-proxy-autoconfig. So we set that explicit MIME type with

"Content-Type" "application/x-ns-proxy-autoconfig"

The “\” at the end of some lines is a line continuation character.

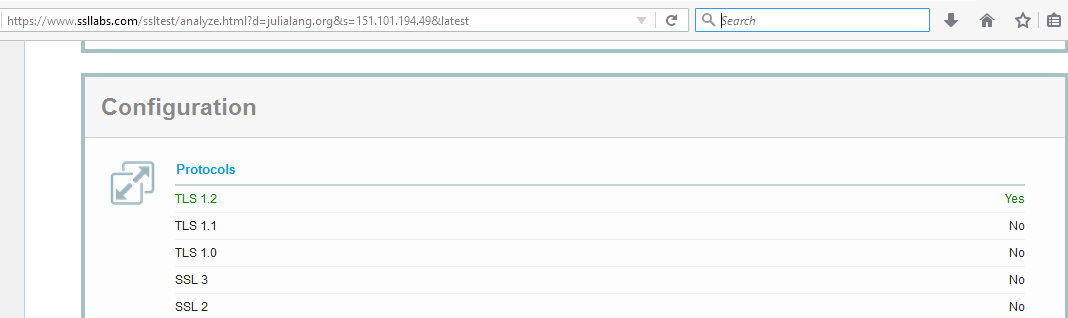

Performance

They basically need this to run several hundred times per second. Occasionally PAC file requests “go crazy.” Will the iRule cope when that happens? This part I don’t know. It has yet to be battle-tested. But it is production tested. It’s been in production for over a week. The virtual server consumes 0% of the load balancer’s CPU, which is great. And for the record, the traffic is 0.17% of total traffic from the proxy server, so very modest. So at this point I believe it will survive a usage storm much better than my Apache web servers did.

Why bother?

After I did all this work someone pointed out that this all could have been done within the Javascript of the PAC file itself! I hadn’t really thought that through. But we agreed that doesn’t feel right and may force the browser to do more evaluation than what we want. But maybe it would have executed once and the results cached somehow?? It’s hard to see how since each encountered web site could have potentially a different proxy or none at all so an evaluation should be done each time. So we always try to pass out a minimal PAC file for that reason.

Why not use Bluecoat native PAC handling ability?

Bluecoat ProxySG is great at handing out a single, fixed PAC file. It’s not so good at handing out different PAC files, and I felt it was just too much work to force it to do so.

References and related

F5’s DevCentral site is invaluable and the place where I learned virtually everything that went into the iRule shown above. devcentral.f5.com

Excessive calls to PAC file.

A more sophisticated PAC file example created by my colleague is shown here: https://drjohnstechtalk.com/blog/2021/03/tcl-irule-program-with-comments-for-f5-bigip/