Intro

I’ve been after a particular domain for years. But as a matter of pride I don’t want to overpay for it, so I don’t want to go through an auction. There are services that can grab a domain the moment it is released after expiring, but they all want $$. That may make sense for high-demand domains; mine is pretty obscure. I want to grab it quickly, perhaps within a few seconds of it becoming available, but I don’t expect any competition for it. This is what’s known as domain drop catching.

Since I already use GoDaddy as my registrar, I checked whether they offer a domain catching service. They don’t, which is strange, because they have other specialized domain services such as domain broker. They do have a service designed for much the same purpose, however, called backorder, which places an auction bid on the domain before it has expired. The cost isn’t too bad, but since I had already started down a different path, I decided to roll my own. Perhaps they have an API I could use to build my own domain catcher? It turns out they do!

It involves working with a JSON data file, which was new to me, but otherwise it’s not too bad.

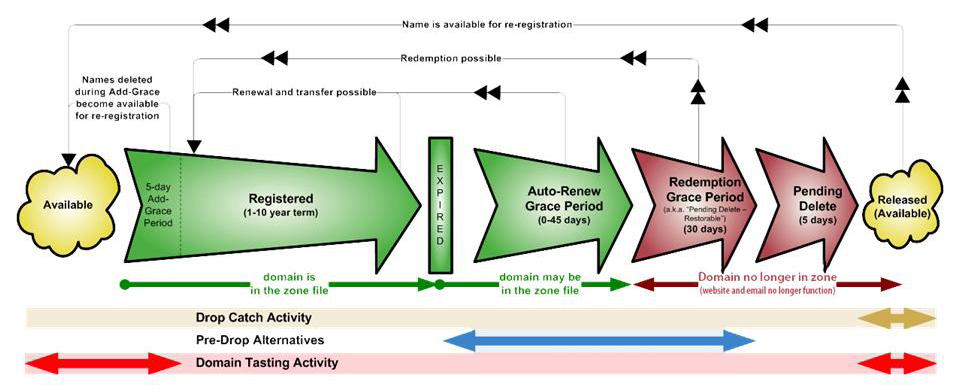

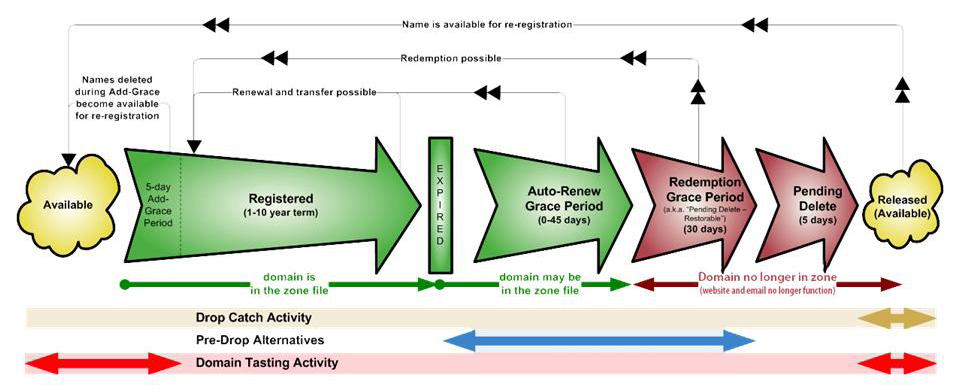

The domain lifecycle

This graphic from ICANN illustrates it perfectly for a typical generic top-level domain (gTLD) such as .com or .net:

To put it into words, there is the

initial registration,

optional renewals,

expiration date,

auto-renew grace period of 0 – 45 days,

redemption grace period of 30 days,

pending delete of 5 days, and then

it’s released and available.
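Adding up those stage lengths gives a rough window for when a domain can drop. Below is a minimal sketch, assuming GNU date and the ICANN durations listed above. Because a registrar may delete the domain anywhere from day 0 to day 45 of the auto-renew grace period, the earliest release is 30 + 5 = 35 days after expiration and the latest is 45 + 30 + 5 = 80 days. The function name release_window is made up for this example, and real registrar behavior may differ.

```shell
#!/bin/sh
# Hypothetical helper: estimate the drop window of an expired gTLD domain.
# Assumes GNU date (-d) and the ICANN stage lengths listed above:
#   auto-renew grace 0-45 days, redemption 30 days, pending delete 5 days.
# Usage: release_window YYYY-MM-DD   (the expiration date)
release_window() {
    expiry=$1
    earliest=$((0 + 30 + 5))    # registrar deletes right at expiration
    latest=$((45 + 30 + 5))     # registrar uses the full auto-renew grace
    first=$(date -u -d "$expiry +$earliest days" +%F) || return 1
    last=$(date -u -d "$expiry +$latest days" +%F) || return 1
    echo "$first $last"
}
```

For the expiration date shown in Appendix B, `release_window 2016-09-27` prints `2016-11-01 2016-12-16`.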

So in domain drop catching we care most about being fully prepared for the five-day pending-delete window. According to an old discussion I read, .com domains are usually released between 2 and 3 PM Eastern time.

A word about the GoDaddy developer site

It’s developer.godaddy.com. It looks like it will be a great site one day, but for now it is lacking in some areas. Most of the menu items are placeholders. Really there are only three (mostly) working sections: Get Started, Documentation and Demo. Get Started is only a few words and one slender snippet of Ajax code, and the demo is also extremely limited, so the only real resource they provide is Documentation.

The Documentation is interactive: you can try out the API functions with your own data. You run a call and it shows you all the request headers and data it sent, as well as the response it received. The thing is, it’s very finicky. It’s supposed to list all the available functions, but I couldn’t get it to work under Firefox, and with Internet Explorer/Edge it only worked about half the time. It seems to help to open it in a freshly launched browser. The documentation, as good as it is, also leaves some things unsaid. Here is what I found:

https://api.ote-godaddy.com/ – use for TEST. Maybe ote stands for optional test environment?

https://api.godaddy.com/ – for production (what I am calling PROD)

The TEST environment does not require authentication for some things that PROD does. This shell script for checking available domains, which I call available-test.sh, works in TEST but not in PROD:

```shell
#!/bin/sh
# pass domain as argument
# apparently no AUTH is required for this one in TEST
curl -k "https://api.ote-godaddy.com/v1/domains/available?domain=$1&checkType=FAST&forTransfer=false"
```


In PROD I had to add the authorization information: the key and secret that were shown to me on the screen. I call this script available.sh.

```shell
#!/bin/sh
# pass domain as argument
curl -s -k \
  -H 'Authorization: sso-key *******8m_PwFAffjiNmiCUrKe******:**FF73L********' \
  "https://api.godaddy.com/v1/domains/available?domain=$1&checkType=FULL&forTransfer=false"
```


I found that about five days after my domain expired, checkType=FAST and checkType=FULL gave different results, and FAST was wrong. So I learned you have to use FULL to get an answer you can trust!
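Hard-coding the key and secret into every script makes them easy to leak when you copy or share a script. Below is a minimal sketch of an alternative, assuming you export the credentials in two environment variables whose names (GODADDY_KEY, GODADDY_SECRET) are invented for this example. It builds the same sso-key header and the same /v1/domains/available URL used above, and defaults to checkType=FULL for the reason just given. The function names are hypothetical.

```shell
#!/bin/sh
# Hypothetical helpers; GODADDY_KEY and GODADDY_SECRET are illustrative names.
# Print the Authorization header in the "sso-key KEY:SECRET" form used above.
godaddy_auth_header() {
    if [ -z "$GODADDY_KEY" ] || [ -z "$GODADDY_SECRET" ]; then
        echo "GODADDY_KEY and GODADDY_SECRET must be set" >&2
        return 1
    fi
    printf 'Authorization: sso-key %s:%s\n' "$GODADDY_KEY" "$GODADDY_SECRET"
}

# Print the availability URL; FULL is the default because FAST gave wrong answers.
# Usage: availability_url DOMAIN [FAST|FULL]
availability_url() {
    domain=$1
    check=${2:-FULL}
    printf 'https://api.godaddy.com/v1/domains/available?domain=%s&checkType=%s&forTransfer=false\n' \
        "$domain" "$check"
}

# Example call (needs network access and real credentials):
#   curl -s -H "$(godaddy_auth_header)" "$(availability_url dr-johnstechtalk.com)"
```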

Example usage of an available domain

```text
$ ./available.sh dr-johnstechtalk.com
{"available":true,"domain":"dr-johnstechtalk.com","definitive":false,"price":11990000,"currency":"USD","period":1}
```


2nd example – a non-available domain

```text
$ ./available.sh drjohnstechtalk.com
{"available":false,"domain":"drjohnstechtalk.com","definitive":true,"price":11990000,"currency":"USD","period":1}
```

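To act on these responses, a script has to pull individual fields out of the JSON. Below is a minimal sketch using only sed and awk (jq would be more robust if you have it), assuming the one-line response format shown above. It also assumes, based on the 11990000 figure next to "USD", that price is expressed in millionths of the currency unit, i.e. $11.99. Treat that as an inference rather than documented fact. The function names are hypothetical.

```shell
#!/bin/sh
# Hypothetical helpers for the one-line JSON returned by /v1/domains/available.
# Print the value of an unquoted field such as true, false or a number.
# Usage: json_field JSON FIELDNAME
json_field() {
    printf '%s\n' "$1" | sed -n "s/.*\"$2\":\([^,}]*\).*/\1/p"
}

# Convert the assumed micro-unit price (e.g. 11990000) to currency units (11.99).
price_in_units() {
    awk -v p="$1" 'BEGIN { printf "%.2f\n", p / 1000000 }'
}
```

For example, with `res` holding the first response above, `json_field "$res" available` prints `true`, `json_field "$res" definitive` prints `false`, and `price_in_units "$(json_field "$res" price)"` prints `11.99`.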

Example JSON file

I had to do a lot of search and replace to preserve my anonymity, but I feel this post wouldn’t be complete without showing the real contents of the JSON file I use both for validation and, hopefully, as the basis for my API-driven domain purchase:

```json
{
  "domain": "dr-johnstechtalk.com",
  "renewAuto": true,
  "privacy": false,
  "nameServers": [
  ],
  "consent": {
    "agreementKeys": ["DNRA"],
    "agreedBy": "50.17.188.196",
    "agreedAt": "2016-09-29T16:00:00Z"
  },
  "period": 1,
  "contactAdmin": {
    "nameFirst": "Dr", "nameLast": "John",
    "email": "dr-john@gmail.com",
    "addressMailing": {
      "address1": "555 Piney Drive",
      "city": "Smallville", "state": "New Jersey", "postalCode": "55555",
      "country": "US"
    },
    "phone": "+1.5555551212"
  },
  "contactBilling": {
    "nameFirst": "Dr", "nameLast": "John",
    "email": "dr-john@gmail.com",
    "addressMailing": {
      "address1": "555 Piney Drive",
      "city": "Smallville", "state": "New Jersey", "postalCode": "55555",
      "country": "US"
    },
    "phone": "+1.5555551212"
  },
  "contactRegistrant": {
    "nameFirst": "Dr", "nameLast": "John",
    "email": "dr-john@gmail.com",
    "phone": "+1.5555551212",
    "addressMailing": {
      "address1": "555 Piney Drive",
      "city": "Smallville", "state": "New Jersey", "postalCode": "55555",
      "country": "US"
    }
  },
  "contactTech": {
    "nameFirst": "Dr", "nameLast": "John",
    "email": "dr-john@gmail.com",
    "phone": "+1.5555551212",
    "addressMailing": {
      "address1": "555 Piney Drive",
      "city": "Smallville", "state": "New Jersey", "postalCode": "55555",
      "country": "US"
    }
  }
}
```


Note the agreementKeys value: DNRA (presumably short for Domain Name Registration Agreement). GoDaddy doesn’t document this very well, but that is what you need to put there! Note also that nameServers is left empty; I asked GoDaddy and that is what they advised. The other values are pretty much what you’d expect. I used my own server’s IP address for agreedBy; use your own IP. I don’t know how important it is to get the agreedAt date close to the current time. I’m going to assume it should be within 24 hours of the current time.
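Since agreedAt should be close to the current time, it is easier to generate it than to type it. The revised validate.sh later in this post derives it from `date --rfc-3339` plus two sed edits. A shorter route, assuming any POSIX date, is to ask date for the exact format directly. Both variants are shown below; the function names are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: current UTC time in the agreedAt format,
# e.g. 2016-09-29T16:00:00Z (same shape as the example JSON above).
agreed_at() {
    date -u +%Y-%m-%dT%H:%M:%SZ
}

# The same value via the GNU-only pipeline used in the revised validate.sh.
agreed_at_rfc3339() {
    date -u --rfc-3339=seconds | sed 's/ /T/; s/+.*/Z/'
}
```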

How do we test this JSON input file? I wrote a script for that, which I call validate.sh.

```shell
#!/bin/sh
# DrJ 9/2016
# godaddy-json-register was built using GoDaddy's documentation at https://developer.godaddy.com/doc#!/_v1_domains/validate
jsondata=$(tr -d '\n' < godaddy-json-register)
curl -i -k \
  -H 'Authorization: sso-key *******8m_PwFAffjiNmiCUrKe******:**FF73L********' \
  -H 'Content-Type: application/json' \
  -H 'Accept: application/json' \
  -d "$jsondata" \
  https://api.godaddy.com/v1/domains/purchase/validate
```


Run the validate script

```text
$ ./validate.sh
HTTP/1.1 100 Continue
HTTP/1.1 100 Continue
Via: 1.1 api.godaddy.com
HTTP/1.1 200 OK
Date: Thu, 29 Sep 2016 20:11:33 GMT
X-Powered-By: Express
Vary: Origin,Accept-Encoding
Access-Control-Allow-Credentials: true
Content-Type: application/json; charset=utf-8
ETag: W/"2-mZFLkyvTelC5g8XnyQrpOw"
Via: 1.1 api.godaddy.com
Transfer-Encoding: chunked
```

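With -i, curl prints every status line it receives, including the interim 100 Continue responses, so the real answer is the last line starting with HTTP/. Below is a minimal sketch that extracts that final status code from piped curl -i output. It is an alternative to matching the text "200 OK" anywhere in the output, and the function name is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read `curl -i` output on stdin and print the status
# code of the final response, skipping interim "100 Continue" lines.
final_status() {
    tr -d '\r' | awk '/^HTTP\// { code = $2 } END { if (code != "") print code }'
}
```

For a run like the one above, `./validate.sh | final_status` should print just `200`.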

Revised versions of the above scripts

I revised all the scripts so the domain name can be passed as an argument. They also fill in an agreedAt date that is current.

The data file: godaddy-json-register

```json
{
  "domain": "DOMAIN",
  "renewAuto": true,
  "privacy": false,
  "nameServers": [
  ],
  "consent": {
    "agreementKeys": ["DNRA"],
    "agreedBy": "50.17.188.196",
    "agreedAt": "DATE"
  },
  "period": 1,
  "contactAdmin": {
    "nameFirst": "Dr", "nameLast": "John",
    "email": "dr-john@gmail.com",
    "addressMailing": {
      "address1": "555 Piney Drive",
      "city": "Smallville", "state": "New Jersey", "postalCode": "55555",
      "country": "US"
    },
    "phone": "+1.5555551212"
  },
  "contactBilling": {
    "nameFirst": "Dr", "nameLast": "John",
    "email": "dr-john@gmail.com",
    "addressMailing": {
      "address1": "555 Piney Drive",
      "city": "Smallville", "state": "New Jersey", "postalCode": "55555",
      "country": "US"
    },
    "phone": "+1.5555551212"
  },
  "contactRegistrant": {
    "nameFirst": "Dr", "nameLast": "John",
    "email": "dr-john@gmail.com",
    "phone": "+1.5555551212",
    "addressMailing": {
      "address1": "555 Piney Drive",
      "city": "Smallville", "state": "New Jersey", "postalCode": "55555",
      "country": "US"
    }
  },
  "contactTech": {
    "nameFirst": "Dr", "nameLast": "John",
    "email": "dr-john@gmail.com",
    "phone": "+1.5555551212",
    "addressMailing": {
      "address1": "555 Piney Drive",
      "city": "Smallville", "state": "New Jersey", "postalCode": "55555",
      "country": "US"
    }
  }
}
```


validate.sh

```shell
#!/bin/sh
# DrJ 10/2016
# godaddy-json-register was built using GoDaddy's documentation at https://developer.godaddy.com/doc#!/_v1_domains/validate
# pass domain as argument
domain=$1
# get date into accepted format, e.g. 2016-10-05T19:20:46Z
date=$(date -u --rfc-3339=seconds | sed 's/ /T/; s/+.*/Z/')
jsondata=$(tr -d '\n' < godaddy-json-register)
# fill in the DATE and DOMAIN placeholders of the template
jsondata=$(printf '%s' "$jsondata" | sed "s/DATE/$date/; s/DOMAIN/$domain/")
#echo date is $date
#echo jsondata is $jsondata
curl -i -k \
  -H 'Authorization: sso-key *******8m_PwFAffjiNmiCUrKe******:**FF73L********' \
  -H 'Content-Type: application/json' \
  -H 'Accept: application/json' \
  -d "$jsondata" \
  https://api.godaddy.com/v1/domains/purchase/validate
```

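The placeholder substitution in validate.sh works, but nothing checks that a domain was actually passed. Run with no argument, it would send "domain": "" to GoDaddy. Below is a minimal sketch of a stricter template filler, assuming the DOMAIN and DATE placeholders of godaddy-json-register. The function name and the simple domain-shape check are illustrative choices of mine, not anything GoDaddy requires.

```shell
#!/bin/sh
# Hypothetical helper: fill the DATE and DOMAIN placeholders of a JSON template.
# Usage: fill_template TEMPLATE_FILE DOMAIN
fill_template() {
    template=$1
    domain=$2
    # Accept only name.tld-shaped input: letters, digits, hyphens and inner dots.
    case $domain in
        ''|.*|*.|*[!A-Za-z0-9.-]*)
            echo "refusing suspicious domain: '$domain'" >&2; return 1 ;;
        *.*) ;;
        *)  echo "refusing suspicious domain: '$domain'" >&2; return 1 ;;
    esac
    [ -r "$template" ] || { echo "cannot read $template" >&2; return 1; }
    now=$(date -u +%Y-%m-%dT%H:%M:%SZ)
    # DATE is replaced first so a domain containing "DATE" cannot be mangled.
    json=$(tr -d '\n' < "$template" | sed "s/DATE/$now/; s/DOMAIN/$domain/")
    # Make sure no placeholder survived the substitution.
    case $json in
        *'"DOMAIN"'*|*'"DATE"'*) echo "placeholder left in $template" >&2; return 1 ;;
    esac
    printf '%s\n' "$json"
}
```

validate.sh or purchase.sh could then build their payload with `jsondata=$(fill_template godaddy-json-register "$1") || exit 1`.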

available.sh

No change. See listing above.

purchase.sh

It’s the same as validate.sh except for the URL (and curl’s -s flag). I still need to test that it really works, but based on my reading I think it will.

```shell
#!/bin/sh
# DrJ 10/2016
# godaddy-json-register was built using GoDaddy's documentation at https://developer.godaddy.com/doc#!/_v1_domains/purchase
# pass domain as argument
domain=$1
# get date into accepted format, e.g. 2016-10-05T19:20:46Z
date=$(date -u --rfc-3339=seconds | sed 's/ /T/; s/+.*/Z/')
jsondata=$(tr -d '\n' < godaddy-json-register)
# fill in the DATE and DOMAIN placeholders of the template
jsondata=$(printf '%s' "$jsondata" | sed "s/DATE/$date/; s/DOMAIN/$domain/")
#echo date is $date
#echo jsondata is $jsondata
curl -s -i -k \
  -H 'Authorization: sso-key *******8m_PwFAffjiNmiCUrKe******:**FF73L********' \
  -H 'Content-Type: application/json' \
  -H 'Accept: application/json' \
  -d "$jsondata" \
  https://api.godaddy.com/v1/domains/purchase
```


Putting it all together

Here’s a looping script I call loop.pl. I switched to Perl because it makes certain control operations easier.

```perl
#!/usr/bin/perl
#DrJ 10/2016
$DEBUG = 0;
$status = 0;
open STDOUT, '>', "loop.log" or die "Can't redirect STDOUT: $!";
open STDERR, ">&STDOUT" or die "Can't dup STDOUT: $!";
select STDERR; $| = 1; # make unbuffered
select STDOUT; $| = 1; # make unbuffered
# edit this and change to your about-to-expire domain
$domain = "dr-johnstechtalk.com";
while ($status != 200) {
    # show that we're alive and working...
    print "Now it's ".`date` if $i++ % 10 == 0;
    $hr = `date +%H`;
    chomp($hr);
    # run loop more aggressively during the times of day we think the .com registry releases domains back to the pool, esp. around 2 - 3 PM Eastern
    $sleep = $hr > 11 && $hr < 16 ? 1 : 15;
    print "Hr,sleep: $hr,$sleep\n" if $DEBUG;
    $availRes = `./available.sh $domain`;
    # {"available":true,"domain":"dr-johnstechtalk.com","definitive":false,"price":11990000,"currency":"USD","period":1}
    print "$availRes\n" if $DEBUG;
    ($available) = $availRes =~ /^\{"available":([^,]+),/;
    print "$available\n" if $DEBUG;
    if ($available eq "false") {
        print "test comparison OP for false result\n" if $DEBUG;
    } elsif ($available eq "true") {
        # available value of true is extremely unreliable with many false positives. Confirm availability by making a 2nd call
        print "available.sh results: $availRes\n";
        $availRes = `./available.sh $domain`;
        print "available.sh re-test results: $availRes\n";
        ($available2) = $availRes =~ /^\{"available":([^,]+),/;
        next if $available2 eq "false";
        # We got two available eq true results in a row so let's try to buy it!
        print "$domain is very likely available. Trying to buy it at ".`date`;
        open(BUY, "./purchase.sh $domain |") || die "Cannot run ./purchase.sh $domain!!\n";
        while (<BUY>) {
            # print out each line so we can analyze what happened
            print;
            # we got it if we got back a 200 status line;
            # matches "HTTP/1.1 200 OK" as well as "HTTP/2 200"
            if (/^HTTP\/\S+ 200\b/) {
                print "We just bought $domain at ".`date`;
                $status = 200;
            }
        } # end of loop over results of purchase
        close(BUY);
        print "\n";
        exit if $status == 200;
    } else {
        print "available is neither false nor true: $available\n";
    }
    sleep($sleep);
}
```


Running the loop script

```shell
$ nohup ./loop.pl > loop.log 2>&1 &
```

Stopping the loop script

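```shell
$ kill -9 %1
```

%1 only refers to the job from the same shell session that started it. From another session, find the process ID with ps or use pkill -f loop.pl.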

Description of loop.pl

I gotta say this loop script started out much simpler. Fortunately I started on it many days before my desired domain actually became available, so I got to see and work out all the bugs. Contributing to the problem is that GoDaddy’s API results are quite unreliable: I was seeing a lot of false positives, almost 20%. So I decided to require two consecutive calls to available.sh to return true. I could have required both available and definitive to be true, but I’m afraid that would make me late to the party. The API is not documented to that level of detail, so there’s no help there. So far, though, what I have seen is that when available incorrectly returns true, definitive is false at the same time, whereas all other times definitive is true.
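The decision rule described above fits in a few lines of shell. Below is a minimal sketch, assuming the same one-line JSON responses. It succeeds only when two consecutive checks both report available:true. An optional third argument, strict, also requires definitive:true on the second check. That option is my own addition, based on the observation that the false positives came with definitive:false. The function name is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper mirroring loop.pl's "two trues in a row" rule.
# Usage: confirm_available FIRST_RESPONSE SECOND_RESPONSE [strict]
confirm_available() {
    for res in "$1" "$2"; do
        case $res in
            *'"available":true'*) ;;
            *) return 1 ;;
        esac
    done
    # Strict mode: also demand that the second answer is definitive.
    if [ "$3" = strict ]; then
        case $2 in
            *'"definitive":true'*) ;;
            *) return 1 ;;
        esac
    fi
    return 0
}
```

In a loop you would call available.sh twice and only run purchase.sh when `confirm_available "$first" "$second"` succeeds.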

Results of running an earlier and simpler version of loop.pl

This shows all manner of false positives. But at least it never allowed me to buy the domain when it wasn’t available.

```text
Now it's Wed Oct 5 15:20:01 EDT 2016
Now it's Wed Oct 5 15:20:19 EDT 2016
Now it's Wed Oct 5 15:20:38 EDT 2016
available.sh results: {"available":true,"domain":"dr-johnstechtalk.com","definitive":false,"price":11990000,"currency":"USD","period":1}
dr-johnstechtalk.com is available. Trying to buy it at Wed Oct 5 15:20:46 EDT 2016
HTTP/1.1 100 Continue
HTTP/1.1 100 Continue
Via: 1.1 api.godaddy.com
HTTP/1.1 422 Unprocessable Entity
Date: Wed, 05 Oct 2016 19:20:47 GMT
X-Powered-By: Express
Vary: Origin,Accept-Encoding
Access-Control-Allow-Credentials: true
Content-Type: application/json; charset=utf-8
ETag: W/"7d-O5Dw3WvJGo8h30TqR7j8zg"
Via: 1.1 api.godaddy.com
Transfer-Encoding: chunked
{"code":"UNAVAILABLE_DOMAIN","message":"The specified `domain` (dr-johnstechtalk.com) isn't available for purchase","name":"ApiError"}
Now it's Wed Oct 5 15:20:58 EDT 2016
Now it's Wed Oct 5 15:21:16 EDT 2016
Now it's Wed Oct 5 15:21:33 EDT 2016
available.sh results: {"available":true,"domain":"dr-johnstechtalk.com","definitive":false,"price":11990000,"currency":"USD","period":1}
dr-johnstechtalk.com is available. Trying to buy it at Wed Oct 5 15:21:34 EDT 2016
HTTP/1.1 100 Continue
HTTP/1.1 100 Continue
Via: 1.1 api.godaddy.com
HTTP/1.1 422 Unprocessable Entity
Date: Wed, 05 Oct 2016 19:21:36 GMT
X-Powered-By: Express
Vary: Origin,Accept-Encoding
Access-Control-Allow-Credentials: true
Content-Type: application/json; charset=utf-8
ETag: W/"7d-O5Dw3WvJGo8h30TqR7j8zg"
Via: 1.1 api.godaddy.com
Transfer-Encoding: chunked
{"code":"UNAVAILABLE_DOMAIN","message":"The specified `domain` (dr-johnstechtalk.com) isn't available for purchase","name":"ApiError"}
Now it's Wed Oct 5 15:21:55 EDT 2016
Now it's Wed Oct 5 15:22:12 EDT 2016
Now it's Wed Oct 5 15:22:30 EDT 2016
available.sh results: {"available":true,"domain":"dr-johnstechtalk.com","definitive":false,"price":11990000,"currency":"USD","period":1}
dr-johnstechtalk.com is available. Trying to buy it at Wed Oct 5 15:22:30 EDT 2016
...
```


These results show why I had to further refine the script to reduce the frequent false positives.

Review

What have we done? Our looping script, loop.pl, polls more aggressively during the time of day we think the .com registry (Verisign) releases expired domains, around 2 PM Eastern. In case we’re wrong about that, it runs at all other hours too, just less often. During the aggressive period, which the script defines as noon to 4 PM by the server’s clock, it sleeps just one second between calls to available.sh; otherwise it sleeps 15 seconds. When the domain finally becomes available (two positive checks in a row), we call purchase.sh on it, write some timestamps and the domain we’ve just registered to our log file, and exit.
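The scheduling rule in loop.pl compares `date +%H` against the server’s local clock. If the server runs on UTC, as many cloud servers do by default, the noon-to-4 PM window falls in the Eastern morning instead. Below is a minimal sketch of the same rule that takes the hour as an argument, plus a helper that reads the hour in New York time. It assumes the system has time zone data for America/New_York installed; without it, TZ may silently fall back to UTC. Both function names are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper reproducing loop.pl's rule: poll every second from
# 12:00 to 15:59, every 15 seconds otherwise. Prints the sleep in seconds.
sleep_for_hour() {
    if [ "$1" -gt 11 ] && [ "$1" -lt 16 ]; then
        echo 1
    else
        echo 15
    fi
}

# Current hour (00-23) in Eastern time, whatever the server's own time zone is.
eastern_hour() {
    TZ=America/New_York date +%H
}

# In a polling loop:  sleep "$(sleep_for_hour "$(eastern_hour)")"
```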

Performance

Miserable. This API seems tuned for relative ease of use, not speed. The validate call often takes, oh, say 40 seconds to return! I expect the purchase call will be no different. For a domainer that’s a lifetime. So any strategy that relies on speed had better turn to a registrar that’s tuned for it. I think GoDaddy is aiming more at resellers of its services.
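To put your own numbers on this latency, you can time each call. Below is a minimal sketch of a wrapper that reports how long any command takes, in whole seconds, using `date +%s` (available in GNU and BSD date). It works around validate.sh and purchase.sh unchanged. The function name is hypothetical. For a single request, curl can also time itself through its --write-out variable time_total, as noted in the final comment.

```shell
#!/bin/sh
# Hypothetical helper: run a command and report its wall-clock duration
# (whole seconds) on stderr, so stdout stays clean for parsing.
# Usage: time_call COMMAND [ARGS...]
time_call() {
    start=$(date +%s)
    "$@"
    rc=$?
    end=$(date +%s)
    echo "took $((end - start))s: $*" >&2
    return $rc
}

# Example: time_call ./validate.sh dr-johnstechtalk.com > validate.out
# curl alternative: curl -s -o /dev/null -w '%{time_total}\n' URL
```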

Don’t have a Linux environment handy?

Of course I’m using my own Amazon AWS server for this, but that needn’t be a barrier to entry. I could have used one of my Raspberry Pis. Probably even Cygwin on a Windows PC could be made to work.

Appendix A

How to remove all newline characters from your JSON file

Let’s say you have a nice JSON file called godaddy-json-register, created for you from the Documentation exercises. It will contain lots of newline (“\n”) characters, assuming you’re using a Linux server. To remove them and put the output into a file called compact-json:

```shell
$ tr -d '\n' < godaddy-json-register > compact-json
```

I like this because then I can still use curl rather than wget to make my API calls.
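To double-check that the compacted file really is a single line, `wc -l` should report 0, since it counts newline characters. Note also that curl’s `-d @filename` form strips carriage returns and newlines from the file it reads, so compacting first is a convenience rather than a requirement. Below is a minimal sketch with a hypothetical function name. It also drops carriage returns, in case the file was edited on Windows.

```shell
#!/bin/sh
# Hypothetical helper: write a newline-free copy of a JSON file and verify it.
# Usage: compact_json INPUT OUTPUT
compact_json() {
    # Delete both CR and LF so files edited on Windows are handled too.
    tr -d '\r\n' < "$1" > "$2" || return 1
    # wc -l counts newline characters, so a fully compacted file reports 0.
    [ "$(wc -l < "$2" | tr -d ' ')" -eq 0 ]
}
```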

Appendix B

What an expiring domain looks like in whois

Run this from a Linux server:

```text
$ whois <expiring-domain.com>
Domain Name: expiring-domain.com
...
Creation Date: 2010-09-28T15:55:56Z
Registrar Registration Expiration Date: 2016-09-27T21:00:00Z
...
Domain Status: clientDeleteHold
Domain Status: clientDeleteProhibited
Domain Status: clientTransferProhibited
...
```


See that Domain Status: clientDeleteHold line? You don’t get that for regular domains whose registration is still current. They’ll usually have the two status lines below it, but not that one. This output is for my desired domain, just a few days after its official expiration date.
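This check can also be scripted, so that a cron job or the loop can tell when a domain enters the later lifecycle stages. Below is a minimal sketch, assuming whois output in the "Domain Status:" format shown above. Besides clientDeleteHold, which is what I observed, it also looks for redemptionPeriod and pendingDelete, the standard EPP statuses for the redemption and pending-delete stages. Output formats differ between registrars and registries, so treat the patterns as a starting point. The function name is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read whois output on stdin and print the first
# lifecycle-related status found, or "none" if there is none.
# Usage: whois expiring-domain.com | expiry_stage
expiry_stage() {
    awk '
        /Domain Status:/ && /pendingDelete/    { print "pendingDelete";    found = 1; exit }
        /Domain Status:/ && /redemptionPeriod/ { print "redemptionPeriod"; found = 1; exit }
        /Domain Status:/ && /clientDeleteHold/ { print "clientDeleteHold"; found = 1; exit }
        END { if (!found) print "none" }
    '
}
```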

2020 update

Four years later, I’m still hunting for that domain. I am very patient! So I dusted off the program described here. Surprisingly, it all still works, except maybe the JSON file: the only thing wrong with it was the empty list of nameservers. I added some arbitrary GoDaddy nameservers and it all seemed good.

Conclusion

We have shown that GoDaddy’s API works, and we provide simple scripts that automate what is known as domain drop catching. If we’ve done everything right, this approach should attempt to register a domain within a couple of seconds of its release. The GoDaddy API results are a little unstable, however.

References and related

If you don’t mind paying, Fabulous.com has a domain drop catching service.

ICANN’s web site with the domain lifecycle infographic.

GoDaddy’s API documentation: http://developer.godaddy.com/

More about Raspberry Pi: http://raspberrypi.org/

I really wouldn’t bother with Cygwin; just get your hands on a real Linux environment.

Curious about some of the curl options I used? Just run curl --help. I left out descriptions of the switches I use because they didn’t fit into the narrative.

Something about my Amazon AWS experience from some years ago.

All the perl tricks I used would take another blog post to explain. It’s just stuff I learned over the years so it didn’t take much time at all.

People who buy and sell domains for a living are called domainers. They are professionals and my guide will not make you competitive with them.